According to the 2020 JetBrains developer survey, 44% of developers are now using some form of continuous integration and deployment with Docker containers. We know many developers have set this up with Docker Hub as the container registry for part of their workflow, so we decided to dig out the best practices for doing this and provide some guidance on how to get started. To support this, we will be publishing a series of blog posts over the next few weeks to answer the common questions we see with the top CI providers.

We have also heard feedback that, given the changes Docker introduced relating to network egress and the number of pulls for free users, there are questions around the best way to use Docker Hub as part of CI/CD workflows without hitting these limits. This blog post covers best practices that improve your experience and keep your consumption of Docker Hub sensible, which will mitigate the risk of hitting these limits. It also covers how to increase the limits depending on your use case.

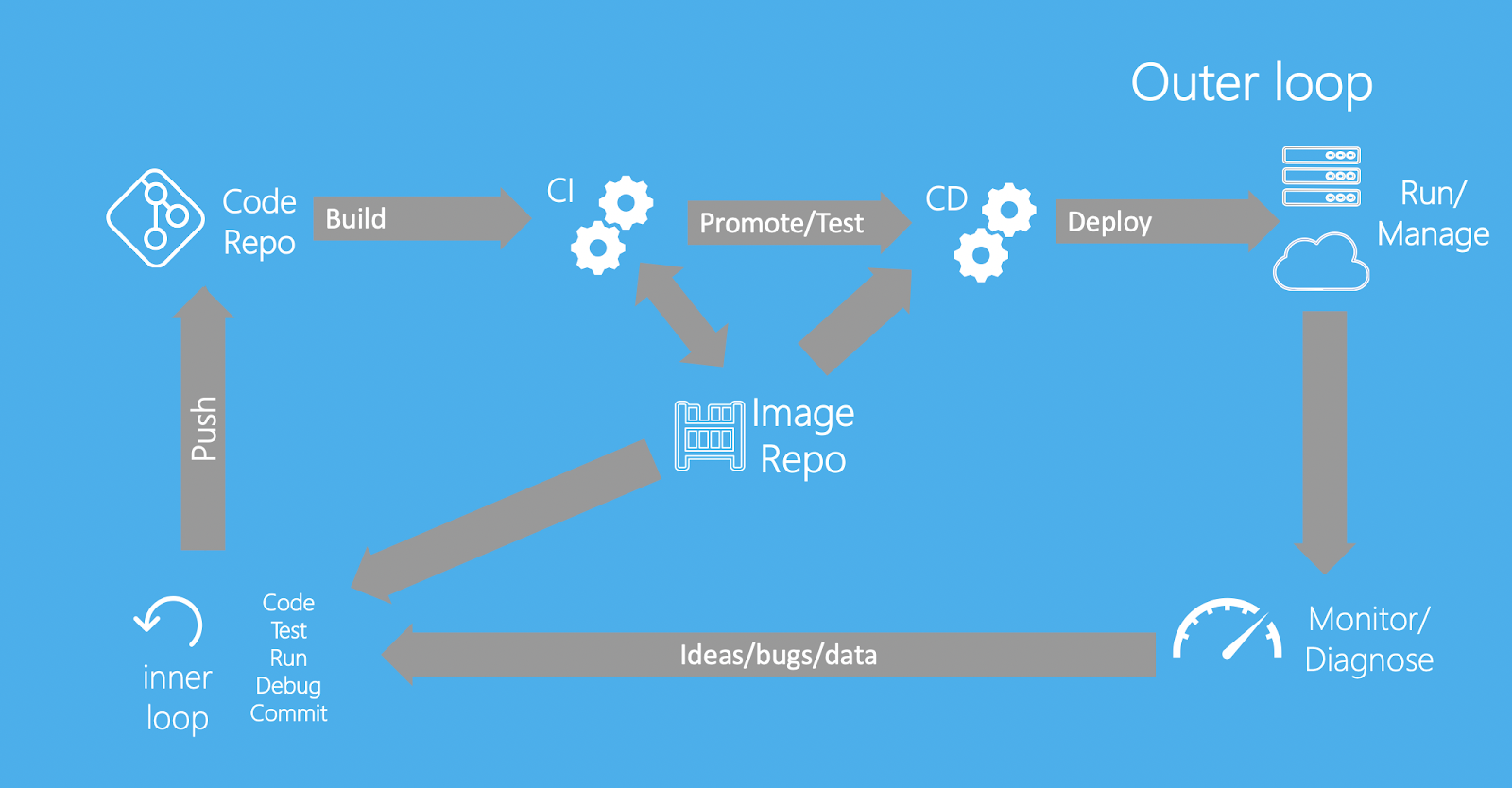

To get started, one of the most important things when working with Docker, and really any CI/CD, is to work out when you need to test with the CI and when you can do this locally. At Docker we think about how developers work in terms of their inner loop (code, build, run, test) and their outer loop (push change, CI build, CI test, deployment).

Before you think about optimizing your CI/CD, it is always important to think about your inner loop and how it relates to the outer loop (the CI). We know that most people aren’t fans of ‘debugging via the CI’, so it is always better if your inner loop and outer loop are as similar as possible. To this end, it can be a good idea to run unit tests as part of your docker build command by adding a target for them in your Dockerfile. That way, as you are making changes and rebuilding locally, you can run the same unit tests you would run in the CI on your local machine with a simple command. Chris wrote a blog post earlier in the year about Go development with Docker, which is a great example of how you can use tests in your Docker project and reuse them in the CI. This creates a shorter feedback loop on issues and reduces the number of pulls and builds your CI needs to do.
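As a rough illustration of the test target idea, here is a minimal sketch. Everything in it is an assumption made for the example: the Go project layout (a `go.mod` and `go.sum` at the repository root), the `golang:1.21` base image, the stage names, and the helper functions `write_dockerfile` and `run_unit_tests`. Your own Dockerfile will look different.

```shell
# Write a hypothetical multi-stage Dockerfile with a dedicated "test" stage.
# The path defaults to ./Dockerfile; pass another path as the first argument.
write_dockerfile() {
  cat > "${1:-Dockerfile}" <<'EOF'
# syntax=docker/dockerfile:1
FROM golang:1.21 AS base
WORKDIR /src
COPY go.mod go.sum ./
RUN go mod download
COPY . .

# Runs the unit tests; a failing test fails the build of this stage.
FROM base AS test
RUN go test ./...

# Compiles a static binary.
FROM base AS build
RUN CGO_ENABLED=0 go build -o /out/app .

# Minimal runtime image containing only the binary.
FROM scratch AS release
COPY --from=build /out/app /app
ENTRYPOINT ["/app"]
EOF
}

# Build only the "test" stage. The same command can be used on a laptop
# and in the CI, so both loops run identical unit tests.
run_unit_tests() {
  docker build --target test .
}
```

With a Dockerfile like this in place, calling `run_unit_tests` locally runs the same `go test ./...` step the CI would run. When BuildKit is the builder, a normal build of the final stage should skip the `test` stage, because nothing in `release` depends on it.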

Once you get into your actual outer loop and Docker Hub, there are a few things you can do to get the most out of your CI and deliver the fastest Docker experience.

First and foremost, stay secure! When you are setting up your CI, make sure you are using a Docker Hub access token rather than your password. You can create new access tokens from your security page on Docker Hub.
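A minimal sketch of a token-based login step might look like the following. The variable names `DOCKERHUB_USERNAME` and `DOCKERHUB_TOKEN` and the helper `hub_login` are assumptions for this example; use whatever names your CI platform's secrets store exposes.

```shell
# Log in to Docker Hub with an access token instead of a password.
# DOCKERHUB_USERNAME and DOCKERHUB_TOKEN are assumed to be injected by the
# CI platform's secrets store; the names are hypothetical.
hub_login() {
  # Stop early with a clear message if either secret is missing.
  : "${DOCKERHUB_USERNAME:?DOCKERHUB_USERNAME is not set}"
  : "${DOCKERHUB_TOKEN:?DOCKERHUB_TOKEN is not set}"

  # Pass the token on stdin so it does not appear in the process list
  # or in the shell history.
  printf '%s' "$DOCKERHUB_TOKEN" |
    docker login --username "$DOCKERHUB_USERNAME" --password-stdin
}
```

Calling `hub_login` at the start of a job means every later pull in that job is authenticated, so it counts against your account's limit rather than the anonymous one.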

Once you have this and have added it to whatever secrets store is available on your platform, you will want to look at when you push and pull in your CI/CD, and where from, depending on the change you are making. The first thing you can do here to reduce the build time and the number of calls is to make use of the build cache to reuse layers you have already pulled. This can be done on many platforms by using Buildx (BuildKit) caching functionality together with whatever cache your platform provides.
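As a sketch of what a cached build could look like, the helper below uses the Buildx local cache type. It assumes that `CACHE_DIR` points at a directory your CI platform saves and restores between runs, and that the job uses a Buildx builder that supports cache export (for example one created with `docker buildx create --use`). The helper name `build_with_cache` and the default path are hypothetical.

```shell
# Build an image while importing and exporting a local BuildKit layer cache.
# CACHE_DIR is an assumed directory that the CI platform persists between
# runs; the default path below is only a placeholder.
build_with_cache() {
  image="$1"
  cache_dir="${CACHE_DIR:-/tmp/.buildx-cache}"

  # --cache-from reuses layers from an earlier run, --cache-to writes the
  # layers of this run back (mode=max also keeps intermediate stages),
  # and --load makes the result available to the local docker daemon.
  docker buildx build \
    --cache-from "type=local,src=${cache_dir}" \
    --cache-to "type=local,dest=${cache_dir},mode=max" \
    --tag "$image" \
    --load \
    .
}
```

A job would call this as `build_with_cache myorg/myapp:dev` after restoring the cache directory, then save the directory again once the build finishes. How the directory is saved and restored depends entirely on your CI provider.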

The other change you may want to make is to have only your release images go to Docker Hub. This would mean setting up functions to push your PR images to a more local image store, where they can be quickly pulled and tested, rather than promoting them all the way to production.
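One way to express this routing is a small helper that picks the destination from the kind of CI run. This is only a sketch: the registry host `registry.internal.example:5000`, the repository `myorg/myapp`, the variables `HUB_REPO` and `LOCAL_REGISTRY`, and both function names are hypothetical placeholders.

```shell
# Print the image reference to push to, based on the kind of CI run.
# "release" builds go to Docker Hub; anything else (for example "pr")
# goes to a registry close to the CI runners.
image_ref() {
  kind="$1"
  tag="$2"
  repo="${HUB_REPO:-myorg/myapp}"
  local_registry="${LOCAL_REGISTRY:-registry.internal.example:5000}"

  case "$kind" in
    release) printf '%s\n' "docker.io/${repo}:${tag}" ;;
    *)       printf '%s\n' "${local_registry}/${repo}:${tag}" ;;
  esac
}

# Tag a locally built image for its destination and push it there.
push_image() {
  kind="$1"
  tag="$2"
  local_image="$3"
  ref="$(image_ref "$kind" "$tag")"
  docker tag "$local_image" "$ref" && docker push "$ref"
}
```

For example, `push_image pr pr-42 myapp:build` would push to the local registry, while `push_image release 1.2.0 myapp:build` would push to Docker Hub. Keeping the decision in one place makes it easy to see which runs touch Docker Hub at all.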

We know there are a lot more tips and tricks for using Docker in CI, but in light of the recent Hub rate limit changes, we think these are the top things you can do.

If you are still running into pull limits once you are authenticated, you can consider upgrading to either a Pro or a Team account. This will give you 50,000 pulls in a 24-hour period along with unlimited image retention. In the near future this will also include Image Scanning (powered by Snyk) on push of new images to Docker Hub.

Look out for the next blog post in the series, about how to put some of these practices into place with GitHub Actions, and feel free to tell us which CI providers you would like to see us cover by dropping us a message on Twitter @Docker.