We are happy to have another great AI/ML story to share from our community. In this blog post, MA Raza, Ph.D., provides a guide to building and deploying a LangChain-powered chat app with Docker and Streamlit.

This article reinforces the value that Docker brings to AI/ML projects — the speed and consistency of deployment, the ability to build once and run anywhere, and the time-saving tools available in Docker Desktop that accelerate the overall development workflow.

In this article, we will explore the process of creating a chat app using LangChain, OpenAI API, and Streamlit frameworks. We will demonstrate the use of Docker and Docker Compose for easy deployment of the app on either in-house or cloud servers.

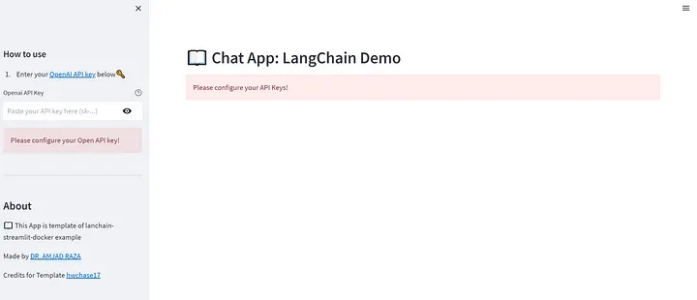

We have created and deployed a demo app (Figure 1) on Streamlit Public Cloud and Google App Engine for a quick preview.

We’ve developed a GitHub project (Figure 2) that includes comprehensive instructions and the demo chat app that runs on LangChain. We’ve also configured Poetry as the Python environment and dependency manager.

Chat app components and technologies

We’ll briefly describe the app components and frameworks utilized to create the template app.

LangChain Python framework

The LangChain framework enables developers to create applications using powerful large language models (LLMs). Our demo chat app is built on LangChain's Python framework, with the OpenAI model as the default option. However, users can swap in any other LLM they prefer.

The LangChain framework manages input prompts and chains together the responses generated by LLM APIs.
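To make the prompt-management idea concrete, here is a minimal, library-free sketch of the pattern LangChain automates: a prompt template is filled in, sent to a model, and the output of one step can feed the next. The names `SimplePrompt`, `SimpleChain`, `run_sequence`, and `echo_llm` are hypothetical and are not LangChain's API; `echo_llm` is a stub standing in for a real model call so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# A model is anything that maps a prompt string to a reply string.
LLM = Callable[[str], str]


@dataclass
class SimplePrompt:
    """Hypothetical prompt template using str.format placeholders."""
    template: str

    def render(self, **variables: str) -> str:
        return self.template.format(**variables)


@dataclass
class SimpleChain:
    """Hypothetical chain: render the prompt, then pass it to the model."""
    prompt: SimplePrompt
    llm: LLM

    def run(self, **variables: str) -> str:
        return self.llm(self.prompt.render(**variables))


def run_sequence(chains: Sequence[SimpleChain], text: str) -> str:
    """Feed each chain's output into the next chain's {text} placeholder."""
    for chain in chains:
        text = chain.run(text=text)
    return text


def echo_llm(prompt: str) -> str:
    # Stub model so the sketch runs without an API key or network access.
    return f"<reply to: {prompt}>"


summarize = SimpleChain(SimplePrompt("Summarize: {text}"), echo_llm)
translate = SimpleChain(SimplePrompt("Translate to French: {text}"), echo_llm)
print(run_sequence([summarize, translate], "Docker packages apps."))
```

In the real app, LangChain's own prompt template and chain classes play these roles, and the stub is replaced by the OpenAI model.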

OpenAI model

For demonstration purposes, we are using OpenAI API to generate responses upon submission of prompts.
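As a sketch of what such a request looks like on the wire, the snippet below builds (but does not send) a call to OpenAI's Chat Completions endpoint using only the standard library. The model name `gpt-3.5-turbo` and reading the key from the `OPENAI_API_KEY` environment variable are assumptions for illustration; in the demo app, LangChain issues this request for you.

```python
import json
import os
import urllib.request
from typing import Optional

OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: Optional[str] = None,
                       model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build a POST request carrying one user message (model name is assumed)."""
    key = api_key if api_key is not None else os.environ["OPENAI_API_KEY"]
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        OPENAI_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of a Chat Completions response body."""
    return response["choices"][0]["message"]["content"]


# To actually send it (requires network access and a valid key):
# with urllib.request.urlopen(build_chat_request("Hello!")) as resp:
#     print(extract_reply(json.load(resp)))
```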

Frontend Streamlit UI

Streamlit is a lightweight, fast way to build and share data apps. We built a simple Streamlit UI for interacting with the chat app.
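Streamlit reruns the whole script on every interaction, so a chat UI has to keep its message history in `st.session_state`, which persists across reruns. The helper below sketches that bookkeeping without importing Streamlit: it accepts any dict-like state (a plain `dict` here, `st.session_state` in an app). The `messages` key and the `reply_fn` callback are hypothetical names, not taken from the demo app.

```python
from typing import Callable, Dict, List, MutableMapping

Message = Dict[str, str]


def handle_user_message(state: MutableMapping, user_text: str,
                        reply_fn: Callable[[str], str]) -> List[Message]:
    """Append the user's message and the model's reply to the stored history."""
    if "messages" not in state:
        state["messages"] = []  # first interaction of this session
    state["messages"].append({"role": "user", "content": user_text})
    state["messages"].append({"role": "assistant", "content": reply_fn(user_text)})
    return state["messages"]


session = {}  # stands in for st.session_state
handle_user_message(session, "hello", str.upper)
handle_user_message(session, "bye", str.upper)
```

In a Streamlit script, the same function would receive `st.session_state`, and the script would render every stored message on each rerun.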

Deployment with Docker

Docker makes it possible to develop and deploy apps on any server without worrying about dependencies and environments. Once the demo app was running locally, we added Docker support.

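```dockerfile
FROM python:3.10-slim-bullseye

ENV HOST=0.0.0.0
ENV LISTEN_PORT=8080
EXPOSE 8080

RUN apt-get update && apt-get install -y git

# Install the Python dependencies before copying the code, so this layer
# stays cached until requirements.txt changes
COPY ./requirements.txt /app/requirements.txt
RUN pip install --no-cache-dir --upgrade -r /app/requirements.txt

# Use an absolute working directory, then copy the app and its Streamlit config
WORKDIR /app
COPY ./demo_app /app/demo_app
COPY ./.streamlit /app/.streamlit

CMD ["streamlit", "run", "demo_app/main.py", "--server.port", "8080"]
```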

The Dockerfile above is used to build the Docker image of the demo app. To build the image, run:

```shell
docker build -t langchain-chat-app .
```

Docker optimization for light and fast builds

When deploying enterprise applications, we have to be mindful of the resources consumed and the compute costs across the build and deployment life cycle.

We also optimized the Docker build process to reduce the image size and to speed up rebuilds after each source change. Refer to the “Blazing fast Python Docker builds with Poetry” article for details on various Docker optimization techniques.

```dockerfile
# The builder image, used to build the virtual environment
FROM python:3.11-buster AS builder

RUN apt-get update && apt-get install -y git

RUN pip install poetry==1.4.2

# Create the virtualenv inside the project (/app/.venv); the cache is disposable
ENV POETRY_NO_INTERACTION=1 \
    POETRY_VIRTUALENVS_IN_PROJECT=1 \
    POETRY_VIRTUALENVS_CREATE=1 \
    POETRY_CACHE_DIR=/tmp/poetry_cache

WORKDIR /app

# Copy only the dependency manifests so this layer is cached until they change
COPY pyproject.toml poetry.lock ./
RUN poetry install --without dev --no-root && rm -rf $POETRY_CACHE_DIR

# The runtime image, used to just run the code provided its virtual environment
FROM python:3.11-slim-buster AS runtime

# ENV and EXPOSE do not carry over between stages, so declare them here
ENV HOST=0.0.0.0 \
    LISTEN_PORT=8080 \
    VIRTUAL_ENV=/app/.venv \
    PATH="/app/.venv/bin:$PATH"
EXPOSE 8080

WORKDIR /app

# Copy the prebuilt virtualenv from the builder stage, then the app code
COPY --from=builder ${VIRTUAL_ENV} ${VIRTUAL_ENV}
COPY ./demo_app ./demo_app
COPY ./.streamlit ./.streamlit

CMD ["streamlit", "run", "demo_app/main.py", "--server.port", "8080"]
```

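This Dockerfile uses a multi-stage build with two stages. The builder stage uses Poetry to install the app's dependencies into a virtual environment at /app/.venv. The runtime stage starts from a slim image and copies in only that virtual environment and the app code, which keeps Poetry and the build tooling out of the final image. Because /app/.venv/bin is prepended to PATH, streamlit runs from the virtual environment without an explicit activation step.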
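The PATH setting is what replaces an explicit `activate` step: commands such as `streamlit` are resolved by searching PATH from left to right, so putting `/app/.venv/bin` first makes the virtual environment's executables win. The sketch below reproduces that lookup with `shutil.which` and shows the standard check a Python process can use to confirm it is running inside a virtual environment. The helper names are hypothetical.

```python
import os
import shutil
import sys
from typing import Optional


def prepend_venv(venv_dir: str, path: str) -> str:
    """Equivalent of ENV PATH="/app/.venv/bin:$PATH" in the runtime stage."""
    return os.pathsep.join([os.path.join(venv_dir, "bin"), path])


def resolve(command: str, path: str) -> Optional[str]:
    """Return the first executable named `command` found on `path`, if any."""
    return shutil.which(command, path=path)


def in_virtualenv() -> bool:
    """Inside a venv, sys.prefix points at the venv; sys.base_prefix does not."""
    return sys.prefix != sys.base_prefix


print(prepend_venv("/app/.venv", "/usr/local/bin:/usr/bin"))
```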

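Next, we’ll build the Docker image with BuildKit, Docker's modern build backend, which builds images quickly and securely. Setting DOCKER_BUILDKIT=1 enables it (it is already the default builder in Docker Desktop and in Docker Engine 23.0 and later), and --target=runtime selects the final stage of the Dockerfile:

```shell
DOCKER_BUILDKIT=1 docker build --target=runtime . -t langchain-chat-app:latest
```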
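Prefixing the command with `DOCKER_BUILDKIT=1` sets the variable only for that one `docker build` process. If you drive builds from a Python script (for example in CI), the equivalent is to pass an extended environment to `subprocess.run`. The helper names below are hypothetical; the tag and target simply mirror the command above.

```python
import os
import subprocess
from typing import Dict, List, Mapping, Tuple


def buildkit_build(tag: str = "langchain-chat-app:latest", target: str = "runtime",
                   context: str = ".",
                   base_env: Mapping[str, str] = os.environ) -> Tuple[List[str], Dict[str, str]]:
    """Return the argv and environment for a BuildKit-enabled docker build."""
    argv = ["docker", "build", f"--target={target}", context, "-t", tag]
    # A copy of the environment: the variable applies to the child process only.
    env = {**base_env, "DOCKER_BUILDKIT": "1"}
    return argv, env


def run_build() -> None:
    argv, env = buildkit_build()
    subprocess.run(argv, env=env, check=True)  # raises CalledProcessError on failure
```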

Docker Compose file

To run the app, we have also included a docker-compose.yml file with the following contents:

```yaml
version: '3'

services:
  langchain-chat-app:
    image: langchain-chat-app:latest
    # Build from the project root, the same context as `docker build .`
    build: .
    command: streamlit run demo_app/main.py --server.port 8080
    volumes:
      # Mount the local source so code edits are visible without rebuilding
      - ./demo_app/:/app/demo_app
    ports:
      - 8080:8080
```

To run the app on a local server, use the following command:

```shell
docker-compose up
```
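Scripts or CI jobs that start the app with Compose often need to wait until it is actually serving. A bare TCP connect is a weak signal, because Docker's port forwarding may accept connections before Streamlit itself is listening, so the sketch below polls an HTTP endpoint instead. The `/_stcore/health` path is Streamlit's health endpoint in recent releases; treat it as an assumption and adjust it for your Streamlit version. The function name is hypothetical.

```python
import http.client
import time
import urllib.error
import urllib.request


def wait_until_healthy(url: str = "http://localhost:8080/_stcore/health",
                       timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll `url` until it answers HTTP 200 or `timeout` seconds have passed."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except (urllib.error.URLError, http.client.HTTPException, OSError):
            pass  # not ready yet: refused, reset, or a non-2xx status (HTTPError)
        time.sleep(interval)
    return False
```

For example, run `docker-compose up -d` and then call `wait_until_healthy()` before running smoke tests against port 8080.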

Infrastructure

With Docker support, the app can be deployed to any cloud platform that runs containers. We deployed the app on the following platforms.

Streamlit Public Cloud

Deploying a Streamlit app on Streamlit Public Cloud is straightforward with a GitHub account and repository. The deployed app can be accessed via the LangChain demo.

Google App Engine

We also deployed the app on Google App Engine using Docker. The repo includes an app.yaml configuration file with the following contents:

```yaml
# With Dockerfile
runtime: custom
env: flex

# This sample incurs costs to run on the App Engine flexible environment.
# The settings below are to reduce costs during testing and are not appropriate
# for production use. For more information, see:
# https://cloud.google.com/appengine/docs/flexible/python/configuring-your-app-with-app-yaml

manual_scaling:
  instances: 1

resources:
  cpu: 1
  memory_gb: 0.5
  disk_size_gb: 10
```

To deploy the chat app on Google App Engine, we used the following commands after installing the Google Cloud CLI (gcloud):

```shell
gcloud app create --project=[YOUR_PROJECT_ID]
gcloud config set project [YOUR_PROJECT_ID]
gcloud app deploy app.yaml
```

A sample app deployed on Google App Engine (Figure 3) can be accessed through:

Deploying the app using Google Cloud Run

We can also deploy the app on Google Cloud using the Cloud Run Service of GCP. Deploying an app using Cloud Run is faster than Google App Engine.
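Cloud Run tells the container which port to listen on through the `PORT` environment variable (8080 by default), and App Engine flexible also routes traffic to port 8080, so the Dockerfiles above, which hard-code `--server.port 8080`, work with these defaults. If you would rather honour `PORT`, a small launcher like the hypothetical one below could replace the `CMD`; binding to `0.0.0.0` keeps the server reachable from outside the container.

```python
import os
from typing import List, Mapping


def streamlit_command(env: Mapping[str, str] = os.environ,
                      script: str = "demo_app/main.py") -> List[str]:
    """Build the Streamlit command line, honouring PORT when it is set."""
    port = env.get("PORT", "8080")  # Cloud Run injects PORT; 8080 is the fallback
    return ["streamlit", "run", script,
            "--server.port", port,
            "--server.address", "0.0.0.0"]


def main() -> None:
    argv = streamlit_command()
    os.execvp(argv[0], argv)  # replace this process with Streamlit
```

The image's `CMD` could then run a launcher script (for example, a hypothetical `launch.py` that calls `main()`); using `exec` means Streamlit, not a wrapper process, receives the container's stop signals.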

The Cloud Run workflow consists of three steps:

- Package the application in a container.

- Push the container image to Artifact Registry.

- Deploy the service from the pushed container.
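Artifact Registry Docker images are named `LOCATION-docker.pkg.dev/PROJECT_ID/REPOSITORY/IMAGE:TAG`. The hypothetical helper below assembles that name from its parts, which makes it easier to change the region or project consistently across the build, push, and deploy commands; the default values match the ones used in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ArtifactImage:
    """Parts of an Artifact Registry Docker image reference."""
    location: str = "australia-southeast1"
    project_id: str = "langchain-chat"
    repository: str = "app"
    image: str = "langchain-chat-app"
    tag: str = "latest"

    @property
    def registry_host(self) -> str:
        # Host name passed to `gcloud auth configure-docker`
        return f"{self.location}-docker.pkg.dev"

    @property
    def uri(self) -> str:
        return f"{self.registry_host}/{self.project_id}/{self.repository}/{self.image}:{self.tag}"
```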

Let’s walk through the steps to deploy the app using Google Cloud Run. We assume a project has already been created on Google Cloud.

1. Enable the services:

You can enable the required services using the gcloud CLI:

```shell
gcloud services enable cloudbuild.googleapis.com
gcloud services enable run.googleapis.com
```

2. Create a service account and assign roles:

With the following commands, we create a service account and grant it the appropriate roles. Replace the service account name (langchain-app-cr) and the project ID (langchain-chat) with your own values:

```shell
gcloud iam service-accounts create langchain-app-cr \
  --display-name="langchain-app-cr"

gcloud projects add-iam-policy-binding langchain-chat \
  --member="serviceAccount:langchain-app-cr@langchain-chat.iam.gserviceaccount.com" \
  --role="roles/run.invoker"

gcloud projects add-iam-policy-binding langchain-chat \
  --member="serviceAccount:langchain-app-cr@langchain-chat.iam.gserviceaccount.com" \
  --role="roles/serviceusage.serviceUsageConsumer"

gcloud projects add-iam-policy-binding langchain-chat \
  --member="serviceAccount:langchain-app-cr@langchain-chat.iam.gserviceaccount.com" \
  --role="roles/run.admin"
```
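The three `add-iam-policy-binding` calls differ only in the role. A hypothetical Python helper can generate them from one list, deriving the service account email from the standard `NAME@PROJECT_ID.iam.gserviceaccount.com` format so the project ID is typed only once. By default it only prints the commands.

```python
import shlex
import subprocess
from typing import List, Sequence

ROLES = (
    "roles/run.invoker",
    "roles/serviceusage.serviceUsageConsumer",
    "roles/run.admin",
)


def service_account_email(name: str, project_id: str) -> str:
    return f"{name}@{project_id}.iam.gserviceaccount.com"


def binding_commands(name: str, project_id: str,
                     roles: Sequence[str] = ROLES) -> List[List[str]]:
    """One `gcloud projects add-iam-policy-binding` argv per role."""
    member = f"serviceAccount:{service_account_email(name, project_id)}"
    return [
        ["gcloud", "projects", "add-iam-policy-binding", project_id,
         f"--member={member}", f"--role={role}"]
        for role in roles
    ]


def apply_bindings(name: str, project_id: str, dry_run: bool = True) -> None:
    for argv in binding_commands(name, project_id):
        if dry_run:
            print(shlex.join(argv))  # show the command instead of running it
        else:
            subprocess.run(argv, check=True)
```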

3. Build and push the Docker image:

The following commands build the image and push it to Artifact Registry. On the first push, you must first create the repository and configure Docker to authenticate with the registry, using the commands listed after this step:

```shell
DOCKER_BUILDKIT=1 docker build --target=runtime . -t australia-southeast1-docker.pkg.dev/langchain-chat/app/langchain-chat-app:latest
docker push australia-southeast1-docker.pkg.dev/langchain-chat/app/langchain-chat-app:latest
```

Here are the commands to configure Docker authentication for the registry and create the Artifact Registry repository:

```shell
gcloud auth configure-docker australia-southeast1-docker.pkg.dev

gcloud artifacts repositories create app \
  --repository-format=docker \
  --location=australia-southeast1 \
  --description="A LangChain Streamlit App" \
  --async
```

Once the image is in Artifact Registry, the last step is to deploy the Cloud Run service from the pushed image.
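The deploy step itself uses `gcloud run deploy`. As a sketch, assuming a service named `langchain-chat-app` (a hypothetical name) and the service account created earlier, the command could be assembled as below; `--image`, `--region`, `--service-account`, `--port`, and `--allow-unauthenticated` are documented flags of that command, and whether the demo should be publicly invocable is your decision.

```python
import subprocess
from typing import List


def cloud_run_deploy_command(
    image: str = "australia-southeast1-docker.pkg.dev/langchain-chat/app/langchain-chat-app:latest",
    service: str = "langchain-chat-app",  # hypothetical service name
    region: str = "australia-southeast1",
    service_account: str = "langchain-app-cr@langchain-chat.iam.gserviceaccount.com",
    public: bool = True,
) -> List[str]:
    """Build a `gcloud run deploy` argv for the pushed image."""
    argv = [
        "gcloud", "run", "deploy", service,
        f"--image={image}",
        f"--region={region}",
        f"--service-account={service_account}",
        "--port=8080",  # matches --server.port in the Dockerfile
    ]
    # A public demo lets anyone invoke the service without IAM credentials.
    argv.append("--allow-unauthenticated" if public else "--no-allow-unauthenticated")
    return argv


def deploy() -> None:
    subprocess.run(cloud_run_deploy_command(), check=True)
```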

Conclusion

This article walked through the tools and technologies for building a chat app powered by LangChain, the OpenAI API, and Streamlit, and for packaging and deploying it with Docker.

The demo app is available on both Streamlit Public Cloud and Google App Engine. Thanks to Docker support, developers can deploy it on any cloud platform they prefer.

This project can serve as a foundational template for rapidly developing apps that utilize the capabilities of LLMs. You can find more of Raza’s projects on his GitHub page.

Do you have an interesting use case or story about Docker in your AI/ML workflow? We would love to hear from you and maybe even share your story.

This post was originally published on Level Up Coding and is reprinted with permission.

Learn more

- Get the latest release of Docker Desktop.

- Vote on what’s next! Check out our public roadmap.

- Have questions? The Docker community is here to help.

- New to Docker? Get started.