Every now and then, there are waves of technology that threaten to make the previous generation of technology obsolete. There has been a lot of talk about a technique called “serverless” for writing apps. The idea is to deploy your application as a series of functions, which are called on-demand when they need to be run. You don’t need to worry about managing servers, and these functions scale as much as you need, because they are called on-demand and run on a cluster.

But serverless doesn’t mean there is no Docker – in fact, Docker is serverless. You can use Docker to containerize these functions, then run them on-demand on a Swarm. Serverless is a technique for building distributed apps and Docker is the perfect platform for building them on.

From servers to serverless

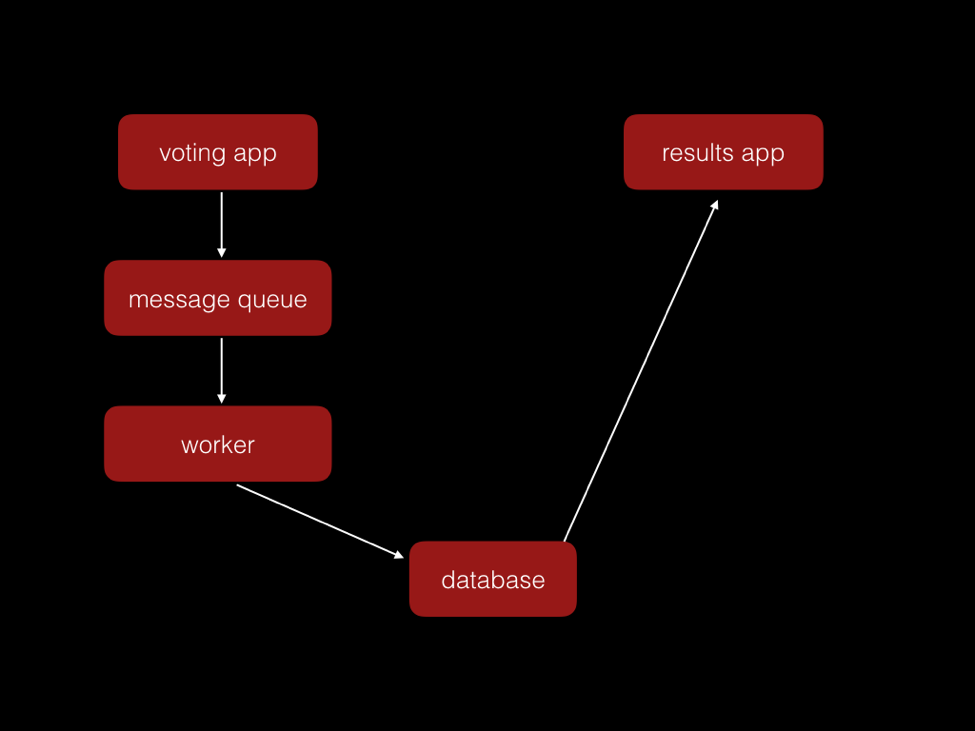

So how might we write applications like this? Let’s take as our example a voting application consisting of five services:

- Two web frontends

- A worker for processing votes in the background

- A message queue for processing votes

- A database

The background processing of votes is a very easy target for conversion to a serverless architecture. In the voting app, we can run a bit of code like this to run the background task:

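```python
import dockerrun

client = dockerrun.from_env()

# voter_id and vote come from the request the voting app is handling.
# detach=True starts the task container without waiting for it to finish.
client.run("bfirsh/serverless-record-vote-task", [voter_id, vote], detach=True)
```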

The worker and message queue can be replaced with a Docker container that is run on-demand on a Swarm, automatically scaling to demand.
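What runs inside that container is not shown here. As a rough sketch, the entry point of a task like this could be an ordinary script that takes the voter ID and the vote as command-line arguments, does one unit of work, and exits. Everything below is hypothetical (the function names, the usage string, and the JSON-lines file standing in for the real database); it is not the contents of `bfirsh/serverless-record-vote-task`.

```python
import json
import sys


def record_vote(voter_id, vote, path):
    """Append one vote to a JSON-lines file (a stand-in for the real database)."""
    with open(path, "a") as f:
        f.write(json.dumps({"voter_id": voter_id, "vote": vote}) + "\n")


def main(argv, path="votes.jsonl"):
    """Handle a single invocation: check arguments, record the vote, return an exit code."""
    if len(argv) != 2:
        print("usage: record-vote VOTER_ID VOTE", file=sys.stderr)
        return 2
    voter_id, vote = argv
    record_vote(voter_id, vote, path)
    return 0


# Inside the image, the entry point would call: sys.exit(main(sys.argv[1:]))
```

Because the process handles exactly one vote and then exits, there is nothing long-running to manage: each call to `client.run(...)` gets a fresh container.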

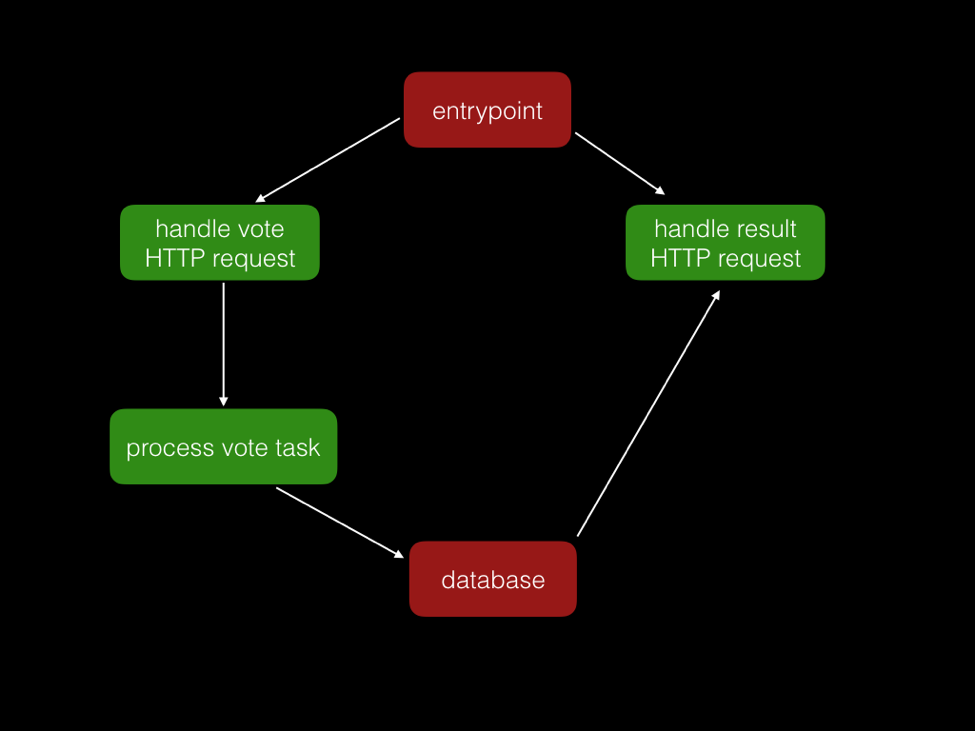

We can even eliminate the web frontends. We can replace them with Docker containers that each serve a single HTTP request, triggered by a lightweight HTTP server that spins up a Docker container for every request it receives. The heavy lifting has now moved from the long-running HTTP server to Docker containers that run on-demand, so they can automatically scale to handle load.
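To make the idea concrete, here is a minimal sketch of such a lightweight server using only Python's standard library and the `docker` command-line client. It is an illustration under assumptions, not the server used by the voting app: the image name, the `REQUEST_METHOD` and `REQUEST_PATH` environment variables, and the convention that the container writes the response body to stdout are all invented for this example.

```python
import subprocess
from http.server import BaseHTTPRequestHandler, HTTPServer

IMAGE = "example/serverless-frontend"  # hypothetical image name


def run_in_container(method, path, image=IMAGE):
    """Run one short-lived container for this request and return its stdout."""
    result = subprocess.run(
        ["docker", "run", "--rm",
         "-e", f"REQUEST_METHOD={method}",  # assumed convention for passing
         "-e", f"REQUEST_PATH={path}",      # request details to the container
         image],
        capture_output=True,
        check=True,
    )
    return result.stdout


def make_handler(runner=run_in_container):
    """Build a request handler that delegates every GET to `runner`."""

    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            try:
                body = runner("GET", self.path)
            except Exception:
                self.send_error(502, "container failed")
                return
            self.send_response(200)
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args):
            pass  # keep the sketch quiet

    return Handler


def serve(port=8000):
    """Start the long-running part: a server that only dispatches to containers."""
    HTTPServer(("", port), make_handler()).serve_forever()
```

The runner is passed in as a parameter so the dispatching logic can be exercised without a Docker daemon, for example with a plain function that returns bytes.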

Our new architecture looks something like this:

The red blocks are the continually running services and the green blocks are Docker containers that are run on-demand. This application has fewer long-running services that need managing, and by its very nature scales up automatically in response to demand (up to the size of your Swarm!).

So what can we do with this?

There are three useful techniques here which you can use in your apps:

- Run functions in your code as on-demand Docker containers

- Use a Swarm to run these on a cluster

- Run containers from containers, by passing a Docker API socket
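The third technique can be sketched as a small helper that builds a `docker run` command line and, when asked, bind-mounts the host's Docker API socket into the new container. The helper name and its parameters are hypothetical; the socket path is the usual default on Linux and may differ on your setup.

```python
DOCKER_SOCKET = "/var/run/docker.sock"  # usual default API socket on Linux


def docker_run_command(image, args=(), share_socket=False, detach=True):
    """Build the argv for `docker run`, optionally sharing the API socket."""
    cmd = ["docker", "run"]
    if detach:
        cmd.append("-d")
    if share_socket:
        # Bind-mount the host's API socket so code inside the container
        # can start sibling containers through the same daemon.
        cmd += ["-v", f"{DOCKER_SOCKET}:{DOCKER_SOCKET}"]
    cmd.append(image)
    cmd += [str(a) for a in args]
    return cmd
```

You could then start a container with `subprocess.run(docker_run_command("example/frontend", share_socket=True), check=True)`, where the image name is a placeholder. If the `docker` client is pointed at a Swarm manager rather than a single daemon, for example through the `DOCKER_HOST` environment variable, the same command should be scheduled on the cluster. Keep in mind that a container holding the API socket can control the daemon it talks to, so only share it with code you trust.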

Combining these techniques opens up loads of possibilities for how you can architect your applications. Running background work is a great example of something that works well, but a whole load of other things are possible too, for example:

- Launching a container to serve user-facing HTTP requests is probably not practical due to the latency. However, you could write a load balancer that knows how to auto-scale its own web frontends by running containers on a Swarm.

- A MongoDB container which could introspect the structure of a Swarm and launch the correct shards and replicas.
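For the load balancer idea, the interesting part is the scaling decision rather than the proxying. A minimal sketch of that decision, assuming the balancer can count its in-flight requests and its running frontend containers, might look like this. The function names, the per-container capacity of 50 requests, and the limits are all made up for illustration; the maximum stands in for the size of your Swarm.

```python
import math


def desired_frontends(in_flight, per_container=50, minimum=1, maximum=20):
    """Return how many frontend containers should be running."""
    needed = math.ceil(in_flight / per_container)
    return max(minimum, min(maximum, needed))


def scaling_action(running, in_flight, **limits):
    """Return ("start", n), ("stop", n) or ("hold", 0) for the current load."""
    target = desired_frontends(in_flight, **limits)
    if target > running:
        return ("start", target - running)
    if target < running:
        return ("stop", running - target)
    return ("hold", 0)
```

A balancer could call `scaling_action` periodically and translate a `"start"` result into that many on-demand containers on the Swarm.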

What’s next

We’ve got all these radically new tools and abstractions for building apps, and we’ve barely scratched the surface of what is possible with them. We’re still building applications like we have servers that stick around for a long time, not for the future where we have Swarms that can run code on-demand anywhere in your infrastructure.

This hopefully gives you some ideas about what you can build, but we also need your help. We have all the fundamentals to be able to start building these applications, but it’s all still in its infancy – we need better tooling, libraries, example apps, documentation, and so on.

This GitHub repository has links off to tools, libraries, examples, and blog posts. Head over there if you want to learn more, and please contribute any links you have there so we can start working together on this.

Get involved, and happy hacking!