This blog post was updated on October 25, 2024.

In today’s digital landscape, where user expectations for fast and personalized interactions are at an all-time high, conversational AI chatbots have transformed the way businesses engage with users. These AI-driven virtual assistants simulate human-like conversations and are designed to go beyond just answering questions — they can guide users through processes and even resolve issues autonomously.

A key component in building successful chatbots is effectively managing frequently asked questions (FAQs). However, with the rise of Retrieval-Augmented Generation (RAG) systems, businesses are moving beyond simple FAQ handling to more advanced solutions. RAG bots, especially extractive ones, excel at providing accurate, contextual answers by retrieving relevant information from large datasets. For organizations that need more than basic interaction, platforms like Rasa offer tools that let chatbots take actions, solve user problems, and guide users through complex workflows. This can raise user satisfaction and let businesses automate more intricate tasks.

In this article, we’ll look at how to use the Rasa framework with Docker to build and deploy a containerized, conversational AI chatbot.

Meet Rasa

One option for tackling the complexities of building chatbots is Rasa, a conversational AI framework. Rasa’s newer technologies aren’t fully open source, but they offer solutions for creating advanced chatbots. Developers can use a free developer license that supports a limited number of production conversations per month.

Rasa enables chatbots to understand natural language, extract important details, and respond intelligently based on the conversation’s context. It also offers features like CALM (Conversational AI with Language Models), its LLM-native dialogue system. With these, Rasa goes beyond simple intent recognition so that our chatbot can navigate complex conversations effectively.

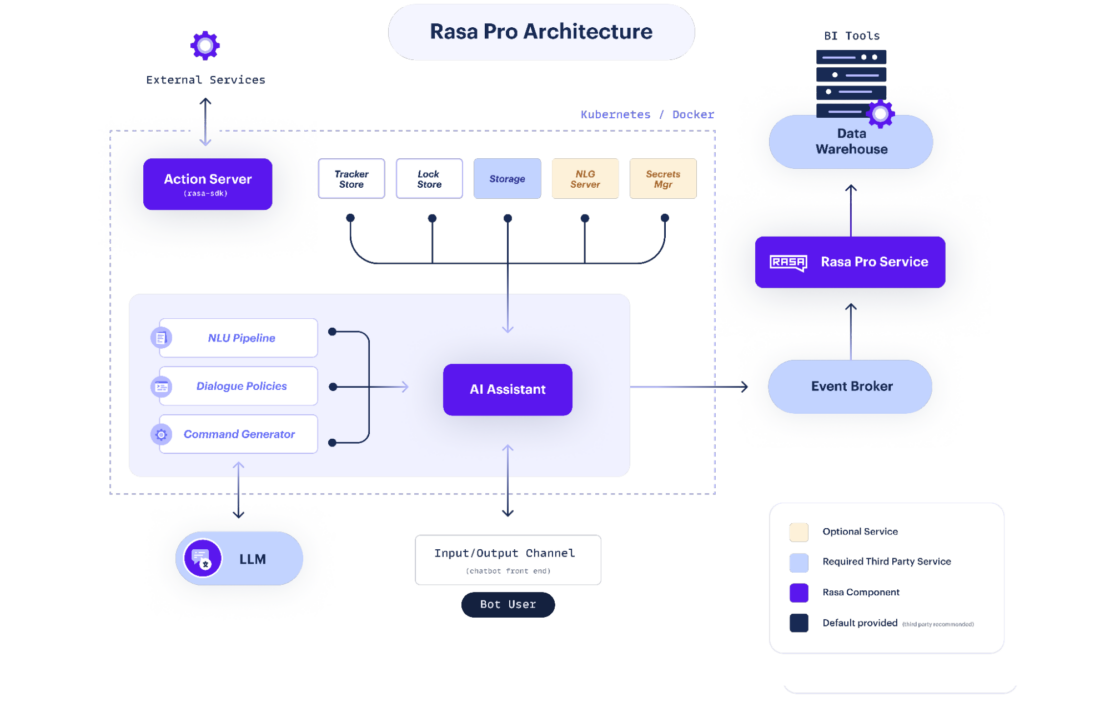

Rasa allows developers to build and deploy conversational AI applications, including chatbots and virtual assistants (Figure 1). Its flexible, customizable architecture gives developers full control over the assistant’s behavior, and it offers powerful dialogue-understanding capabilities.

CALM, Rasa’s dialogue system, takes a composite approach. It uses an LLM’s reasoning capabilities to identify the best way to help the user, while rule-based dialogue flows govern how the assistant engages with the user. This ensures that business processes are followed faithfully and that the brand experience stays consistent. The result is smarter, more resilient, and more fluent AI assistants that can be precisely controlled, transparently explained, and effectively measured. Because CALM assistants adhere to business-defined logic and can be restricted to canned responses only, they behave consistently and reliably.

Additionally, Rasa can scale to handle large volumes of conversations and can be extended with custom actions, APIs, and external services. This capability allows you to integrate additional functionalities, such as database access, external API calls, and business logic, into your chatbot.

Why containerizing Rasa is important

Containerizing Rasa brings several important benefits to the process of developing and deploying conversational AI chatbots. Let’s look at four key reasons why containerizing Rasa is important:

1. Docker provides a consistent and portable environment for running applications.

By containerizing Rasa, you can package the chatbot application, its dependencies, and its runtime environment into a self-contained unit. This approach allows you to deploy the containerized Rasa chatbot across different environments, such as development machines, staging servers, and production clusters, with minimal configuration or compatibility issues.

Docker simplifies the management of dependencies for the Rasa chatbot. By encapsulating all the required libraries, packages, and configurations within the container, you can avoid conflicts with other system dependencies and ensure that the chatbot has access to the specific versions of libraries it needs. This containerization eliminates the need for manual installation and configuration of dependencies on different systems, making the deployment process more streamlined and reliable.

2. Docker ensures the reproducibility of your Rasa chatbot’s environment.

By defining the exact dependencies, libraries, and configurations within the container, you help ensure that the chatbot runs consistently across different deployments.

3. Docker enables seamless scalability of the Rasa chatbot.

With containers, you can easily replicate and distribute instances of the chatbot across multiple nodes or servers, allowing you to handle high volumes of user interactions.

4. Docker provides isolation between the chatbot and the host system and between different containers running on the same host.

This isolation ensures that the chatbot’s dependencies and runtime environment do not interfere with the host system or other applications. It also simplifies dependency and version management, preventing conflicts and giving the chatbot a clean, isolated environment in which to operate.

Building an ML model demo application

By combining the power of Rasa and Docker, developers can create an LLM-powered chatbot demo that excels at handling frequently asked questions. The demo can be trained on a dataset of common queries and their corresponding answers, allowing the chatbot to understand and accurately respond to similar questions.

What are we building?

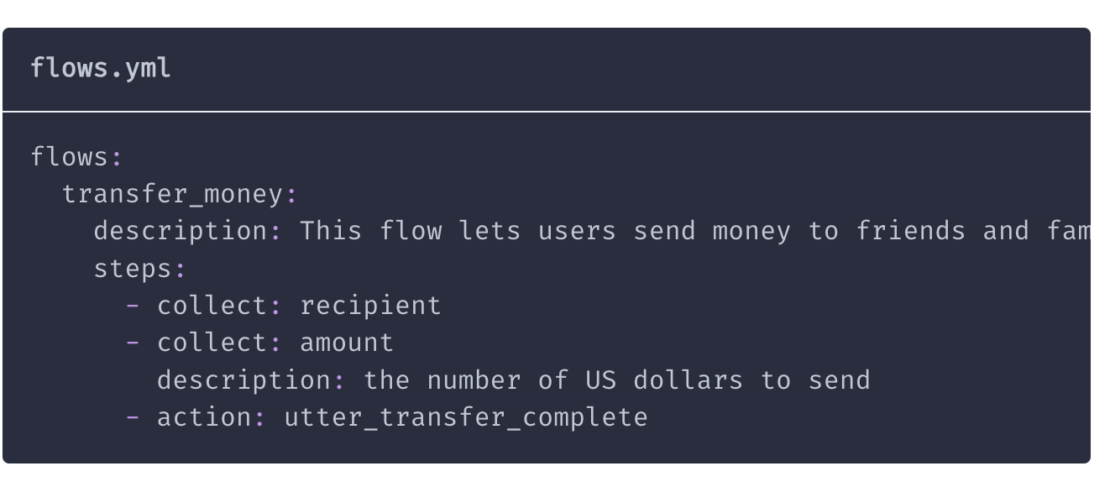

In this guide, we will create a language model-powered assistant capable of smoothly handling a money transfer while seamlessly executing your business logic and maintaining natural, conversational interactions. Let’s jump in.

Getting started

The following key components are essential to completing this walkthrough:

Deploying an ML FAQ demo app is a simple process involving the following steps:

- Clone the repository.

- Set up the configuration files.

- Initialize Rasa.

- Train and run the model.

- Bring up the WebChat UI app.

We’ll explain each of these steps below.

Cloning the project

To get started, you can clone the repository:

git clone https://github.com/dockersamples/docker-ml-faq-rasa

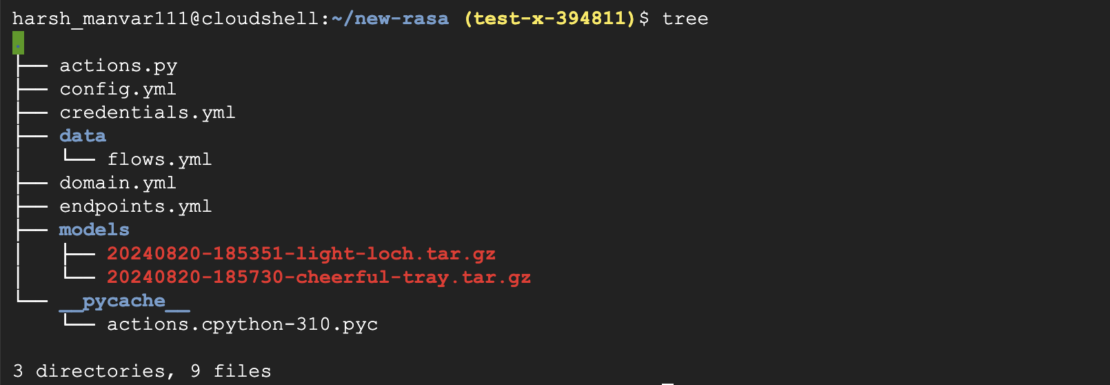

Before we move to the next step, let’s look at each of the files one by one.

File: domain.yml

This file describes what your assistant can do, how it responds, and what information it can remember or use during conversations.

File: data/flows.yml

This file sets the guidelines for how your assistant should operate and make decisions. In later sections, we’ll go over these step-by-step processes.

Here’s a breakdown of the steps for the training stories in the file:

Story: Happy path

- User greets with an intent: greet
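Here is a toy Python model of the money-transfer flow this assistant handles, to make the idea of a flow more concrete. It is a sketch under stated assumptions, not Rasa’s implementation. The Flow class, the next_step helper, and the slot and action names (recipient, amount, action_transfer_money) are all hypothetical. In simplified form, it mimics how a collect step asks for a slot only while that slot is still empty, using a response that is by convention named utter_ask_<slot>.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Flow:
    """Toy stand-in for one flow in data/flows.yml (hypothetical, not Rasa's data model)."""

    name: str
    collect: List[str]  # slots to collect, in order
    final_action: str   # action to run once every slot is filled


def next_step(flow: Flow, slots: Dict[str, Optional[str]]) -> str:
    """Return the next response or action the flow calls for.

    A slot that already has a value is skipped instead of being asked for again.
    """
    for slot in flow.collect:
        if slots.get(slot) is None:
            # By convention, the question for a slot is a response named utter_ask_<slot>.
            return f"utter_ask_{slot}"
    return flow.final_action


# Hypothetical money-transfer flow; slot and action names are illustrative only.
transfer_money = Flow(
    name="transfer_money",
    collect=["recipient", "amount"],
    final_action="action_transfer_money",
)

print(next_step(transfer_money, {}))                                      # utter_ask_recipient
print(next_step(transfer_money, {"recipient": "Joe"}))                    # utter_ask_amount
print(next_step(transfer_money, {"recipient": "Joe", "amount": "50"}))   # action_transfer_money
```

This is why a step-by-step conversation and one where the user gives every detail up front can end in the same place. The second simply skips the questions that are already answered.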

File: config.yml

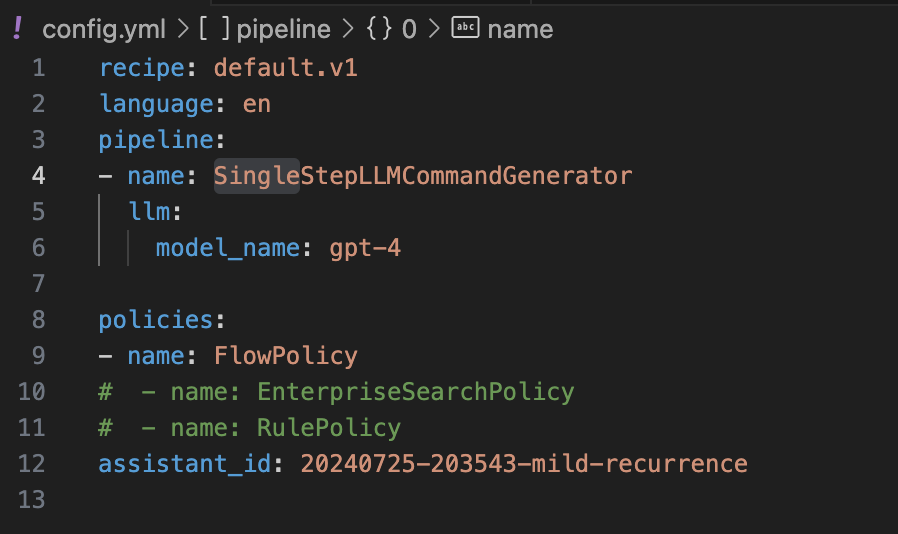

The configuration parameters for your Rasa project are contained in this file. Here is a breakdown of the configuration file:

1. Assistant ID:

- assistant_id: placeholder_default. Replace this placeholder value with a unique identifier for your assistant.

2. Rasa NLU configuration:

- Language: Set to en, which specifies the language used for natural language understanding.

- Pipeline: The pipeline is a sequence of processing steps that your assistant follows to interpret user input and generate responses.

  - SingleStepLLMCommandGenerator: This step uses a Large Language Model (LLM), specifically GPT-4, to translate each user message into commands, such as starting a flow or setting a slot, which the assistant then executes. This helps the assistant understand and respond to complex queries.

3. Rasa core configuration:

- Policies: These are the rules or strategies your assistant uses to decide what actions to take in different situations.

  - FlowPolicy: This policy manages the conversation flow, ensuring that the assistant follows a logical sequence when interacting with users.
  - The commented-out policies (like EnterpriseSearchPolicy and RulePolicy) are optional policies that you can enable if needed. They handle things like integrating with enterprise search systems or enforcing specific rules during the conversation.
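The SingleStepLLMCommandGenerator asks the LLM to reply with a short list of commands rather than free text, and the assistant then acts on those commands. The toy parser below illustrates that idea under a loud assumption: it expects one command per line, in a style such as StartFlow(transfer_money) or SetSlot(recipient, Joe). Rasa’s actual prompt, output format, and parser may differ. parse_commands and apply_commands are hypothetical helpers.

```python
import re
from typing import Dict, List, Tuple

# Matches one command per line, e.g. "SetSlot(recipient, Joe)" (assumed format).
COMMAND_RE = re.compile(r"^(\w+)\((.*)\)$")

Command = Tuple[str, List[str]]


def parse_commands(llm_output: str) -> List[Command]:
    """Turn LLM text output into (command, args) pairs, skipping malformed lines."""
    commands: List[Command] = []
    for line in llm_output.strip().splitlines():
        match = COMMAND_RE.match(line.strip())
        if match is None:
            continue
        name, raw_args = match.groups()
        # Simplification: argument values that themselves contain commas are not supported.
        args = [arg.strip() for arg in raw_args.split(",")] if raw_args.strip() else []
        commands.append((name, args))
    return commands


def apply_commands(commands: List[Command], slots: Dict[str, str], flow_stack: List[str]) -> None:
    """Apply a few command types to toy dialogue state; anything else is ignored."""
    for name, args in commands:
        if name == "StartFlow" and args:
            flow_stack.append(args[0])
        elif name == "SetSlot" and len(args) == 2:
            slots[args[0]] = args[1]
        elif name == "CancelFlow" and flow_stack:
            flow_stack.pop()


# An "all at once" message could yield several commands in one reply.
llm_output = """StartFlow(transfer_money)
SetSlot(recipient, Joe)
SetSlot(amount, 50)"""
slots: Dict[str, str] = {}
stack: List[str] = []
apply_commands(parse_commands(llm_output), slots, stack)
print(stack, slots)  # ['transfer_money'] {'recipient': 'Joe', 'amount': '50'}
```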

File: actions.py

The custom actions that your chatbot can execute are contained in this file. Retrieving data from an API, communicating with a database, or doing any other unique business logic are all examples of actions.

Explanation of the code:

- The ActionHelloWorld class extends the Action class provided by rasa_sdk.

- The name method defines the name of the custom action, which in this case is action_hello_world.

- The run method is where the logic for the custom action is implemented.

- Within the run method, the dispatcher object is used to send a message back to the user. In this example, the message sent is “Hello World!”.

- The return [] statement ends the action without returning any events, so the action makes no changes to the conversation state (such as setting slots).
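For reference, here is a sketch of an actions.py consistent with the description above. The Action base class and the dispatcher.utter_message(text=...) call come from rasa_sdk. In a real project, you would typically also import Tracker (from rasa_sdk) and CollectingDispatcher (from rasa_sdk.executor) for type hints. The fallback Action class exists only so that the sketch can be tried without rasa_sdk installed; it is not part of the SDK.

```python
from typing import Any, Dict, List, Text

try:
    # The real base class, available after `pip install rasa-sdk`.
    from rasa_sdk import Action
except ImportError:
    # Minimal stand-in so the sketch runs without rasa_sdk; NOT part of the SDK.
    class Action:  # type: ignore[no-redef]
        pass


class ActionHelloWorld(Action):
    def name(self) -> Text:
        # The name the rest of the project (e.g. the actions list in domain.yml) uses.
        return "action_hello_world"

    def run(self, dispatcher: Any, tracker: Any, domain: Dict[Text, Any]) -> List[Dict[Text, Any]]:
        # Send a message back to the user through the dispatcher.
        dispatcher.utter_message(text="Hello World!")
        # No events: the action does not change the conversation state.
        return []
```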

File: endpoints.yml

The endpoints for your chatbot are specified in this file, including any external services or the webhook URL for any custom actions.

Initializing Rasa

Prerequisites:

- Rasa Pro license key (free; request one from Rasa’s license request page)

- An API key from OpenAI or another LLM provider

- Rasa version 3.8.0 or later

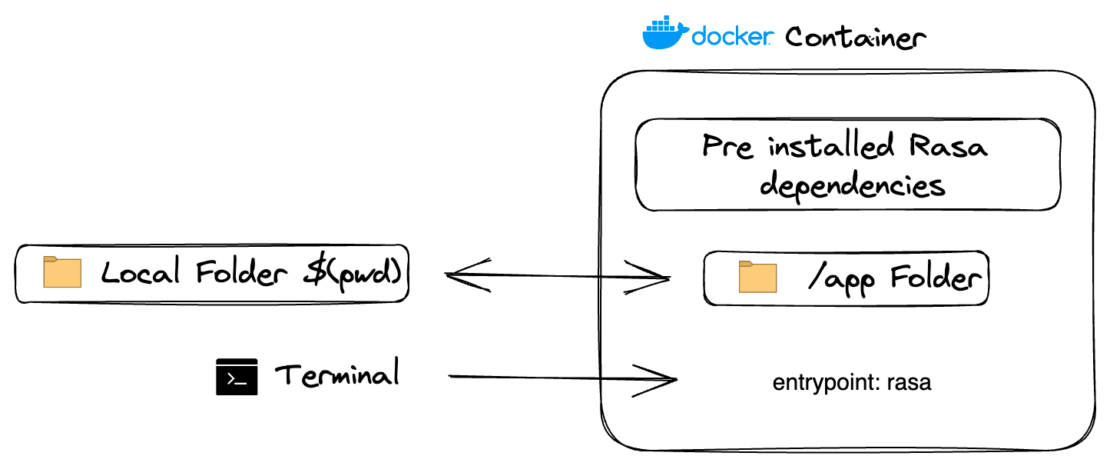

This command initializes a new Rasa project in the current directory, which is mounted into the container at /app:

docker run -v ./:/app -e RASA_PRO_LICENSE=${RASA_PRO_LICENSE} -e OPENAI_API_KEY=${OPENAI_API_KEY} europe-west3-docker.pkg.dev/rasa-releases/rasa-pro/rasa-pro:3.9.3 init --template tutorial --no-prompt

Before running it, set the RASA_PRO_LICENSE and OPENAI_API_KEY environment variables to your own license key and API key.

The command sets up the basic directory structure and creates the essential files for a Rasa project, such as actions.py, domain.yml, and data/flows.yml. The --template tutorial option starts from Rasa’s tutorial project. The --no-prompt option accepts the default answers instead of asking questions interactively. The :3.9.3 tag on the europe-west3-docker.pkg.dev/rasa-releases/rasa-pro/rasa-pro image pins the specific Rasa Pro version to use.

Training the model

The following command trains a Rasa model using the data and configuration specified in the project directory:

docker run -v ./:/app -e RASA_PRO_LICENSE=${RASA_PRO_LICENSE} -e OPENAI_API_KEY=${OPENAI_API_KEY} europe-west3-docker.pkg.dev/rasa-releases/rasa-pro/rasa-pro:3.9.3 train

The -v flag mounts the current directory into the container at /app, giving Rasa access to the project files. The train command automatically finds the configuration and training data in the project, trains a model, and saves it to the models directory.
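If you prefer to script the init, train, inspect, and run steps, you can assemble the same docker run invocations in Python. The sketch below is a hypothetical helper, not part of Rasa or Docker. It builds the argument list and fails early if RASA_PRO_LICENSE or OPENAI_API_KEY is missing. It passes the keys as -e NAME, so docker reads their values from the environment instead of from the command line.

```python
import os
import subprocess
from typing import Dict, List, Optional, Sequence

RASA_IMAGE = "europe-west3-docker.pkg.dev/rasa-releases/rasa-pro/rasa-pro:3.9.3"
REQUIRED_ENV = ("RASA_PRO_LICENSE", "OPENAI_API_KEY")


def rasa_docker_cmd(
    rasa_args: Sequence[str],
    env: Optional[Dict[str, str]] = None,
    publish_port: bool = False,
    detach: bool = False,
) -> List[str]:
    """Build a `docker run` argument list for one Rasa Pro CLI command (hypothetical helper)."""
    env = dict(os.environ) if env is None else env
    missing = [name for name in REQUIRED_ENV if not env.get(name)]
    if missing:
        # Fail early instead of starting a container without credentials.
        raise ValueError("missing environment variables: " + ", ".join(missing))

    cmd = ["docker", "run"]
    if detach:
        cmd.append("-d")  # run in the background
    if publish_port:
        cmd += ["-p", "5005:5005"]  # expose the Rasa server on the host
    cmd += ["-v", f"{os.getcwd()}:/app"]  # mount the project directory
    for name in REQUIRED_ENV:
        # "-e NAME" without a value makes docker copy NAME from its own environment,
        # which keeps the secret itself out of the argument list.
        cmd += ["-e", name]
    cmd.append(RASA_IMAGE)
    cmd += list(rasa_args)
    return cmd


def run_rasa(rasa_args: Sequence[str], publish_port: bool = False, detach: bool = False) -> None:
    """Run the command; docker inherits this process's environment, including the keys."""
    subprocess.run(rasa_docker_cmd(rasa_args, publish_port=publish_port, detach=detach), check=True)
```

For example, run_rasa(["train"]) trains the model, and run_rasa(["run", "-m", "models", "--enable-api", "--cors", "*", "--debug"], publish_port=True, detach=True) starts the server. Because the arguments are passed as a list without a shell, the "*" needs no quoting.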

Inspecting the model

This command runs the trained Rasa model in interactive mode, enabling you to test the chatbot’s responses:

docker run -p 5005:5005 -v ./:/app \
  -e RASA_PRO_LICENSE=${RASA_PRO_LICENSE} \
  -e OPENAI_API_KEY=${OPENAI_API_KEY} \
  europe-west3-docker.pkg.dev/rasa-releases/rasa-pro/rasa-pro:3.9.3 \
  inspect

The command loads the latest trained model from the models directory in the current project and starts a server on port 5005. You can then have interactive conversations with the chatbot and see the model’s responses.

To verify that Rasa is running, send a request to the server with curl:

curl http://localhost:5005

Hello from Rasa: 3.9.3
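You can run the same check from Python with only the standard library, which is handy in a startup script or a CI smoke test. This sketch assumes that the server answers GET / with the plain-text greeting shown above. The function names are hypothetical.

```python
import re
import urllib.request
from typing import Optional

# The root endpoint answers with a greeting such as "Hello from Rasa: 3.9.3".
GREETING_RE = re.compile(r"Hello from Rasa: (\S+)")


def parse_rasa_version(body: str) -> Optional[str]:
    """Extract the version from the greeting, or return None if it is not there."""
    match = GREETING_RE.search(body)
    return match.group(1) if match else None


def rasa_version(base_url: str = "http://localhost:5005", timeout: float = 5.0) -> Optional[str]:
    """Query the running server; raises urllib.error.URLError if nothing is listening."""
    with urllib.request.urlopen(base_url, timeout=timeout) as response:
        return parse_rasa_version(response.read().decode("utf-8"))
```

With the container running, rasa_version() should return the version string, for example "3.9.3".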

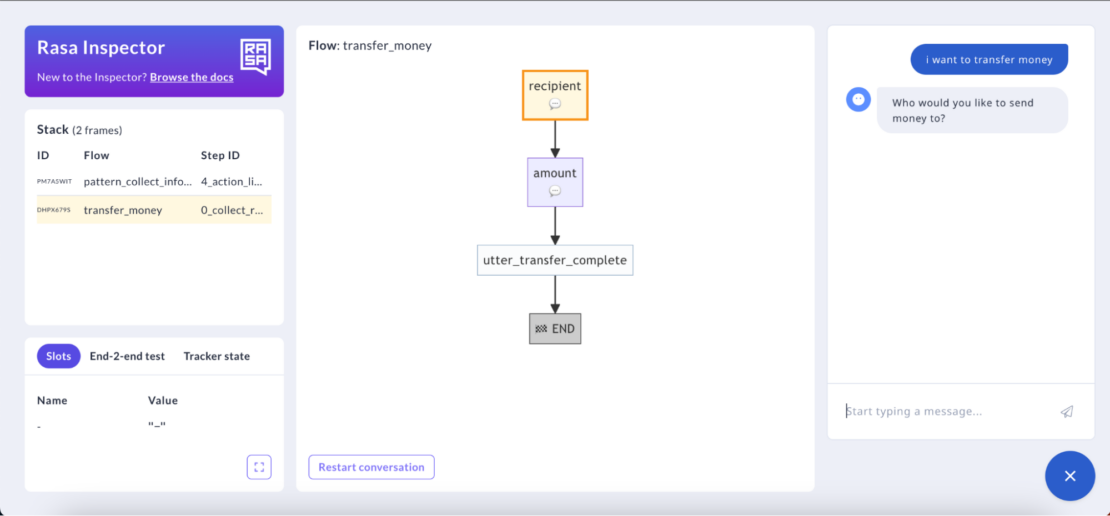

Using Inspector

The inspect command above runs the trained Rasa model as a server with a web UI for inspecting and testing it. Open this URL in your browser:

http://localhost:5005/webhooks/inspector/inspect.html

The -p flag maps port 5005 inside the container to the same port on the host, making the Rasa server accessible.

Rasa Inspector is a valuable tool within the Rasa framework, designed to help developers and conversational AI designers understand and refine their chatbots. It provides a user-friendly interface to visualize and debug the decision-making process of a Rasa model. By using Rasa Inspector, you can see how your bot interprets user inputs, which intents are detected, and how the conversation flows based on your training data. This tool is particularly useful for identifying and resolving issues with intent classification, entity extraction, and dialogue management.

Running the model

To let the chatbot communicate over sockets, we need to enable its socketio channel. Open the credentials.yml file, uncomment the socketio snippet, and set the values shown in the following code block. Once these changes are made, you can run your Rasa model.

socketio:
  user_message_evt: user_uttered
  bot_message_evt: bot_uttered
  session_persistence: false

The -d flag runs the container in the background. The -m models flag specifies the directory containing the trained model. The --enable-api flag enables the Rasa HTTP API, allowing external applications to interact with the chatbot. The --cors "*" flag enables cross-origin resource sharing (CORS) for requests from any origin. The --debug flag enables debug mode for more detailed logging and troubleshooting.

docker run -d -p 5005:5005 -v ./:/app \
  -e RASA_PRO_LICENSE=${RASA_PRO_LICENSE} \
  -e OPENAI_API_KEY=${OPENAI_API_KEY} \
  europe-west3-docker.pkg.dev/rasa-releases/rasa-pro/rasa-pro:3.9.3 \
  run -m models --enable-api --cors "*" --debug
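Besides the socketio channel configured above, Rasa offers a REST input channel that is convenient for quick tests over plain HTTP. The sketch below assumes that this channel is enabled (a rest: entry in credentials.yml). It posts a JSON body with sender and message fields to /webhooks/rest/webhook, then reads the text of the bot messages from the JSON list returned. The helper names and the sender ID are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict, List

# Assumes the REST input channel is enabled (a `rest:` entry in credentials.yml).
REST_WEBHOOK = "http://localhost:5005/webhooks/rest/webhook"


def build_request(sender: str, message: str, url: str = REST_WEBHOOK) -> urllib.request.Request:
    """Build the POST request; `sender` identifies the conversation."""
    body = json.dumps({"sender": sender, "message": message}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def bot_texts(response_body: str) -> List[str]:
    """Return the text of each bot message; messages without a text field are skipped."""
    messages: List[Dict[str, Any]] = json.loads(response_body)
    return [m["text"] for m in messages if "text" in m]


def send_message(sender: str, message: str, timeout: float = 30.0) -> List[str]:
    """Send one user message and return the bot's text replies."""
    with urllib.request.urlopen(build_request(sender, message), timeout=timeout) as response:
        return bot_texts(response.read().decode("utf-8"))
```

With the server running and the channel enabled, send_message("test_user", "I want to send money") should return the assistant’s replies as a list of strings.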

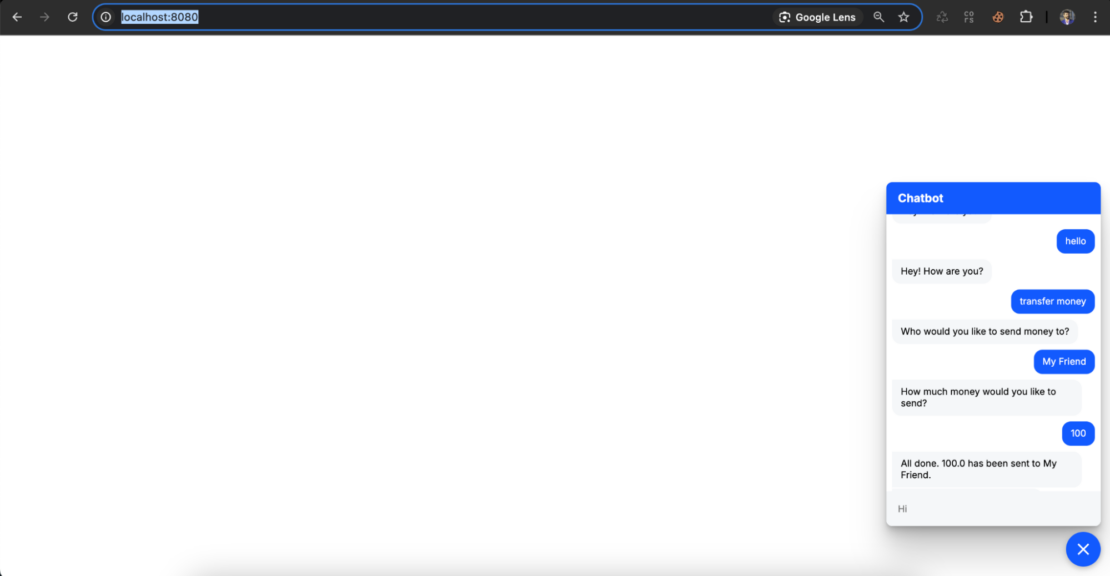

Creating the bot web UI

docker run -p 8080:80 harshmanvar/docker-ml-faq-rasa:webchat

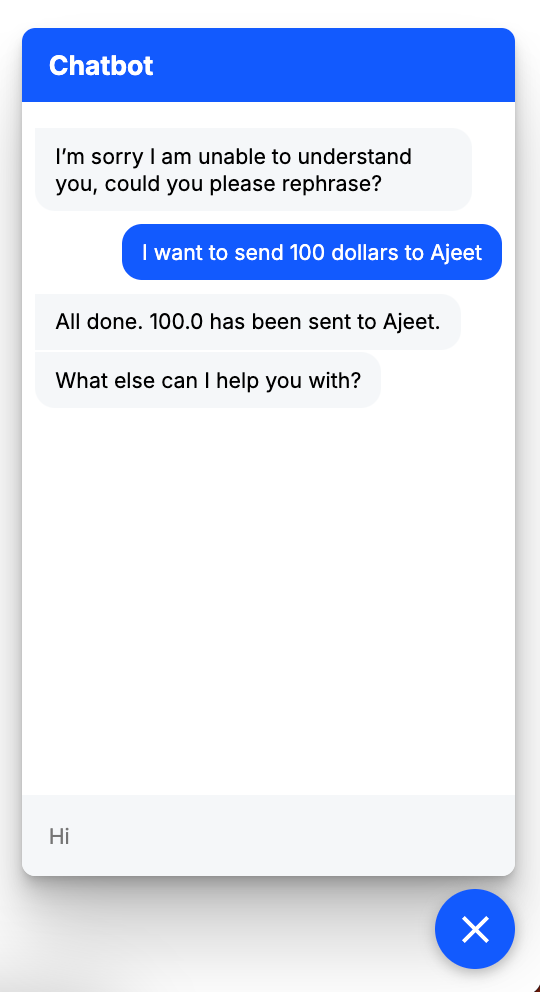

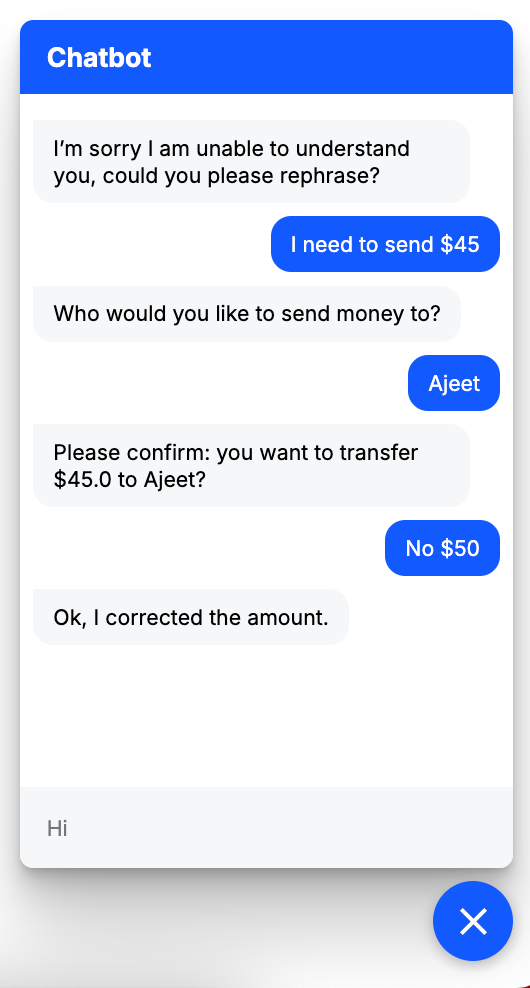

Open http://localhost:8080 in the browser (Figure 7).

Chatbot’s behavior

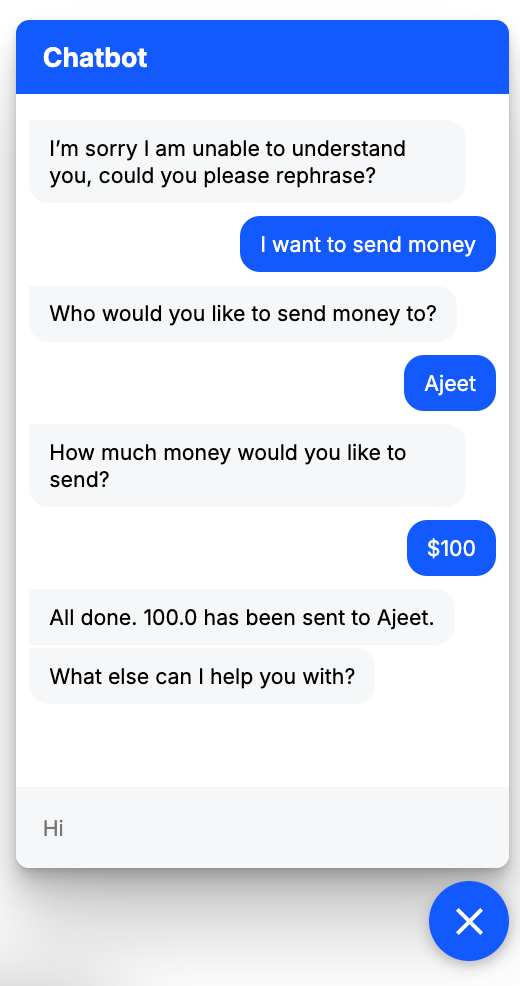

In the first “happy path” scenario, the chatbot guides us step by step, asking how much we want to send before completing the transfer. Figure 8 shows this interaction.

The second scenario, “all at once,” shows what happens when we provide all the details (our friend’s name, the amount, and so on) in a single message. Figure 9 shows how the chatbot processes this efficiently.

The “change in mind” scenario shows how the chatbot adapts when we change our request mid-conversation (Figure 10).

Visit the Rasa documentation to learn more about how the various project files are used and how they work together.

Defining services using a Compose file

Here’s how our services appear within a Docker Compose file:

Our sample application has the following parts:

- The rasa service is based on the rasa-pro/rasa-pro:3.9.3 image.

- It exposes port 5005 to communicate with the Rasa API.

- The current directory (./) is mounted as a volume inside the container, making the Rasa project files accessible.

- The command run -m models --enable-api --cors "*" --debug starts the Rasa server with the specified options.

- The webchat service builds its image from the Dockerfile-webchat file located in the current build context (.).

- Port 8080 on the host is mapped to port 80 inside the container to access the webchat interface.

You can clone the repository or download the docker-compose.yml file directly from GitHub.

Bringing up the container services

You can start the WebChat application using Docker Compose. Update the environment variables (RASA_PRO_LICENSE and OPENAI_API_KEY) in the Compose file, then run the docker compose up -d --build command.

Then, use the docker compose ps command to confirm that your stack is running properly.
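To script this check, you can use a sketch like the following. It runs docker compose ps --all --format json and reports any service that is not in the running state. It assumes the service names rasa and webchat from the Compose file described above, and the Service and State fields in the JSON output. Depending on the Compose version, that output is either a single JSON array or one JSON object per line, so the parser accepts both. The helper names are hypothetical.

```python
import json
import subprocess
from typing import Dict, List


def parse_compose_ps(output: str) -> Dict[str, str]:
    """Map service name -> state from `docker compose ps --format json` output."""
    text = output.strip()
    if not text:
        return {}
    if text.startswith("["):
        entries = json.loads(text)  # older Compose versions: one JSON array
    else:
        # Newer Compose versions: one JSON object per line.
        entries = [json.loads(line) for line in text.splitlines() if line.strip()]
    return {entry["Service"]: entry["State"] for entry in entries}


def services_not_running(states: Dict[str, str], expected: List[str]) -> List[str]:
    """List expected services that are missing or not in the "running" state."""
    return [name for name in expected if states.get(name) != "running"]


def check_stack(expected: List[str]) -> List[str]:
    # --all includes stopped containers, so exited services show up too.
    result = subprocess.run(
        ["docker", "compose", "ps", "--all", "--format", "json"],
        capture_output=True, text=True, check=True,
    )
    return services_not_running(parse_compose_ps(result.stdout), expected)
```

An empty list from check_stack(["rasa", "webchat"]) means that both services are up.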

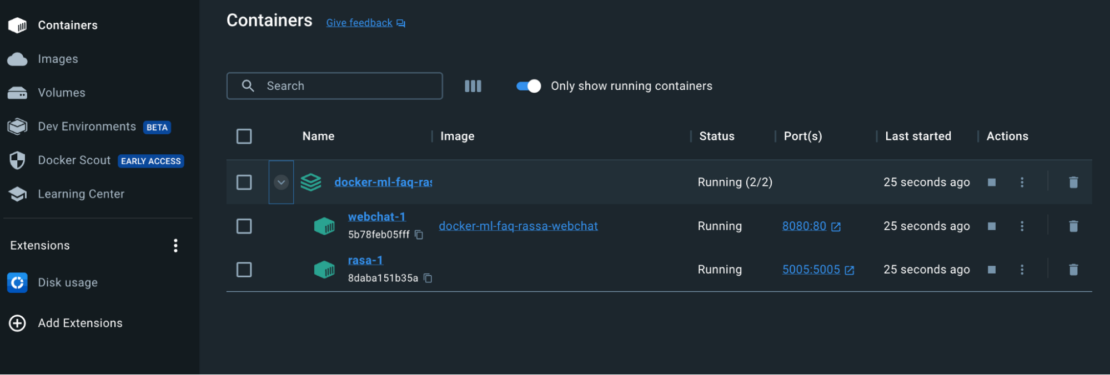

Viewing the containers via Docker Dashboard

You can also leverage the Docker Dashboard to view your container’s ID and easily access or manage your application (Figure 11):

Conclusion

Congratulations! You’ve learned how to containerize a Rasa application with Docker. With a single YAML file, we’ve demonstrated how Docker Compose helps you build and deploy an ML demo app in seconds. With just a few extra steps, you can apply this tutorial to building applications of even greater complexity. Happy developing!

Learn more

- Read the Docker Labs GenAI series.

- Subscribe to the Docker Newsletter.

- Get the latest release of Docker Desktop.

- Have questions? The Docker community is here to help.

- New to Docker? Get started.