Docker is used by millions of developers to optimize the setup and deployment of development environments and application stacks. As artificial intelligence (AI) and machine learning (ML) are becoming key components of many applications, the core benefits of Docker are now doing more heavy lifting and accelerating the development cycle.

Gartner predicts that “by 2027, over 90% of new software applications that are developed in the business will contain ML models or services as enterprises utilize the massive amounts of data available to the business.”

This article, written by our partner DataStax, outlines how Kaskada, open source, and Docker are helping developers optimize their AI/ML efforts.

Introduction

As a data scientist or machine learning practitioner, your work is all about experimentation. You start with a hunch about the story your data will tell, but often you’ll only find an answer after false starts and failed experiments. The faster you can iterate and try things, the faster you’ll get to answers. In many cases, the insights gained from solving one problem are applicable to other related problems. Experimentation can lead to results much faster when you’re able to build on the prior work of your colleagues.

But there are roadblocks to this kind of collaboration. Without the right tools, data scientists waste time managing code dependencies, resolving version conflicts, and repeatedly going through complex installation processes. Building on the work of colleagues can be hard due to incompatible environments — the dreaded “it works for me” syndrome.

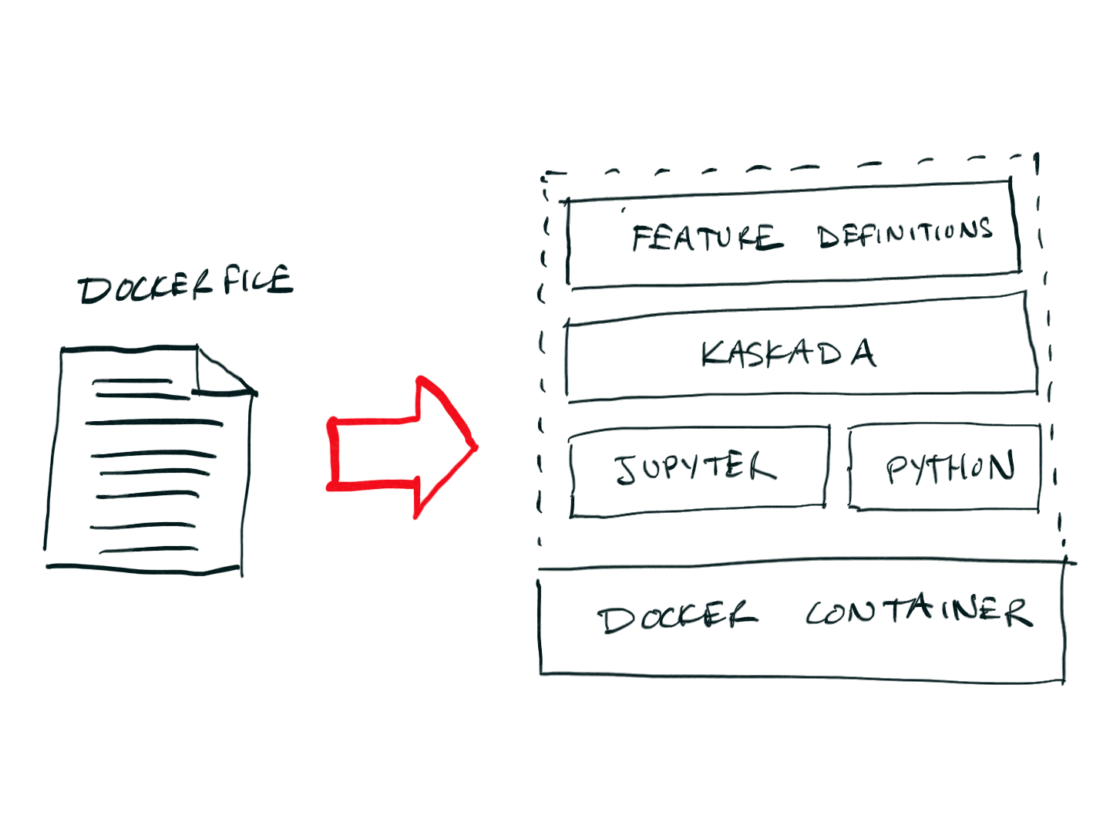

Enter Docker and Kaskada, which offer a similar solution to these different problems: a declarative language designed specifically for the problem at hand and an ecosystem of supporting tools (Figure 1).

For Docker, this means the Dockerfile format, which describes the exact steps needed to build a reproducible development environment, and an ecosystem of tools for working with containers (Docker Hub, Docker Desktop, Kubernetes, etc.). With Docker, data scientists can package their code and dependencies into an image that can run as a container on any machine, eliminating the need for complex installation processes and ensuring that colleagues can work with the exact same development environment.

With Kaskada, data scientists can compute and share features as code and use them throughout the ML lifecycle, from training models locally to maintaining real-time features in production. The computations required to produce training datasets are often complex and expensive because standard tools like Spark have difficulty reconstructing the temporal context required for training real-time ML models.

Kaskada solves this problem by providing a way to compute features, especially those that require reasoning about time, and to share feature definitions as code. This approach allows data scientists to collaborate with each other and with machine learning engineers on feature engineering and reuse code across projects. Increased reproducibility dramatically speeds cycle times to get models into production, increases model accuracy, and ultimately improves machine learning results.
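To make "reasoning about time" concrete, here is a minimal plain-Python sketch of a point-in-time feature, meaning the value a feature would have had at the moment a prediction was made. It does not use Kaskada's API. The event data and the `purchases_as_of` helper are hypothetical and exist only for illustration.

```python
from datetime import datetime

# Hypothetical purchase events: (user_id, timestamp)
purchases = [
    ("alice", datetime(2023, 1, 1, 10, 0)),
    ("alice", datetime(2023, 1, 3, 9, 30)),
    ("bob", datetime(2023, 1, 2, 12, 0)),
]


def purchases_as_of(events, user_id, as_of):
    """Count a user's purchases that had happened by `as_of` (inclusive)."""
    return sum(1 for uid, ts in events if uid == user_id and ts <= as_of)


prediction_time = datetime(2023, 1, 2, 0, 0)

# Point-in-time correct: only events known at prediction time are counted.
feature_at_prediction = purchases_as_of(purchases, "alice", prediction_time)

# Naive: aggregating over all history lets the later purchase leak in.
feature_with_leakage = purchases_as_of(purchases, "alice", datetime.max)

print(feature_at_prediction, feature_with_leakage)
```

A model trained on the second value would see information that is not available when it makes real-time predictions, which is the kind of mismatch that temporal context is meant to prevent.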

Example walkthrough

Let’s see how Docker and Kaskada improve the machine learning lifecycle by walking through a simplified example. Imagine you’re trying to build a real-time model for a mobile game and want to predict an outcome, for example, whether a user will pay for an upgrade.

Setting up your experimentation environment

To begin, start a Docker container that comes preinstalled with Jupyter and Kaskada:

    docker run --rm -p 8888:8888 kaskadaio/jupyter
    open <jupyter URL from logs>

This step instantly gives you a reproducible development environment to work in, but you might want to customize this environment. Additional development tools can be added by creating a new Dockerfile using this image as the “base image”:

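    # Dockerfile
    FROM kaskadaio/jupyter
    # Copy the requirements file into the image's working directory
    COPY requirements.txt .
    # Install the extra dependencies on top of the base image
    RUN pip install -r requirements.txt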

In this example, you started with an image that includes Jupyter and Kaskada, copied over a requirements file, and installed all the dependencies in it. You now have a new Docker image that you can use as a data science workbench and share across your organization: anyone with this Dockerfile can reproduce your environment by building the image and running it as a container.

    docker build -t experimentation_env .
    docker run --rm -p 8888:8888 experimentation_env

The power of Docker comes from the fact that you’ve created a file that describes your environment and now you can share this file with others.
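If you want to confirm from inside a notebook that the running environment matches the pinned requirements, a small check like the following can help. This is only a sketch: the `find_mismatches` helper, the example pins, and the `installed` dictionary are hypothetical, and the parser only understands exact `name==version` pins.

```python
from importlib import metadata


def find_mismatches(requirement_lines, get_version=metadata.version):
    """Return {package: (pinned, installed)} for pins that do not match."""
    mismatches = {}
    for line in requirement_lines:
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" not in line:
            continue  # this sketch only handles exact `name==version` pins
        name, pinned = (part.strip() for part in line.split("==", 1))
        try:
            installed_version = get_version(name)
        except metadata.PackageNotFoundError:
            installed_version = None
        if installed_version != pinned:
            mismatches[name] = (pinned, installed_version)
    return mismatches


# Hypothetical pins and a stand-in for the installed environment.
pins = ["pandas==2.0.1", "scikit-learn==1.2.2  # modeling", "# comment only"]
installed = {"pandas": "2.0.1", "scikit-learn": "1.3.0"}

print(find_mismatches(pins, get_version=installed.get))
```

In a real container you would read the lines from `requirements.txt` and keep the default `get_version`, which looks up installed package versions through the standard library's `importlib.metadata`.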

Training your model

Inside a new Jupyter notebook, you can begin the process of exploring solutions to the problem — predicting purchase behavior. To begin, you’ll create tables to organize the different types of events produced by the imaginary game.

    %pip install kaskada
    %load_ext fenlmagic

    # Import paths assume the Kaskada Python client used in this walkthrough
    from kaskada import table
    from kaskada.api.session import LocalBuilder

    # Start a local Kaskada session
    session = LocalBuilder().build()

    # Create one table per event type, keyed by user and ordered by event time
    table.create_table(
        table_name="GamePlay",
        time_column_name="timestamp",
        entity_key_column_name="user_id",
    )
    table.create_table(
        table_name="Purchase",
        time_column_name="timestamp",
        entity_key_column_name="user_id",
    )

    # Load historical events into each table
    table.load(
        table_name="GamePlay",
        file="historical_game_play_events.parquet",
    )
    table.load(
        table_name="Purchase",
        file="historical_purchase_events.parquet",
    )

Kaskada is easy to install and use locally. After installing, you’re ready to start creating tables and loading event data into them. Kaskada’s vectorized engine is built for high-performance local execution, and, in many cases, you can start experimenting on your data locally, without the complexity of managing distributed compute clusters.

Kaskada’s query language was designed to make it easy for data scientists to build features and training examples directly from raw event data. A single query can replace complex ETL and pre-aggregation pipelines, and Kaskada’s unique temporal operations unlock native time travel for building training examples “as of” important times in the past.

    %%fenl --var training

    # Create views derived from the source tables
    let GameVictory = GamePlay | when(GamePlay.won)
    let GameDefeat = GamePlay | when(not GamePlay.won)

    # Compute some features as inputs to our model
    let features = {
      loss_duration: sum(GameDefeat.duration),
      purchase_count: count(Purchase),
    }

    # Observe our features at the time of a player's second consecutive defeat
    let example = features
      | when(count(GameDefeat, window=since(GameVictory)) == 2)
      | shift_by(hours(1))

    # Compute a target value
    # In this case comparing purchase count at prediction and label time
    let target = count(Purchase) > example.purchase_count

    # Combine feature and target values computed at the different times
    in extend(example, {target})

In the preceding query, you first filter the events, build simple features, observe them at the points in time when your model will be used to make predictions, and then combine the features with the value you want to predict, computed an hour later. Kaskada lets you describe all these operations “from scratch,” starting with raw events and ending with an ML training dataset.
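To see what these steps compute, here is a rough plain-Python sketch of the same logic on a handful of made-up events for a single player. It is not how Kaskada evaluates the query. The event data and the `count_purchases` and `build_examples` helpers are hypothetical. The sketch assumes that `loss_duration` totals the durations of lost games and that examples are observed at a player's second consecutive defeat.

```python
from datetime import datetime, timedelta

# Hypothetical events for one player.
# Game plays: (timestamp, won, duration_in_seconds)
game_plays = [
    (datetime(2023, 1, 1, 10, 0), True, 30),
    (datetime(2023, 1, 1, 10, 5), False, 40),
    (datetime(2023, 1, 1, 10, 10), False, 50),
    (datetime(2023, 1, 1, 10, 15), False, 20),
]
# Purchases: timestamps only
purchase_times = [datetime(2023, 1, 1, 9, 0), datetime(2023, 1, 1, 10, 40)]


def count_purchases(times, as_of):
    """Number of purchases made at or before `as_of`."""
    return sum(1 for ts in times if ts <= as_of)


def build_examples(plays, purchases, label_delay=timedelta(hours=1)):
    """Emit one training example at each second consecutive defeat."""
    examples = []
    loss_duration = 0      # running total of time spent in lost games
    losses_since_win = 0   # resets whenever the player wins
    for ts, won, duration in sorted(plays, key=lambda play: play[0]):
        if won:
            losses_since_win = 0
            continue
        loss_duration += duration
        losses_since_win += 1
        if losses_since_win == 2:
            # Features are observed at prediction time...
            purchase_count = count_purchases(purchases, ts)
            # ...and the label is computed one hour later.
            target = count_purchases(purchases, ts + label_delay) > purchase_count
            examples.append({
                "loss_duration": loss_duration,
                "purchase_count": purchase_count,
                "target": target,
            })
    return examples


examples = build_examples(game_plays, purchase_times)
print(examples)
```

For this toy data, the only example is produced at the 10:10 defeat. The features reflect what was known at that moment, and the target is true because a purchase arrives within the following hour.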

    from sklearn.linear_model import LogisticRegression
    from sklearn import preprocessing

    # Use the query results as model inputs (X) and labels (y)
    X = training.dataframe[['loss_duration']]
    y = training.dataframe['target']

    # Standardize the feature before fitting
    scaler = preprocessing.StandardScaler().fit(X)
    X_scaled = scaler.transform(X)

    # Fit a simple classifier on the scaled feature
    model = LogisticRegression(max_iter=1000)
    model.fit(X_scaled, y)

Kaskada’s query language makes it easy to write an end-to-end transformation from raw events to a training dataset.

Conclusion

Docker and Kaskada enable data scientists and ML engineers to solve real-time ML problems quickly and reproducibly. With Docker, you can manage your development environment with ease, ensuring that your code runs the same way on every machine. With Kaskada, you can collaborate with colleagues on feature engineering and reuse queries as code across projects. Whether you’re working independently or as part of a team, these tools can help you get answers faster and more efficiently than ever before.

Get started with Kaskada’s official images on Docker Hub.