There are over one million Dockerfiles on GitHub today, but not all Dockerfiles are created equal. Efficiency is critical, and this blog series will cover five areas for Dockerfile best practices to help you write better Dockerfiles: incremental build time, image size, maintainability, security and repeatability. If you’re just beginning with Docker, this first blog post is for you! The next posts in the series will be more advanced.

Important note: the tips below follow the journey of ever-improving Dockerfiles for an example Java project based on Maven. The last Dockerfile is thus the recommended Dockerfile, while all intermediate ones are there only to illustrate specific best practices.

Incremental build time

In a development cycle, when building a Docker image, making code changes, then rebuilding, it is important to leverage caching. Caching avoids re-running build steps that don’t need to run again.

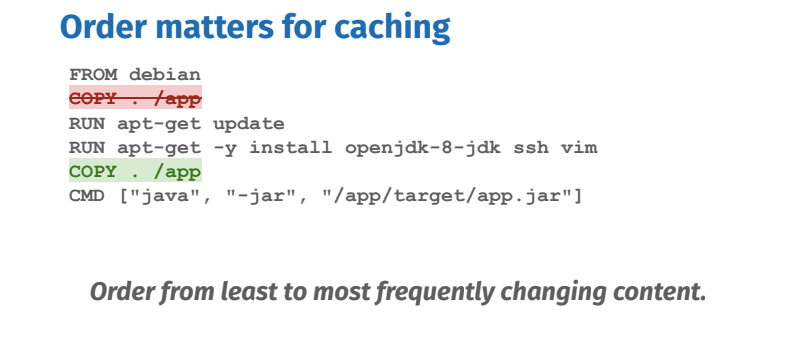

Tip #1: Order matters for caching

The order of the build steps (Dockerfile instructions) matters, however: when a step’s cache is invalidated by changed files or a modified line in the Dockerfile, the cache of every subsequent step is invalidated as well. Order your steps from least to most frequently changing to optimize caching.
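As a rough sketch of this ordering, assume a Debian base and a Maven project whose jar has already been built under `target/`. The `default-jdk` package, the `/app` directory, and the jar path are illustrative assumptions, not details from the original example.

```dockerfile
# Rarely changes: the base image.
FROM debian
# Changes occasionally: system packages.
RUN apt-get update && apt-get -y install default-jdk ssh vim
# Changes on almost every build: application files, so they come last.
COPY . /app
CMD ["java", "-jar", "/app/target/app.jar"]
```

With this order, editing application files invalidates only the COPY layer, and the package installation layer is reused from cache.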

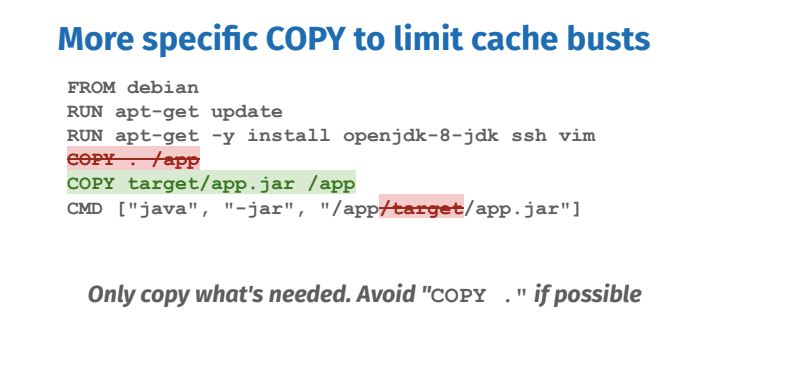

Tip #2: More specific COPY to limit cache busts

Only copy what’s needed. If possible, avoid “COPY .” When copying files into your image, make sure you are very specific about what you want to copy. Any changes to the files being copied will break the cache. In the example above, only the pre-built jar application is needed inside the image, so only copy that. That way unrelated file changes will not affect the cache.
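A minimal sketch of a narrower COPY, assuming the jar is built on the host as `target/app.jar` (a hypothetical path) and that a headless JRE from the Debian repositories is enough to run it:

```dockerfile
FROM debian
RUN apt-get update && apt-get -y install default-jre-headless
# Copy only the pre-built jar instead of the whole build context ("COPY . /app").
# The trailing slash makes /app/ a directory, so the file lands at /app/app.jar.
COPY target/app.jar /app/
CMD ["java", "-jar", "/app/app.jar"]
```

Changes to source files, the README, or anything else outside `target/app.jar` no longer invalidate the COPY layer.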

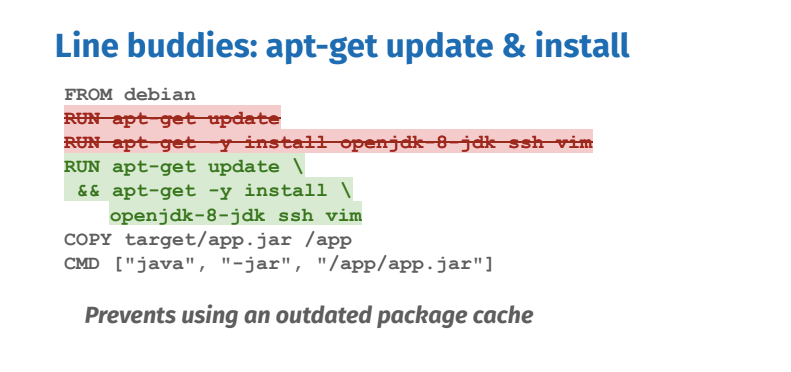

Tip #3: Identify cacheable units such as apt-get update & install

Each RUN instruction can be seen as a cacheable unit of execution. Too many of them can be unnecessary, while chaining all commands into one RUN instruction can bust the cache easily, hurting the development cycle. When installing packages from package managers, you always want to update the index and install packages in the same RUN: together they form one cacheable unit. Otherwise a cached index layer may be reused when the package list changes, and you risk installing outdated packages.
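One way this cacheable unit might look, as a sketch: the package names and jar path below are assumptions chosen for illustration.

```dockerfile
FROM debian
# Index update and install share one RUN instruction. Editing the package
# list changes this line, so both commands re-run together and a stale
# cached index is never paired with a new install.
RUN apt-get update \
    && apt-get -y install default-jre-headless ssh vim
COPY target/app.jar /app/
CMD ["java", "-jar", "/app/app.jar"]
```

Had `apt-get update` been its own RUN instruction, adding a package to the install line would reuse the old cached index layer.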

Reduce image size

Image size matters because smaller images mean faster deployments and a smaller attack surface.

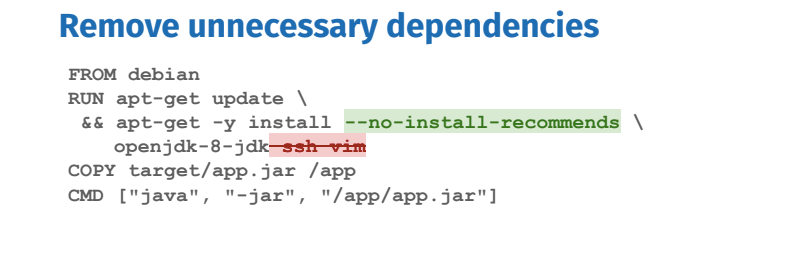

Tip #4: Remove unnecessary dependencies

Remove unnecessary dependencies and do not install debugging tools. If needed, debugging tools can always be installed later. Certain package managers, such as apt, automatically install packages that are recommended by the user-specified package, unnecessarily increasing the footprint. Apt has the --no-install-recommends flag, which ensures that recommended packages that are not strictly required are not installed. If they are needed, add them explicitly.
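A sketch of the leaner install, again assuming a Debian base, the `default-jre-headless` package, and a hypothetical `target/app.jar`:

```dockerfile
FROM debian
# No ssh or vim: debugging tools are left out of the image.
# --no-install-recommends skips packages that are only recommended,
# not required, by the packages listed here.
RUN apt-get update \
    && apt-get -y install --no-install-recommends default-jre-headless
COPY target/app.jar /app/
CMD ["java", "-jar", "/app/app.jar"]
```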

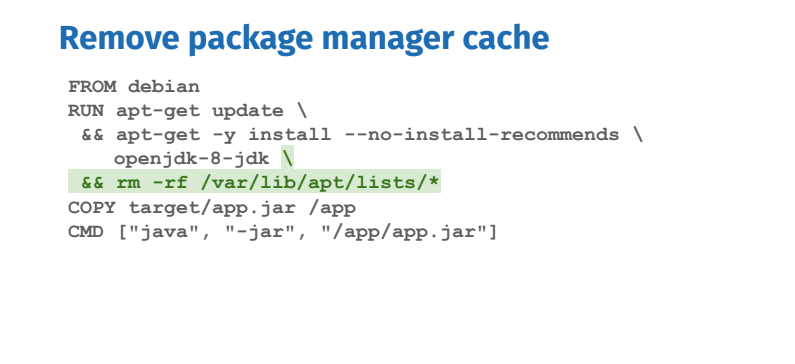

Tip #5: Remove package manager cache

Package managers maintain their own cache which may end up in the image. One way to deal with it is to remove the cache in the same RUN instruction that installed packages. Removing it in another RUN instruction would not reduce the image size.
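For apt, the downloaded package index lives under `/var/lib/apt/lists`. A sketch of cleaning it in the same layer (the package and jar names remain illustrative assumptions):

```dockerfile
FROM debian
# The cleanup runs in the same RUN instruction as the install, so the
# package index is never committed to a layer in the first place.
RUN apt-get update \
    && apt-get -y install --no-install-recommends default-jre-headless \
    && rm -rf /var/lib/apt/lists/*
COPY target/app.jar /app/
CMD ["java", "-jar", "/app/app.jar"]
```

A separate `RUN rm -rf /var/lib/apt/lists/*` would only hide the files in a new layer; the earlier layer that contains them would still be part of the image.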

There are further ways to reduce image size such as multi-stage builds which will be covered at the end of this blog post. The next set of best practices will look at how we can optimize for maintainability, security, and repeatability of the Dockerfile.

Maintainability

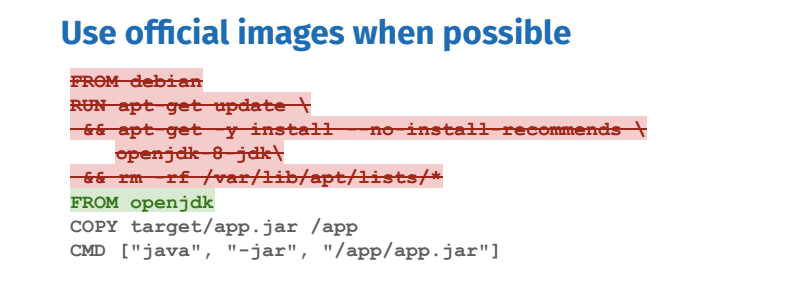

Tip #6: Use official images when possible

Official images can save a lot of time spent on maintenance because all the installation steps are done and best practices are applied. If you have multiple projects, they can share those layers because they use exactly the same base image.

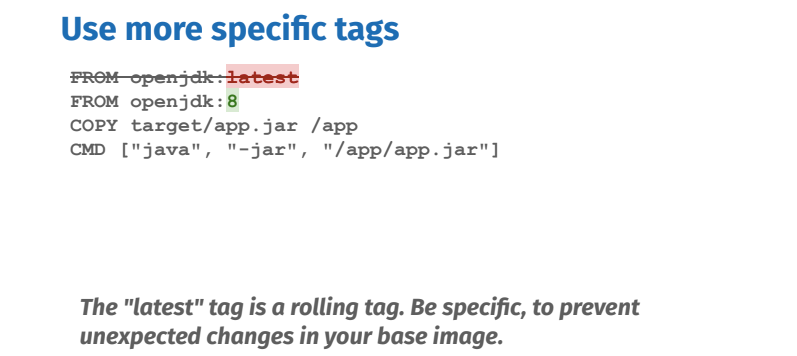

Tip #7: Use more specific tags

Do not use the latest tag. It has the convenience of always being available for official images on Docker Hub but there can be breaking changes over time. Depending on how far apart in time you rebuild the Dockerfile without cache, you may have failing builds.

Instead, use more specific tags for your base images. In this case, we’re using openjdk. There are a lot more tags available so check out the Docker Hub documentation for that image which lists all the existing variants.
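As a sketch, pinning the base image to a major version could look like the following. The tag `8` and the jar path are examples only; check Docker Hub for the tags that are currently published.

```dockerfile
# A version-specific tag instead of the implicit "latest".
FROM openjdk:8
COPY target/app.jar /app/
CMD ["java", "-jar", "/app/app.jar"]
```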

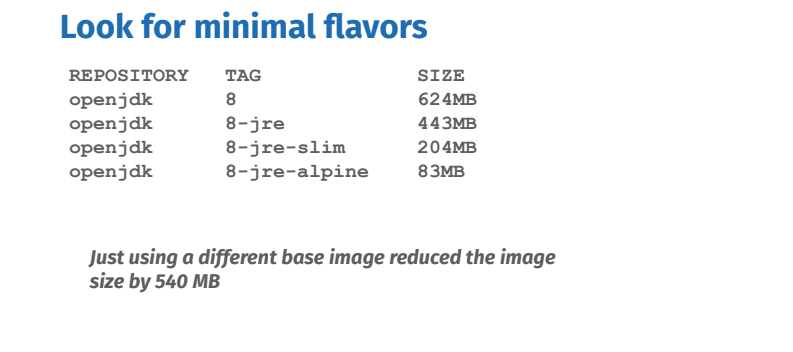

Tip #8: Look for minimal flavors

Some of those tags have minimal flavors, which means they are even smaller images. The slim variant is based on a stripped-down Debian, while the alpine variant is based on the even smaller Alpine Linux distribution image. A notable difference is that Debian still uses GNU libc while Alpine uses musl libc which, although much smaller, may in some cases cause compatibility issues. In the case of openjdk, the jre flavor only contains the Java runtime, not the JDK; this also drastically reduces the image size.
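A sketch combining both ideas, a runtime-only flavor on an Alpine base. The tag shown is an assumption for illustration and should be verified against the tags listed on Docker Hub.

```dockerfile
# "jre" drops the compiler and other JDK tooling; "alpine" swaps the
# Debian base for Alpine Linux (musl libc).
FROM openjdk:8-jre-alpine
COPY target/app.jar /app/
CMD ["java", "-jar", "/app/app.jar"]
```

If the application loads native libraries built against GNU libc, the slim variant may be the safer choice.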

Reproducibility

So far the Dockerfiles above have assumed that your jar artifact was built on the host. This is not ideal because you lose the benefits of the consistent environment provided by containers. For instance, if your Java application depends on specific libraries, it may introduce unwelcome inconsistencies depending on which computer the application is built on.

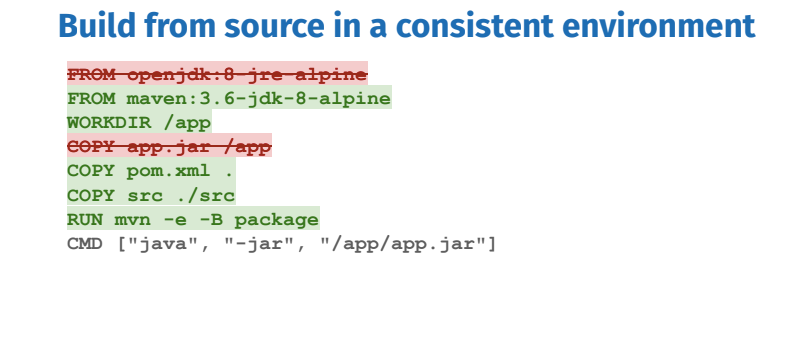

Tip #9: Build from source in a consistent environment

The source code is the source of truth from which you want to build a Docker image. The Dockerfile is simply the blueprint.

You should start by identifying all that’s needed to build your application. Our simple Java application requires Maven and the JDK, so let’s base our Dockerfile on a specific, minimal official Maven image from Docker Hub that includes the JDK. If you needed to install more dependencies, you could do so in a RUN step.

The pom.xml file and the src folder are copied in, as they are needed for the final RUN step that produces the app.jar application with mvn package. (The -e flag shows errors and -B runs Maven in non-interactive, aka “batch”, mode.)
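A sketch of such a build-from-source Dockerfile follows. The image tag, the `/app` working directory, and the output path `target/app.jar` (which presumes the pom.xml names the artifact `app`) are assumptions made for illustration.

```dockerfile
# Official Maven image that already bundles a JDK.
FROM maven:3.6-jdk-8-alpine
WORKDIR /app
# Build inputs: the project descriptor and the sources.
COPY pom.xml .
COPY src ./src
# -e shows errors, -B runs in batch (non-interactive) mode.
RUN mvn -e -B package
# Maven writes build output to target/ by default.
CMD ["java", "-jar", "/app/target/app.jar"]
```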

We solved the inconsistent environment problem, but introduced another one: every time the code is changed, all the dependencies described in pom.xml are fetched. Hence the next tip.

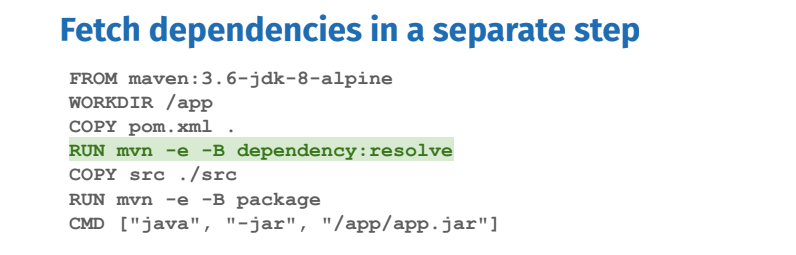

Tip #10: Fetch dependencies in a separate step

By again thinking in terms of cacheable units of execution, we can decide that fetching dependencies is a separate cacheable unit that only needs to depend on changes to pom.xml and not the source code. The RUN step between the two COPY steps tells Maven to only fetch the dependencies.
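A sketch of that split, using the `dependency:resolve` goal of the Maven dependency plugin. The image tag and paths are illustrative assumptions.

```dockerfile
FROM maven:3.6-jdk-8-alpine
WORKDIR /app
# Cacheable unit 1: depends only on pom.xml.
COPY pom.xml .
RUN mvn -e -B dependency:resolve
# Cacheable unit 2: depends on the source code.
COPY src ./src
RUN mvn -e -B package
CMD ["java", "-jar", "/app/target/app.jar"]
```

A change under `src` now invalidates only the last COPY and RUN; the layer holding the resolved dependencies is reused as long as pom.xml is unchanged.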

There is one more problem that got introduced by building in consistent environments: our image is way bigger than before because it includes all the build-time dependencies that are not needed at runtime.

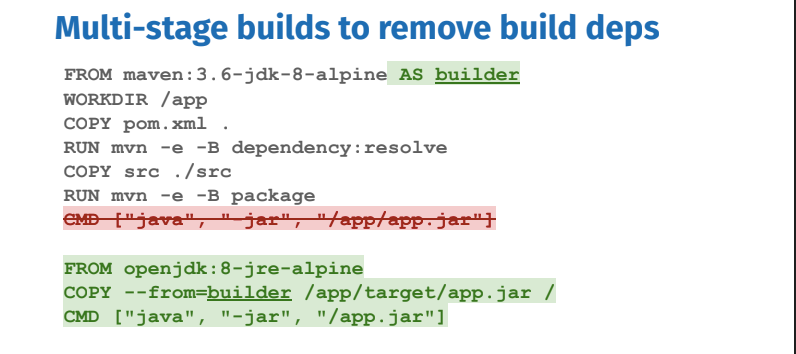

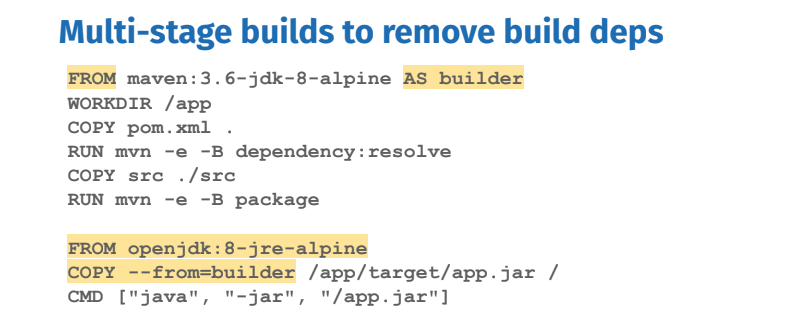

Tip #11: Use multi-stage builds to remove build dependencies (recommended Dockerfile)

Multi-stage builds are recognizable by the multiple FROM statements. Each FROM starts a new stage. They can be named with the AS keyword which we use to name our first stage “builder” to be referenced later. It will include all our build dependencies in a consistent environment.

The second stage is our final stage, which will result in the final image. It will include only what is strictly necessary for the runtime, in this case a minimal JRE (Java Runtime) based on Alpine. The intermediary builder stage will be cached but not present in the final image. In order to get build artifacts into our final image, use COPY --from=STAGE_NAME. In this case, STAGE_NAME is builder.
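Put together, a two-stage Dockerfile along these lines might look like the sketch below. The image tags and the `target/app.jar` path are assumptions; adjust them to the tags available on Docker Hub and to the artifact name your pom.xml produces.

```dockerfile
# Stage 1: build environment with Maven and the JDK.
FROM maven:3.6-jdk-8-alpine AS builder
WORKDIR /app
COPY pom.xml .
RUN mvn -e -B dependency:resolve
COPY src ./src
RUN mvn -e -B package

# Stage 2: runtime image containing only a JRE and the built jar.
FROM openjdk:8-jre-alpine
COPY --from=builder /app/target/app.jar /
CMD ["java", "-jar", "/app.jar"]
```

Only the last stage ends up in the tagged image; Maven, the JDK, and the downloaded dependencies stay behind in the builder stage.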

Multi-stage builds are the go-to solution to remove build-time dependencies.

We went from building bloated images inconsistently to building minimal images in a consistent environment while being cache-friendly. In the next blog post, we will dive more into other uses of multi-stage builds.

Additional Resources: