Guest post written by BuildKit maintainer Erik Sipsma.

The Big Picture

- MergeOp and DiffOp are two new features released in BuildKit v0.10. These operations let you assemble container images by composing filesystems (MergeOp) and splitting them apart (DiffOp), all while minimizing the creation of duplicated data both locally on disk and in remote registries.

- Minimizing data duplication enhances cache reusability, which can offer a significant performance boost to many workflows. One early adopter, Netflix, reported that highly complex builds — which previously took over an hour — now take only three minutes after updating their internal tooling to use MergeOp instead of copies.

- Additionally, these new operations make the workflows you’d find in a package manager, where software dependencies are structured in a complex DAG, much more performant and easily expressible as BuildKit builds.

- MergeOp and DiffOp, much like other BuildKit features, are low-level primitives meant for higher-level tooling and interfaces. Though they’re quite new, support for them already exists in:

  - The new `COPY --link` flag supported by Dockerfile, as described in this previous blog post, which uses MergeOp under the hood

  - HLB and Dagger, which both now support Merge+Diff operations

This post showcases how MergeOp and DiffOp help encode knowledge of software dependency relationships, which enables BuildKit to maximize cache reusability and unlocks some interesting new use cases.

BuildKit Crash Course

Before diving into new features, let’s take a quick crash course on BuildKit and LLB.

Filesystem DAG Solver

BuildKit is fundamentally a Directed Acyclic Graph (DAG) solver where each vertex in the DAG represents an operation performed on a set of one or more filesystems. Each vertex operation can accept as input the filesystems of other vertices, and then output one or more filesystems of its own. These inputs and outputs create the edges of the DAG.

When solving a DAG, BuildKit uses caching, parallelization, and other optimizations to calculate the filesystems output by the “target” vertex of the solve as efficiently as possible. You can optionally export your target vertex’s result in different ways, such as a container image pushed to a registry or a locally created directory on the client’s machine.
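To make the idea of a cached DAG solve concrete, here is a minimal sketch in Python. It is a toy model, not BuildKit’s solver: filesystems are plain dicts, the vertex names and the `solve` function are made up for illustration, and results are cached by vertex name rather than by a real cache key.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical toy model: vertex name -> (input vertex names, operation).
# Filesystems are modeled as plain dicts of path -> content.
Graph = Dict[str, Tuple[List[str], Callable[..., dict]]]


def solve(graph: Graph, target: str, cache: Dict[str, dict], ran: List[str]) -> dict:
    """Compute the filesystem of `target`, reusing cached results."""
    if target in cache:
        return cache[target]
    inputs, op = graph[target]
    # Solve every input first; these dependencies are the edges of the DAG.
    results = [solve(graph, name, cache, ran) for name in inputs]
    ran.append(target)  # record that this vertex actually executed
    cache[target] = op(*results)
    return cache[target]


graph: Graph = {
    "alpine": ([], lambda: {"/bin/sh": "busybox"}),
    "neovim": (["alpine"], lambda fs: {**fs, "/usr/bin/nvim": "nvim"}),
}

cache: Dict[str, dict] = {}
first_run: List[str] = []
image = solve(graph, "neovim", cache, first_run)

# Solving again with the same cache executes nothing.
second_run: List[str] = []
solve(graph, "neovim", cache, second_run)
```

After the first solve, `first_run` lists both vertices in dependency order; the second solve leaves `second_run` empty because every result comes from the cache.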

LLB DAGs

DAGs are provided to BuildKit in the form of LLB, which is a low-level language designed as something that higher-level build specifications can be “compiled” to. This mirrors how many different programming languages are compilable to a single target like assembly language. A few examples of tools and languages that compile to LLB are Dockerfile, HLB, Earthfile, and Dagger (which converts Cue to LLB).

Crucially, this lets BuildKit focus on generic features or optimizations like caching and exporting. Higher-level tools can leverage these mechanisms instead of reinventing the wheel.

Example: Dockerfile to LLB DAG

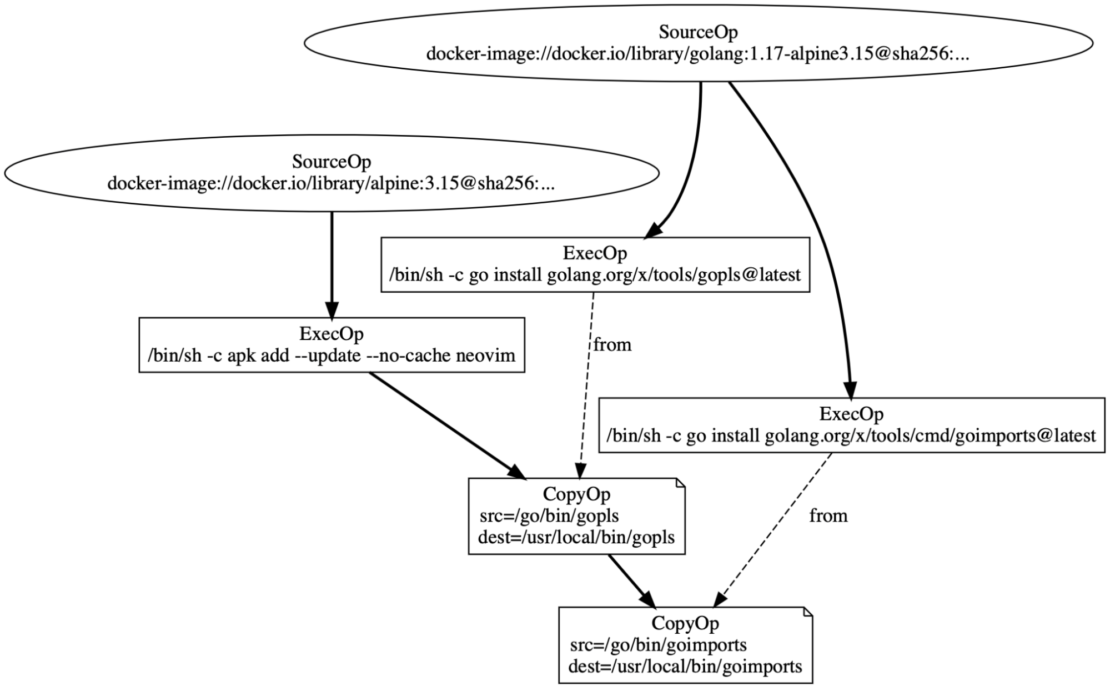

First, consider the following Dockerfile:

    FROM golang:1.17-alpine3.15 as gopls
    RUN go install golang.org/x/tools/gopls@latest

    FROM golang:1.17-alpine3.15 as goimports
    RUN go install golang.org/x/tools/cmd/goimports@latest

    FROM alpine:3.15
    RUN apk add --update --no-cache neovim
    COPY --from=gopls /go/bin/gopls /usr/local/bin/gopls
    COPY --from=goimports /go/bin/goimports /usr/local/bin/goimports

We can take this and easily compile it into an LLB DAG:

Here, you can see a few different types of vertices, with each type representing a different kind of operation to create or modify a filesystem:

- SourceOp vertices import a new filesystem from external sources like container images, Git repositories, or others. They have no inputs and usually form the “roots” of an LLB graph.

- ExecOp vertices run a command atop an input filesystem and output the filesystem plus any changes made. They may also accept multiple input filesystems mounted at different locations, also letting them output each modified filesystem separately.

- CopyOp vertices output a filesystem where specified sets of files and directories — from one input vertex (the source) — have been copied atop another input vertex (the destination). Technically, copying is a subtype of general FileOp vertices. It supports a number of different basic file operations, but we refer to it as CopyOp here for simplicity.
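For readers who prefer text to diagrams, the Dockerfile above can be written out as a graph of these three vertex types. This is only an illustrative data model in Python; the `Vertex` class and its fields are hypothetical and do not correspond to BuildKit’s actual LLB types.

```python
from dataclasses import dataclass
from typing import Optional, Set, Tuple


@dataclass(frozen=True)
class Vertex:
    kind: str                           # "source", "exec", or "copy"
    detail: str                         # image reference, command, or copied path
    inputs: Tuple["Vertex", ...] = ()   # for "copy": (source, destination)


golang = Vertex("source", "golang:1.17-alpine3.15")
alpine = Vertex("source", "alpine:3.15")

# Both Go stages start from the same image, so they share one SourceOp.
gopls = Vertex("exec", "go install golang.org/x/tools/gopls@latest", (golang,))
goimports = Vertex("exec", "go install golang.org/x/tools/cmd/goimports@latest", (golang,))
neovim = Vertex("exec", "apk add --update --no-cache neovim", (alpine,))

copy_gopls = Vertex("copy", "/usr/local/bin/gopls", (gopls, neovim))
target = Vertex("copy", "/usr/local/bin/goimports", (goimports, copy_gopls))


def reachable(vertex: Vertex, seen: Optional[Set[Vertex]] = None) -> Set[Vertex]:
    """Collect every vertex the given vertex depends on, including itself."""
    seen = set() if seen is None else seen
    if vertex not in seen:
        seen.add(vertex)
        for parent in vertex.inputs:
            reachable(parent, seen)
    return seen


vertices = reachable(target)
roots = sorted(v.detail for v in vertices if not v.inputs)
```

Walking back from the final CopyOp reaches seven vertices, and the only ones without inputs are the two SourceOps.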

Key Takeaways

Here’s what we’ve learned so far:

- BuildKit solves DAGs of filesystem operations

- BuildKit supports exporting the results of those solves in a variety of formats, including container images and more

- Through LLB, BuildKit can be a foundation for a wide variety of higher-level build-specification languages

The Problem: Linear Copy Chains

While a number of build systems and languages have been compiled to LLB with great success, one class of systems was — until Merge+Diff — difficult to support as efficiently as needed.

Surprisingly, this class of systems makes heavy use of DAGs to represent software dependencies. This includes users who are building software and libraries with many overlapping, transitive dependencies. Additional examples include package managers used by Linux distros and programming languages.

Superficially, it’s odd that BuildKit had any trouble modeling software-dependency DAGs since it uses DAGs to represent builds. Let’s explore an example to see what those issues were.

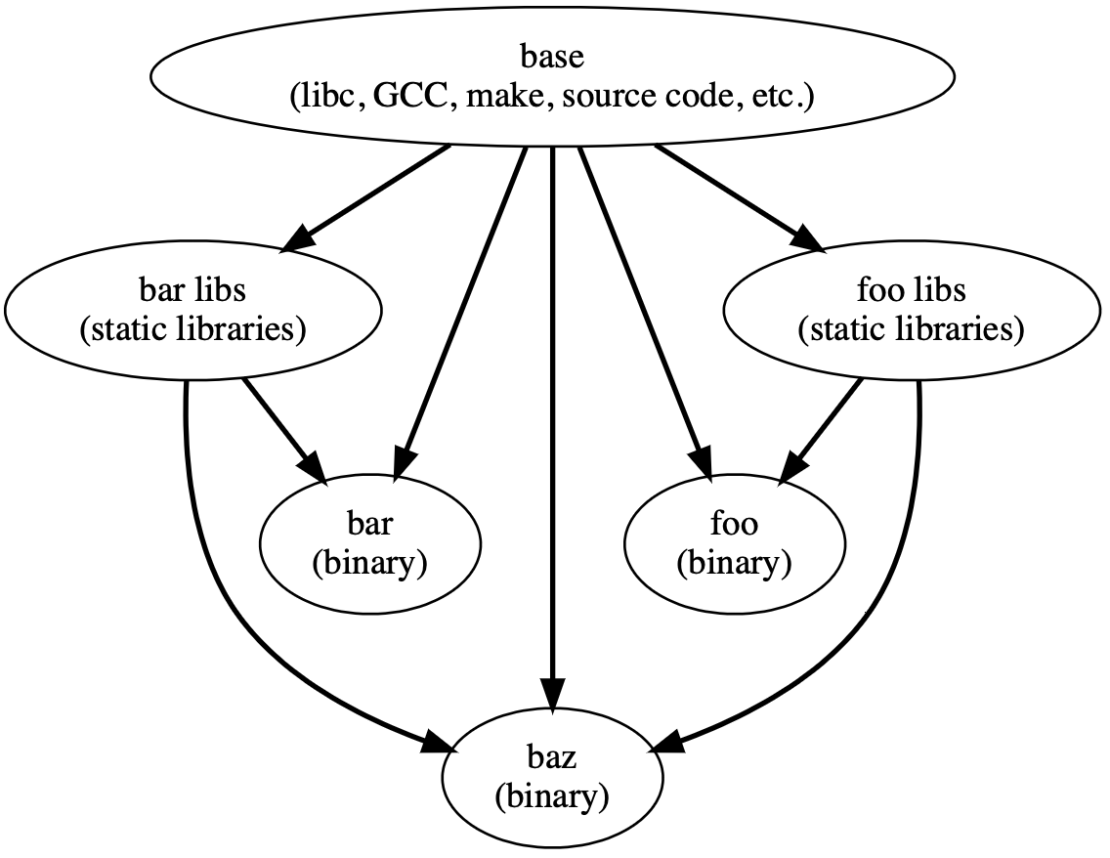

The example revolves around building software from source with the following dependency DAG:

Note that this DAG is not LLB yet. This dependency DAG just shows what you need to build each vertex’s software, where each edge is a build-time dependency. For example, the bar binary only needs base and the bar libs to build, but the baz binary needs base, bar, and foo libs.

We want to convert this into an LLB DAG that builds the foo, bar, and baz binaries — and then exports that build as a container image. We want our container image to only contain those binaries — not build time-only dependencies like the static library files.

We’re taking a few shortcuts for simplicity’s sake. Accordingly, we’re bundling multiple dependencies like libc, GCC, and source code inside base while combining groups of static libraries into single vertices. In reality, you can divide those dependencies between separate vertices at the expense of extra complexity.

Building DAGs with Copy Chains

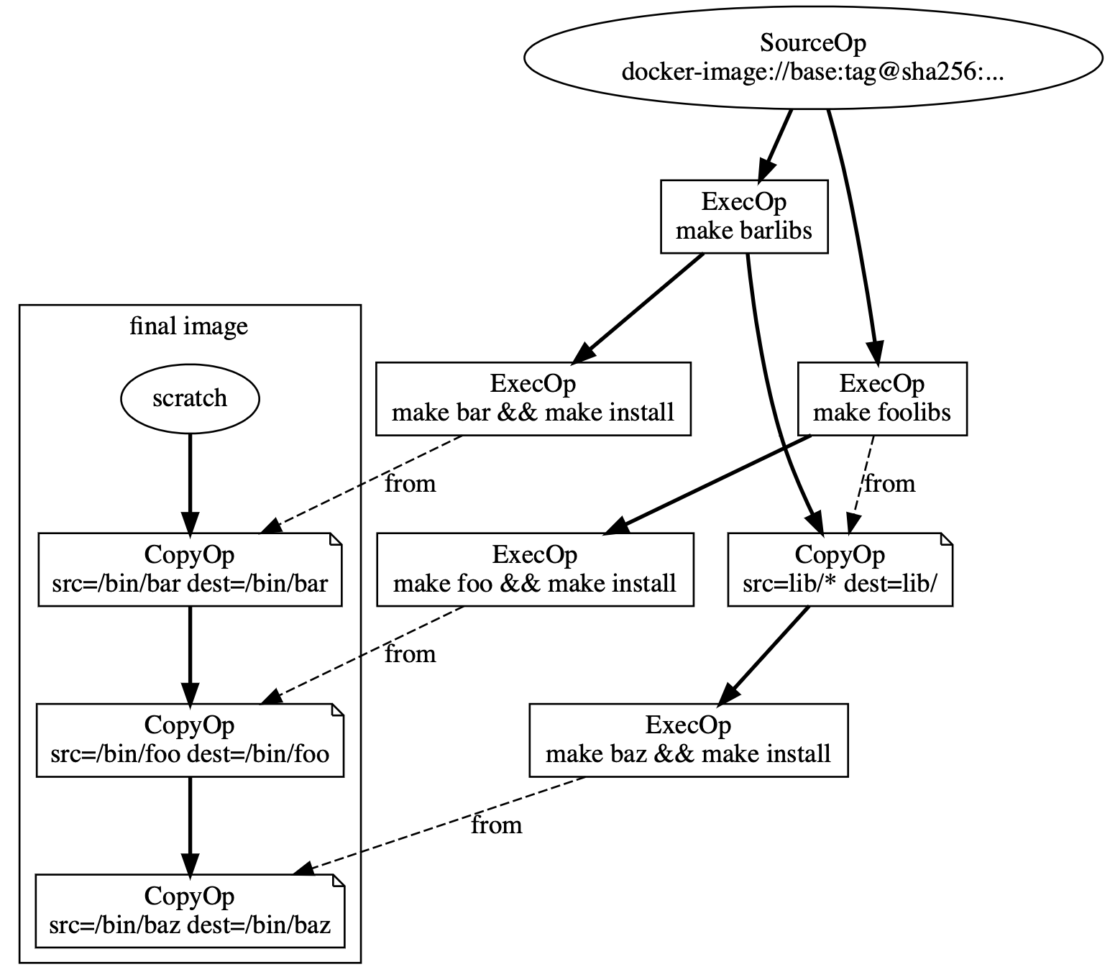

We’ll start by converting each target vertex (foo, bar, and baz) into LLB individually before combining them all together. Thankfully, foo and bar have simple dependency structures:

The final CopyOp in each graph isolates /bin/foo and /bin/bar onto scratch, which is just an empty filesystem. Conversely, baz is a bit more involved:

Here, we split the foo and bar libs builds into separate steps, since they share no dependencies. This lets BuildKit parallelize the build steps for each and cache the results separately.

The results of those ExecOps are then combined into a single build dependency closure via CopyOp, which creates a filesystem where the lib/ directory contains both foo and bar libs.

Finally, let’s combine everything into one LLB graph:

Now, say you submit your LLB to BuildKit for a solve and want to export the result as a registry container image. The final chain of CopyOps becomes the layers of the exported image. This is pushed to the registry alongside an image manifest.

If you run the solve again with the same BuildKit instance, you’ll find that — thanks to BuildKit’s caching features — no actual work is required:

- During the solve and before running each vertex, BuildKit checks to see if any vertex inputs have recently changed. If not, BuildKit uses cached results and avoids a rerun.

- When exporting the result as a container image, BuildKit only needs to push those layers to the registry that aren’t already present. Since the registry already contains layers from the previous build, no pushes are needed.
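The export side of this can be sketched in a few lines of Python. This is a simplified model that assumes the registry is just a dict keyed by content digest; the `push` function is hypothetical and is not how BuildKit talks to a registry.

```python
import hashlib
from typing import Dict, List


def digest(blob: bytes) -> str:
    """Content-addressed name of a layer blob."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def push(layers: List[bytes], registry: Dict[str, bytes]) -> List[str]:
    """Upload only the layers the registry lacks; return the pushed digests."""
    pushed = []
    for blob in layers:
        name = digest(blob)
        if name not in registry:
            registry[name] = blob
            pushed.append(name)
    return pushed


registry: Dict[str, bytes] = {}
layers = [b"layer with /bin/bar", b"layer with /bin/foo", b"layer with /bin/baz"]

first_push = push(layers, registry)    # uploads all three layers
second_push = push(layers, registry)   # identical rebuild: nothing to upload
```

Because layers are identified by their content, an unchanged rebuild produces the same digests and the second push has nothing left to do.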

Cascading Cache Invalidation

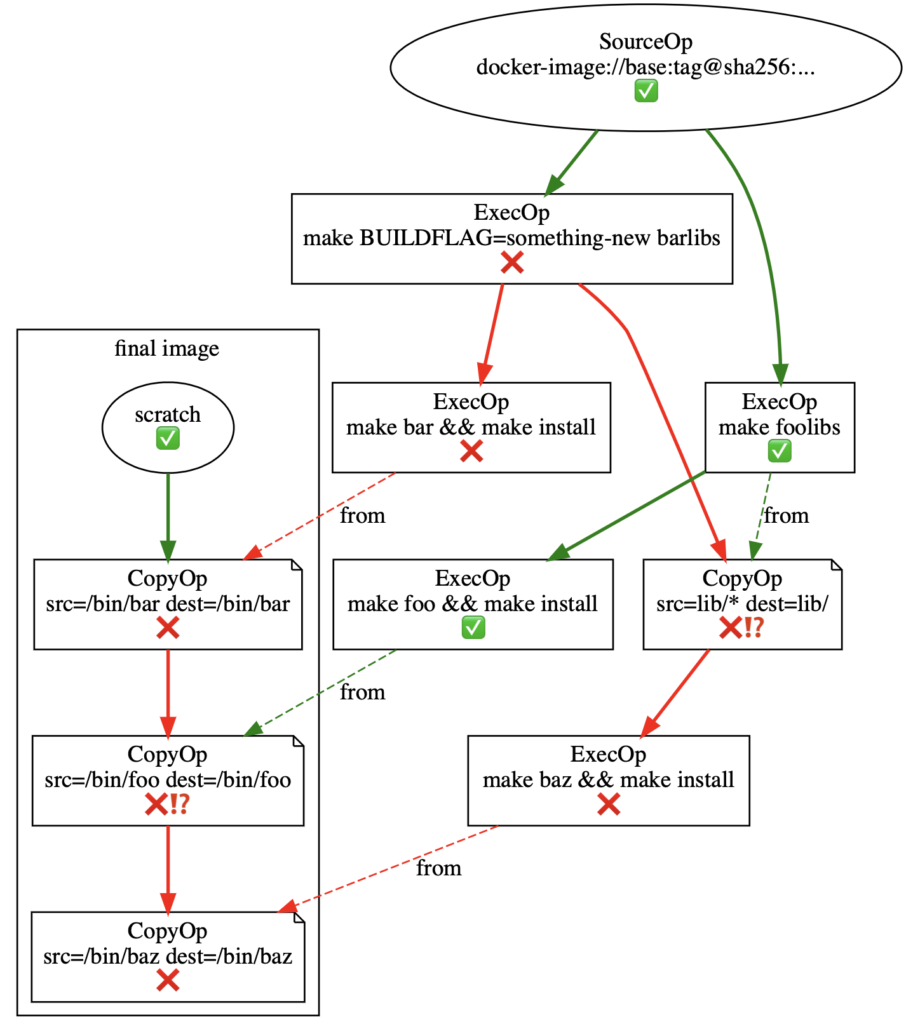

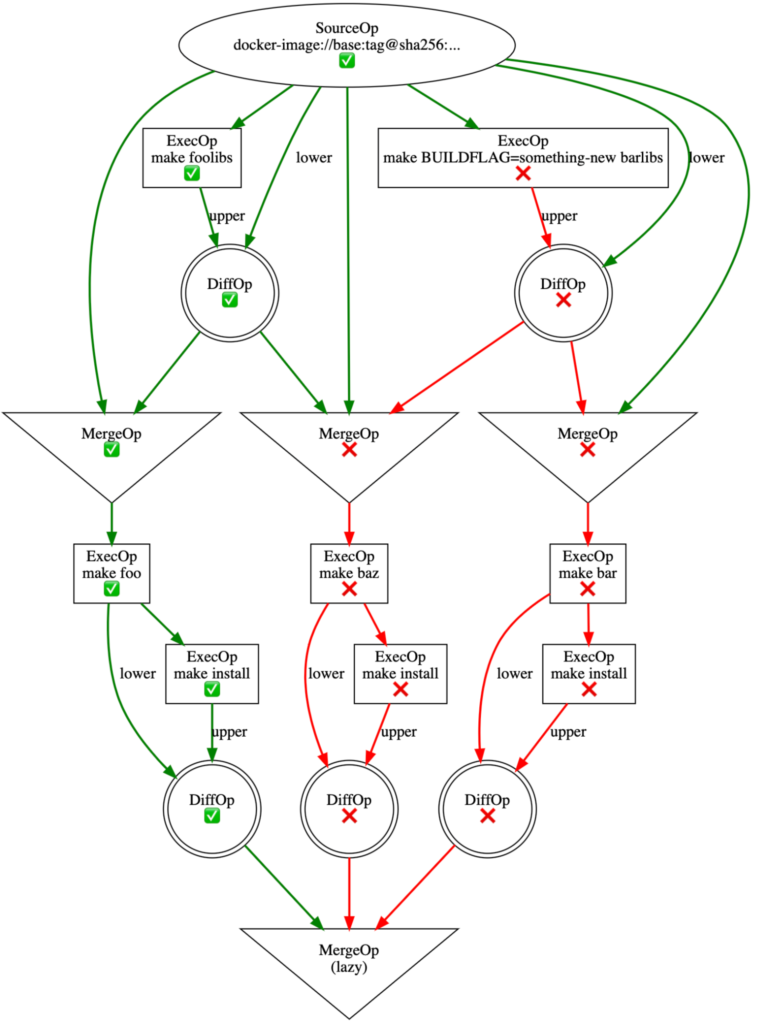

This is all good news, but there are problems. Where do those come from? Let’s consider what happens if you were to rerun the build again — but with a change to the bar libs’ builds. In this case, we’ll assume that you’re building with a new flag.

Again, BuildKit checks to see if any LLB vertex dependencies have changed before grabbing the cached result. Changes invalidate the cache and force a rerun, which cascades to any descendant vertices:

Here, we mark any cache-invalidated vertices with an ❌. Some invalidations here are perfectly logical:

- The ExecOp building the `bar` libs can’t be cached because the definition changed, adding `BUILDFLAG=something-new` to the vertex definition.

- The ExecOp which builds `bar` must be invalid since its dependency libs are invalid.

Meanwhile, we’ve marked unexpected invalidations in the LLB with ❌⁉️:

- The copy of the `foo` libs needed to create the dependency closure for the build of `/bin/baz` is invalidated. This is because that copy’s destination is atop the build for the `bar` libs, which creates a cache dependency. This happens even though our `foo` and `bar` libs don’t actually share any dependencies. The CopyOp must re-run, yet it ultimately creates the same layer as before.

- The copy of `/bin/foo` into the final image layer is also invalid for similar reasons. The final image is constructed as a linear chain of CopyOps, even though no components share dependencies. Since `/bin/foo` comes after `/bin/bar` in the chain, those cascading invalidations will impact it.

We could technically avoid these particular invalidations by switching the copy order. However, that would only move the problem: a change to the foo libs would then invalidate the copies of bar. These unnecessary invalidations have costs:

- BuildKit must perform the copies locally again, which eats up time and disk space.

- When exporting LLB results as container layers, BuildKit must again determine the contents of each layer and recompress them into tarballs. This happens even though the layers didn’t need to change and usually end up with the exact same contents as before.

- BuildKit has an optimization that prevents remote registry layers from being pulled locally unless strictly necessary. This minimizes data downloads. It works with image layers referenced by a SourceOp, plus layers referenced via BuildKit’s remote cache import feature. However, its usefulness is hampered when unneeded layers are caught in a cascading cache invalidation. This process can forcefully pull them down as the destination of a CopyOp.

The above issues impact many use cases, yet are often inconsequential since they don’t create a performance bottleneck. Copying small quantities of data isn’t often noticeable. However, our example is fairly basic. Dependency DAGs can get much more complicated than this, and involve many more files larger in both size and quantity. This is where cache invalidation becomes a true bottleneck.
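The cascade is easy to reproduce with a toy model of cache keys. The sketch below assumes, as a simplification, that a vertex’s cache key is just a hash of its definition plus the keys of its inputs; BuildKit’s real cache-key computation is more involved, and the `key` helper and vertex descriptions are invented for illustration.

```python
import hashlib
from typing import Dict


def key(definition: str, *input_keys: str) -> str:
    """Toy cache key: hash of the vertex definition plus its inputs' keys."""
    return hashlib.sha256("\0".join((definition, *input_keys)).encode()).hexdigest()


def copy_chain_keys(bar_flags: str) -> Dict[str, str]:
    base = key("source: base")
    foo_libs = key("exec: build foo libs", base)
    bar_libs = key("exec: build bar libs " + bar_flags, base)
    foo = key("exec: build /bin/foo", foo_libs)
    bar = key("exec: build /bin/bar", bar_libs)
    copy_bar = key("copy: /bin/bar onto scratch", bar)
    # The destination of this copy is the previous copy in the chain,
    # so its key depends on copy_bar even though foo never uses bar.
    copy_foo = key("copy: /bin/foo onto previous layer", foo, copy_bar)
    return {
        "foo_libs": foo_libs, "bar_libs": bar_libs,
        "foo": foo, "bar": bar,
        "copy_bar": copy_bar, "copy_foo": copy_foo,
    }


before = copy_chain_keys("")
after = copy_chain_keys("BUILDFLAG=something-new")
invalidated = sorted(name for name in before if before[name] != after[name])
```

Here `invalidated` contains `copy_foo` even though the key for `foo` itself is unchanged, which is exactly the unexpected invalidation described above.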

Key Takeaways

We generally use the following approach to build each vertex of a dependency DAG via LLB:

- Combine dependencies – Topologically sort the build-time dependency graph and create an LLB vertex that outputs a filesystem containing that closure. CopyOps can be used to combine independent filesystems when needed, which you can see while copying foo libs atop bar libs — for baz builds in the example above.

- Execute Build – Run the vertex build as an ExecOp atop the vertex from step one.

- Package Artifacts – If needed, separate the build artifacts created during step two from the files and directories of the dependency closure. This creates a well-defined, isolated package. Isolation is also possible via a CopyOp, which becomes apparent while copying each binary into the final image in our previous example.

We’ll refer back to these steps later!
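The “Combine dependencies” step can be sketched with Python’s standard `graphlib` module (Python 3.9+). The `deps` table mirrors the example dependency DAG from this post; the `closure` and `combine_order` helpers are hypothetical names used only for this illustration.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Build-time dependencies from the example: vertex -> what it needs to build.
deps: Dict[str, Set[str]] = {
    "base": set(),
    "foo libs": {"base"},
    "bar libs": {"base"},
    "foo": {"base", "foo libs"},
    "bar": {"base", "bar libs"},
    "baz": {"base", "foo libs", "bar libs"},
}


def closure(target: str) -> Set[str]:
    """All transitive build-time dependencies of `target`."""
    seen: Set[str] = set()
    stack = list(deps[target])
    while stack:
        name = stack.pop()
        if name not in seen:
            seen.add(name)
            stack.extend(deps[name])
    return seen


def combine_order(target: str) -> List[str]:
    """Order in which to stack the dependency closure, dependencies first."""
    needed = closure(target)
    subgraph = {name: deps[name] & needed for name in needed}
    return list(TopologicalSorter(subgraph).static_order())


bar_order = combine_order("bar")
baz_order = combine_order("baz")
```

For `bar` the order is `base` followed by `bar libs`. For `baz`, `base` comes first and the two independent lib sets follow in either order, which is the information a copy chain throws away.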

The issue with this approach is that too much information is lost in step one when a DAG of software dependencies is linearized into a copy chain. A change to one input invalidates the destination directory for the next input. Each subsequent input then falls victim to that cascading effect. This happens despite the fact that each DAG input should be independent. This creates overly-fragile caches and impairs BuildKit’s ability to maximize filesystem re-use — both during builds and while exporting as container layers.

LLB needs new types of operations that let us compose filesystems while keeping them independent. This is where Merge+Diff comes in handy.

The Solution: Composable Filesystems with Merge+Diff

MergeOp

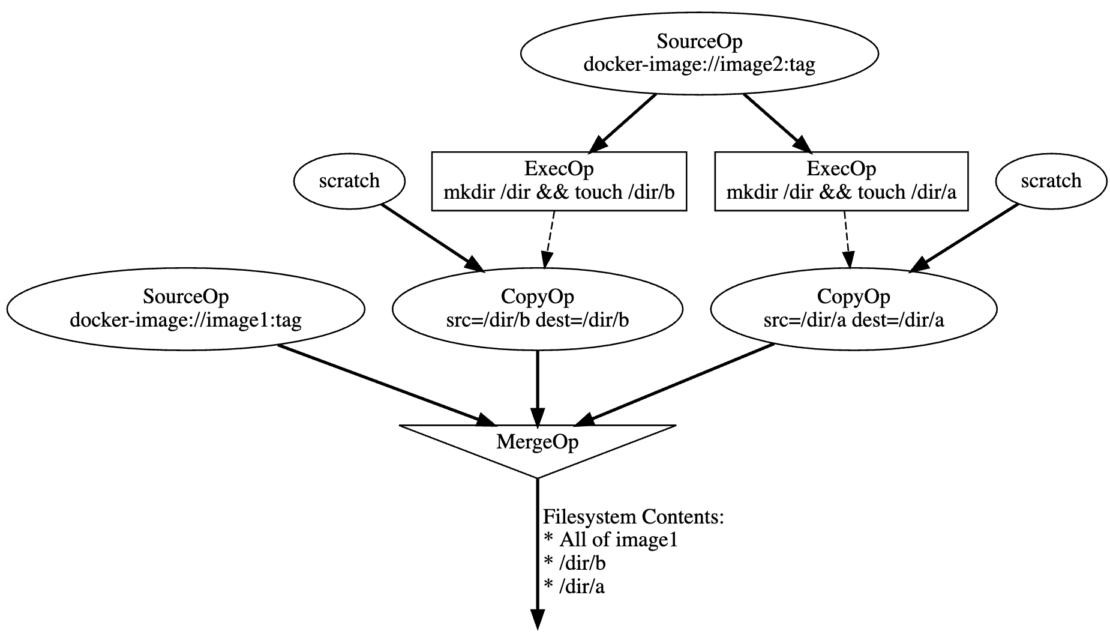

MergeOp is a new type of LLB vertex that lets you efficiently merge filesystems without creating interdependencies. Each vertex input is a filesystem and the output is a filesystem, where each input is applied atop another. Here’s a basic example:

Here, our MergeOp output is annotated with its contents. These are simply the combined contents of each input to the MergeOp. Any overlapping directories have their contents merged. If any two files are located along the same path, the file from the latter-most input takes precedence (the order of the inputs thus matters). This example shows a merger of SourceOp and CopyOp vertices, but MergeOp accepts the result of any LLB vertex.
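The precedence rule can be stated in a few lines of Python. This is a toy model that treats a filesystem as a flat dict of path to content; the `merge` function only illustrates the semantics described above and says nothing about how BuildKit implements MergeOp.

```python
from typing import Dict

Filesystem = Dict[str, str]  # path -> file content


def merge(*inputs: Filesystem) -> Filesystem:
    """Apply each input atop the previous ones; later inputs win on conflicts."""
    merged: Filesystem = {}
    for fs in inputs:
        merged.update(fs)
    return merged


base = {"/etc/os-release": "alpine", "/lib/libc.so": "musl"}
foo_libs = {"/lib/libfoo.a": "foo", "/etc/motd": "hello from foo"}
bar_libs = {"/lib/libbar.a": "bar", "/etc/motd": "hello from bar"}

merged = merge(base, foo_libs, bar_libs)
```

The shared `/lib` directory ends up with the files from all three inputs, while `/etc/motd` holds the content from `bar_libs` because it is the last input.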

When building software dependency DAGs, MergeOp is useful during the “Combine Dependencies” step listed previously. It replaces CopyOp and avoids its common pitfalls in this use case.

MergeOp behaves in a few key ways:

- Input Layer Reuse – When a MergeOp’s result is included in a container image, each input’s layers are conjoined rather than recreated or squashed. This enables maximum re-use of previously-exported layers.

- Laziness – MergeOp is implemented lazily, which means that the on-disk representation of the merged filesystem is only created when strictly necessary. Local creation is necessary when this representation is an ExecOp input. It’s not necessary to create a MergeOp result locally when you directly export it as a container image. The full implications of this feature are explored later under “Container Images as Lazy Package Merges”.

- Hardlink Optimizations – When you must create a MergeOp result locally, the on-disk filesystem will hard link files to create the merged tree rather than copying them. This prevents large files from becoming a bottleneck during merges — especially when using filesystems like ext4 that don’t support reflink optimizations.

Hardlinking is only available when using the two leading snapshotter backends: overlay and native. Currently, other backends will create merged filesystems by copying files, preferring to use the copy_file_range syscall when available. However, equivalent optimizations for other snapshotter types like estargz will likely appear in the future.
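To get a feel for why hard links help, here is a small Python sketch that builds a merged tree by linking files instead of copying them. It is not BuildKit’s snapshotter code: `link_tree` is a hypothetical helper, it ignores details like symlinks, whiteouts, and metadata, and it assumes all directories live on one filesystem that supports hard links.

```python
import os
import tempfile


def link_tree(src: str, dst: str) -> None:
    """Recreate the directory tree of src under dst, hard-linking every file."""
    for root, _dirs, files in os.walk(src):
        rel = os.path.relpath(root, src)
        target_dir = os.path.normpath(os.path.join(dst, rel))
        os.makedirs(target_dir, exist_ok=True)
        for name in files:
            target = os.path.join(target_dir, name)
            if os.path.lexists(target):
                os.remove(target)  # a later input replaces an earlier file
            os.link(os.path.join(root, name), target)


with tempfile.TemporaryDirectory() as tmp:
    foo, bar, out = (os.path.join(tmp, n) for n in ("foo", "bar", "merged"))
    os.makedirs(os.path.join(foo, "lib"))
    os.makedirs(os.path.join(bar, "lib"))
    with open(os.path.join(foo, "lib", "libfoo.a"), "w") as f:
        f.write("foo")
    with open(os.path.join(bar, "lib", "libbar.a"), "w") as f:
        f.write("bar")

    for layer in (foo, bar):  # apply inputs in merge order
        link_tree(layer, out)

    merged_files = sorted(os.listdir(os.path.join(out, "lib")))
    # The merged file is the same inode as the input, so no data was copied.
    same_inode = os.path.samefile(
        os.path.join(foo, "lib", "libfoo.a"),
        os.path.join(out, "lib", "libfoo.a"),
    )
```

The merged directory contains both libraries, yet each one shares its inode with the original file, so the cost of the merge does not grow with file size.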

If you’re wondering why hard links are needed at all for the overlay snapshotter when you could instead combine lowerdirs into an overlay mount, your curiosity is valid. Sadly, this approach doesn’t always work due to opaque directories, as described in corner cases 1 and 2 of this tangentially related GitHub issue. It may be possible to make it work with more effort, but the hard link approach remains easier for now.

DiffOp

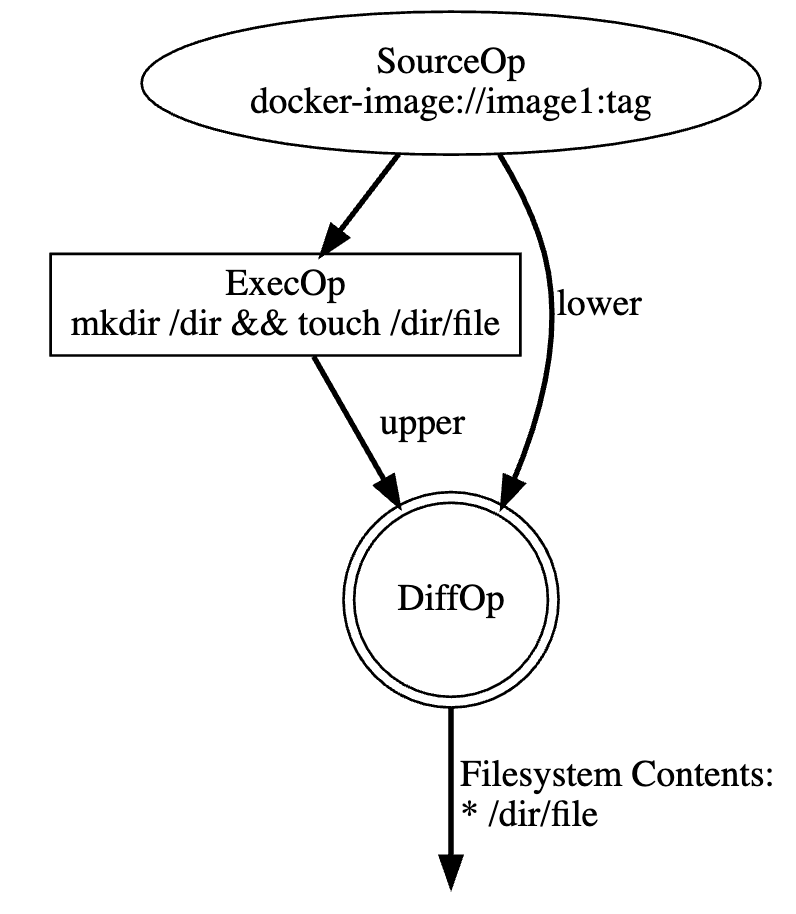

DiffOp is another new type of LLB vertex that lets you efficiently separate a filesystem from its dependency base. It accepts two inputs: a “lower” and an “upper” filesystem. It then outputs the filesystem that separates upper from lower.

You can also view DiffOp as a way to “unmerge” filesystems — something inverse to MergeOp. Here’s a basic example:

You can see that the diff is between the SourceOp base and an ExecOp made atop this base. This essentially detaches the ExecOp from its dependency. The DiffOp’s result only contains any changes made during the ExecOp.
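In the same toy spirit, a diff between two filesystems can be modeled on dicts of path to content. The `diff` function below is only an illustration of the idea; recording deletions as `None` is an assumption of this sketch, not a description of how BuildKit represents them.

```python
from typing import Dict, Optional

Filesystem = Dict[str, str]  # path -> file content


def diff(lower: Filesystem, upper: Filesystem) -> Dict[str, Optional[str]]:
    """Changes needed to turn `lower` into `upper`."""
    # Files that are new or whose content changed in upper.
    changes: Dict[str, Optional[str]] = {
        path: content for path, content in upper.items() if lower.get(path) != content
    }
    # Files removed in upper, marked with None (an assumption of this model).
    changes.update({path: None for path in lower if path not in upper})
    return changes


base = {"/bin/sh": "busybox", "/lib/libc.so": "musl"}
# Pretend an ExecOp ran `make install` atop base and added one binary.
after_exec = {**base, "/bin/foo": "foo-binary"}

package = diff(base, after_exec)
```

The result holds only `/bin/foo`, detached from the base it was built on.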

While building software-dependency DAGs, DiffOp is useful during the “Package Artifacts” step listed earlier. It helps isolate files and lets them use their own “package” independent of their original dependencies — without making a copy. However, DiffOp use cases are more obscure than those of MergeOp. BuildKit’s user guides cover these scenarios in greater detail.

DiffOp shares behavioral characteristics with MergeOp:

- Input Layer Reuse – When there’s a “known path” from the lower input to the upper input, a container image export that includes the DiffOp result will just reuse the layers that separate lower and upper. When the path is unknown, DiffOp still outputs a consistent result but cannot re-use input layers.

- Laziness – DiffOp is also implemented lazily; the on-disk filesystem representation is only created when needed. Exporting a DiffOp result as a container image does not require “unlazying” its inputs (except where there’s no known path between lower and upper, as mentioned above).

- Hardlink Optimizations – BuildKit creates DiffOp results locally with the same optimizations (and current caveats) as MergeOp.

Example: Two New LLB Operations

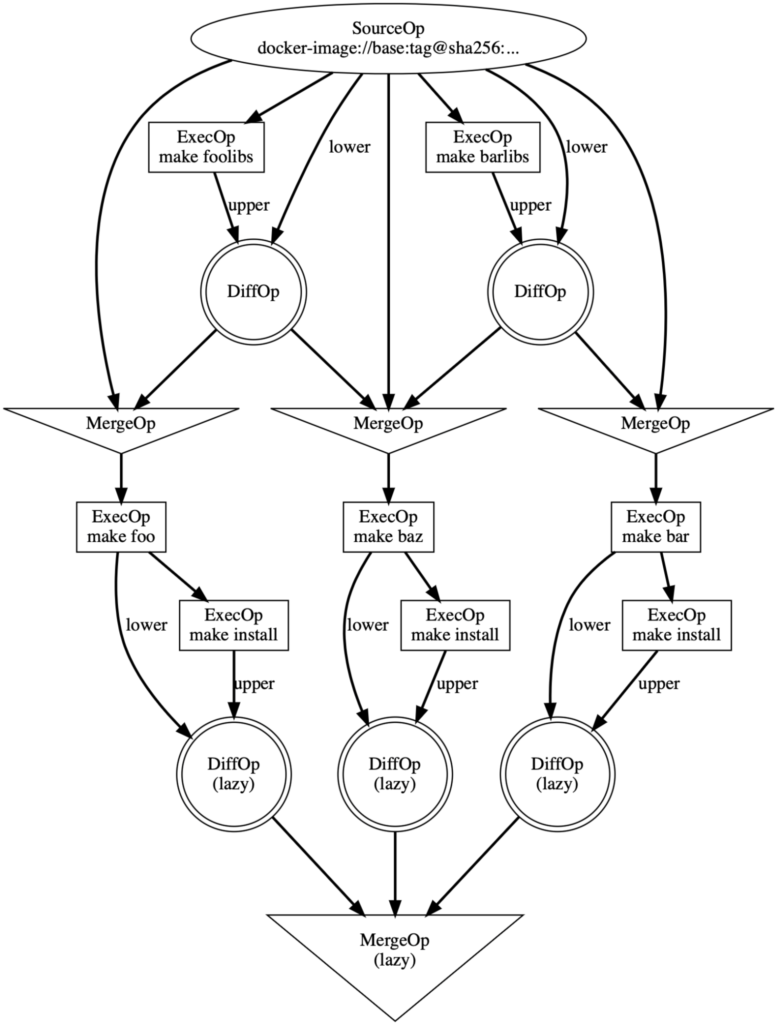

Let’s see how these two new LLB operations can solve our problems from the previous section. Here’s a new-and-improved approach for building a vertex in a dependency DAG using LLB:

- Combine dependencies – Plug dependencies into a MergeOp (in topologically-sorted order, if needed).

- Execute Build – Run the build as an ExecOp atop the merged filesystem from step one.

- Package Artifacts – Use DiffOp (or equivalent techniques from the user guide) to extract build artifacts into their own independent filesystems. You can then plug DiffOp’s output into other MergeOps or export it directly.

Using those rules, here’s the updated LLB:

Now, let’s consider the same scenario as before, where we perform an initial build of the above LLB and a subsequent build with modified bar libs:

In this instance, only expected invalidations occur. BuildKit performs each build step using only vital dependencies. Cache invalidations cascade only to the vertices that actually depend on the change. There are no longer any copies of foo libs or foo that invalidate unnecessarily.

We owe this to the fact that invalidation of one MergeOp input doesn’t invalidate others, avoiding issues with linearized copy chains. While a MergeOp as a whole is invalidated when one input is invalidated, the merged filesystem is only created when strictly necessary.

The MergeOps for the bar and baz dependency closures must be created on disk since ExecOps run atop them. Luckily, hardlinks make this process efficient, provided that a supported snapshotter backend is in use.

The final MergeOp will not be created on-disk. This — in combination with DiffOp’s lazy implementation — means that the exported image will only consist of the layers created by the make install ExecOps. You won’t need to create any intermediate data or layers.
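We can rerun the toy cache-key experiment with the merge-based graph to see the difference. As before, this is a simplified model: the `key` helper and vertex descriptions are hypothetical, and a key is assumed to be a hash of a vertex’s definition plus its inputs’ keys.

```python
import hashlib
from typing import Dict, List


def key(definition: str, *input_keys: str) -> str:
    """Toy cache key: hash of the vertex definition plus its inputs' keys."""
    return hashlib.sha256("\0".join((definition, *input_keys)).encode()).hexdigest()


def merge_graph_keys(bar_flags: str) -> Dict[str, str]:
    base = key("source: base")
    foo_libs = key("exec: build foo libs", base)
    bar_libs = key("exec: build bar libs " + bar_flags, base)
    # Each binary is built atop a merge of only the dependencies it needs,
    # and then separated from that closure with a diff.
    foo = key("diff: make install foo", key("merge", base, foo_libs))
    bar = key("diff: make install bar", key("merge", base, bar_libs))
    baz = key("diff: make install baz", key("merge", base, foo_libs, bar_libs))
    return {"foo_libs": foo_libs, "bar_libs": bar_libs, "foo": foo, "bar": bar, "baz": baz}


def image_layers(keys: Dict[str, str]) -> List[str]:
    """The final merge just lines up its inputs' layers side by side."""
    return [keys["foo"], keys["bar"], keys["baz"]]


before = merge_graph_keys("")
after = merge_graph_keys("BUILDFLAG=something-new")
invalidated = sorted(name for name in before if before[name] != after[name])
reused_layers = [layer for layer in image_layers(after) if layer in image_layers(before)]
```

Only `bar_libs`, `bar`, and `baz` are invalidated, which matches the real dependency DAG, and the layer for `foo` carries over to the new image untouched.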

Key Takeaways

Merge and Diff enable filesystem operations to be composed and decomposed in ways mirroring the relationships expressed in software-dependency DAGs. By allowing filesystem layer reuse, BuildKit can maximize its cache reuse of those layers. This improves performance and reproducibility.

More Applications

In addition to building your dependency DAGs more efficiently, Merge and Diff unlock plenty of other interesting possibilities. We’ll explore a few hypothetical ones here.

Importing Package DAGs

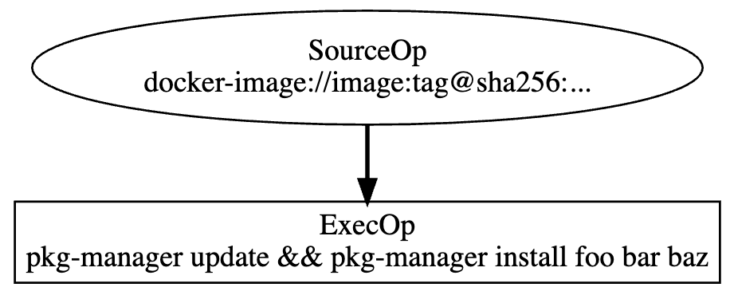

Many existing container-image builds install packages from a Linux distro package manager through an ExecOp (e.g. executing apt, apk, dnf, etc). LLB for this might resemble the following:

Similar patterns are common to language package managers like npm, pip, and Go modules.

This approach works, but suffers from the same caching problems described earlier. In this case, if one installed package changes, it invalidates that install step. You must run the entire process again, even if the package DAG is only partially modified. This is another case where LLB doesn’t encode any knowledge about your actual dependency DAG, which disrupts BuildKit’s ability to cache data effectively.

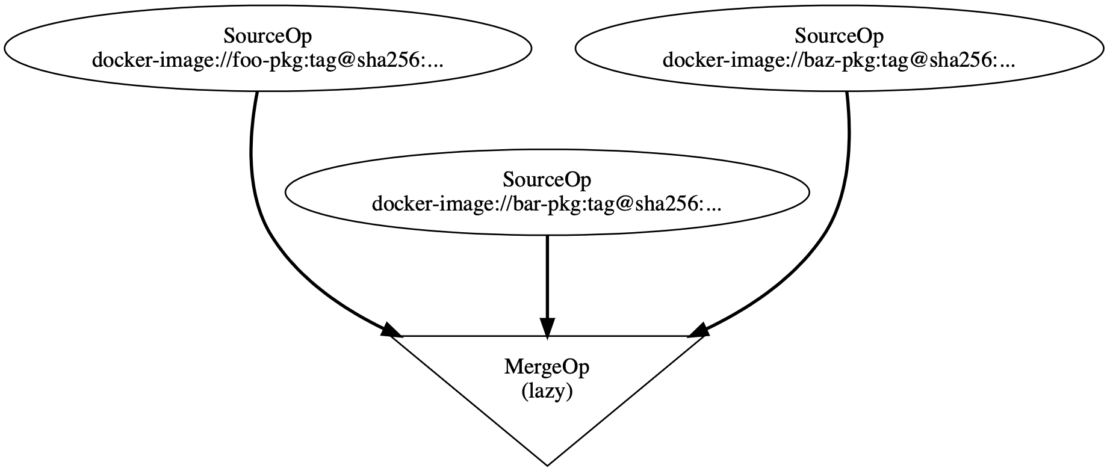

MergeOp unlocks new possibilities here. We can do the following:

- Take the list of desired packages and expand them into the full dependency DAG, including all transitive dependencies.

- Convert each package into an LLB state by downloading the package contents with an ExecOp. DiffOp may help you separate the artifacts. However, using a separate mount during the ExecOp may be adequate.

- Use MergeOp to combine those individual packages in topological order.

The LLB to implement this could look something like the following:

Note that we’re using separate ExecOp mounts instead of DiffOp, as described in the user guide. DiffOp is a workable solution if need be.

This solves the caching problems common to older approaches. You can import packages into a container image without duplicating data and work. If you run another build with a slightly different set of packages, BuildKit can re-use its cache for each package from the previous build.
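Here is a rough Python sketch of that per-package reuse. The package names and their dependencies in `PACKAGES` are invented for illustration, and `expand` and `fetch_all` are hypothetical helpers standing in for real package-manager metadata and per-package ExecOps.

```python
from typing import Dict, List, Optional

# Hypothetical package metadata: package -> direct dependencies.
PACKAGES: Dict[str, List[str]] = {
    "neovim": ["libuv", "musl"],
    "git": ["zlib", "musl"],
    "curl": ["zlib", "musl"],
    "libuv": ["musl"],
    "zlib": ["musl"],
    "musl": [],
}


def expand(wanted: List[str], order: Optional[List[str]] = None) -> List[str]:
    """Full dependency list for `wanted`, with dependencies before dependents."""
    order = [] if order is None else order
    for name in wanted:
        if name not in order:
            expand(PACKAGES[name], order)
            order.append(name)
    return order


def fetch_all(wanted: List[str], cache: Dict[str, dict]) -> List[str]:
    """Fetch each package as its own filesystem; return what was downloaded."""
    downloaded = []
    for name in expand(wanted):
        if name not in cache:
            cache[name] = {f"/pkg/{name}": name}  # stand-in for package contents
            downloaded.append(name)
    return downloaded


cache: Dict[str, dict] = {}
first = fetch_all(["neovim", "git"], cache)
second = fetch_all(["neovim", "curl"], cache)
```

The first build downloads five packages. The second build swaps `git` for `curl` and only needs to download `curl`, since everything else is already cached as an independent filesystem.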

Additionally, now that the package DAG has been compiled into LLB, you can extend it arbitrarily. Should you want to build your own software atop your imported packages, it’s as easy as taking your LLB from the above steps and adding your own ops.

Container Images as Lazy Package Merges

Say that someone used the above techniques to build or import a large package DAG, and then pushed each individual package in the DAG as a single layer image to a container registry.

Next, let’s say that someone has a list of packages that they want to convert into a container image. Using BuildKit, the “build” would consist of topologically sorting the dependency DAG of those packages and combining them with a MergeOp:

“Build” is in quotes because little actual work is required. Image vertices are lazy just like MergeOp and DiffOp, so the entire LLB DAG is lazy. In practice, this build consists of constructing a manifest for the container image, and pushing that to the registry. The input package images never need to be pulled down locally thanks to these lazy implementations. After pushing the manifest, anyone can grab that image from the registry and run it using the container runtime of their choice — just as they would with any other image.

In this scenario, the container image registry becomes a package cache and “builds” are just declarations of which packages must be present in a given image, pushed as a manifest to the registry.
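To illustrate how little work such a “build” involves, the sketch below assembles an OCI-style image manifest from layer descriptors that already exist in a registry. The media types come from the OCI image spec, but the digests, sizes, and the `merged_manifest` helper are placeholders for illustration, and a real image also needs a matching config blob.

```python
from typing import Dict, List

MANIFEST_TYPE = "application/vnd.oci.image.manifest.v1+json"
CONFIG_TYPE = "application/vnd.oci.image.config.v1+json"
LAYER_TYPE = "application/vnd.oci.image.layer.v1.tar+gzip"


def layer(digest: str, size: int) -> Dict[str, object]:
    """Descriptor for a layer blob that is already present in the registry."""
    return {"mediaType": LAYER_TYPE, "digest": digest, "size": size}


def merged_manifest(package_layers: List[List[dict]], config: dict) -> dict:
    """Line up the layers of each package image, in merge order."""
    return {
        "schemaVersion": 2,
        "mediaType": MANIFEST_TYPE,
        "config": config,
        "layers": [desc for layers in package_layers for desc in layers],
    }


# Placeholder digests; each package was pushed earlier as a single-layer image.
musl = [layer("sha256:1111", 400_000)]
zlib = [layer("sha256:2222", 60_000)]
curl = [layer("sha256:3333", 250_000)]
config = {"mediaType": CONFIG_TYPE, "digest": "sha256:cccc", "size": 512}

manifest = merged_manifest([musl, zlib, curl], config)
layer_digests = [desc["digest"] for desc in manifest["layers"]]
```

No layer content is read or written here; the manifest only references blobs by digest, which is why the package images never have to be pulled locally.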

A Few Wrenches

This is all pretty neat! However, there are some caveats:

- Package managers often support hooks that result in the execution of scripts during package installs. In many cases, you can disable these scripts for container image builds. When they’re strictly required, you can always represent them using ExecOps on top of MergeOps. However, that’ll mitigate some performance optimizations since you must create a merged filesystem locally.

- If a runtime package manager is required after image export, then the LLB will also need a way of merging a layer containing the package database. For example, this is necessary if you want to run apt commands in a Debian-based image — which may be useful within development environments.

- To convert a package DAG to LLB, you’ll need programmatic access to the package manager’s metadata database. This is easier said than done. You do have multiple options, such as parsing the CLI output or using library support, which you should evaluate on a case-by-case basis.

None of these issues are show-stoppers, but anyone creating tools that utilize Merge and Diff for the above use cases should keep them in mind.

Thanks!

My interest in Merge+Diff stems from my own attempts at building complex package DAGs with BuildKit. However, other BuildKit users were also interested in the features — particularly Netflix — and contracted me to design and implement them. Their support has been greatly appreciated, in particular from engineers Edgar Lee and Aaron Lehmann. Both have provided invaluable feedback from start to finish.

Also, many thanks to the community of maintainers of BuildKit — especially Tõnis Tiigi, who’s also expressed interest in these features for a while. Merge+Diff was fairly complex to design and not straightforward during code-review, but thanks to him and everyone else involved, I think we’ve created some really powerful tools. These should unlock lots of interesting use cases moving forward.