February 21, 2025: Update – Docker Hub

For the latest, please read "Revisiting Docker Hub Policies: Prioritizing Developer Experience."

November 5, 2024: Update – Important dates for new Docker subscription plans

To ensure a smooth transition, we’ve adjusted the timeline for our new subscription plans:

- Last Sale Date for Current Plans: Now December 9, 2024.

- New Subscription Plan Availability (Self-Serve): Now December 10, 2024.

- Changes to Personal Plan Consumption Pricing: Now effective December 10, 2024.

- Docker Hub Consumption Pricing: Starts March 1, 2025.

For more details, read the full announcement below or refer to the FAQ documentation.

—

At Docker, our mission is to empower development teams by providing the tools they need to ship secure, high-quality apps — FAST. Over the past few years, we’ve continually added value for our customers, responding to the evolving needs of individual developers and organizations alike. Today, we’re excited to announce significant updates to our Docker subscription plans that will deliver even more value, flexibility, and power to your development workflows.

Docker accelerating the inner loop

We’ve listened closely to our community, and the message is clear: Developers want tools that meet their current needs and evolve with new capabilities to meet their future needs.

That’s why we’ve revamped our plans to include access to ALL the tools our most successful customers are leveraging — Docker Desktop, Docker Hub, Docker Build Cloud, Docker Scout, and Testcontainers Cloud. Our new unified suite makes it easier for development teams to access everything they need under one subscription with included consumption for each new product and the ability to add more as they need it. This gives every paid user full access, including consumption-based options, allowing developers to scale resources as their needs evolve. Whether customers are individual developers, members of small teams, or work in large enterprises, the refreshed Docker Personal, Docker Pro, Docker Team, and Docker Business plans ensure developers have the right tools at their fingertips.

These changes increase access to Docker Hub across the board, bring more value into Docker Desktop, and grant access to the additional value and new capabilities we’ve delivered to development teams over the past few years. From Docker Scout’s advanced security and software supply chain insights to Docker Build Cloud’s productivity-generating cloud build capabilities, Docker provides developers with the tools to build, deploy, and verify applications faster and more efficiently.

Areas we’ve invested in during the past year include:

- The world’s largest container registry. To date, Docker has invested more than $100 million in Docker Hub, which currently stores over 60 petabytes of data and handles billions of pulls each month. We have improved content discoverability, in-depth image analysis, and image lifecycle management, and we now offer an even broader range of verified high-assurance content on Docker Hub.

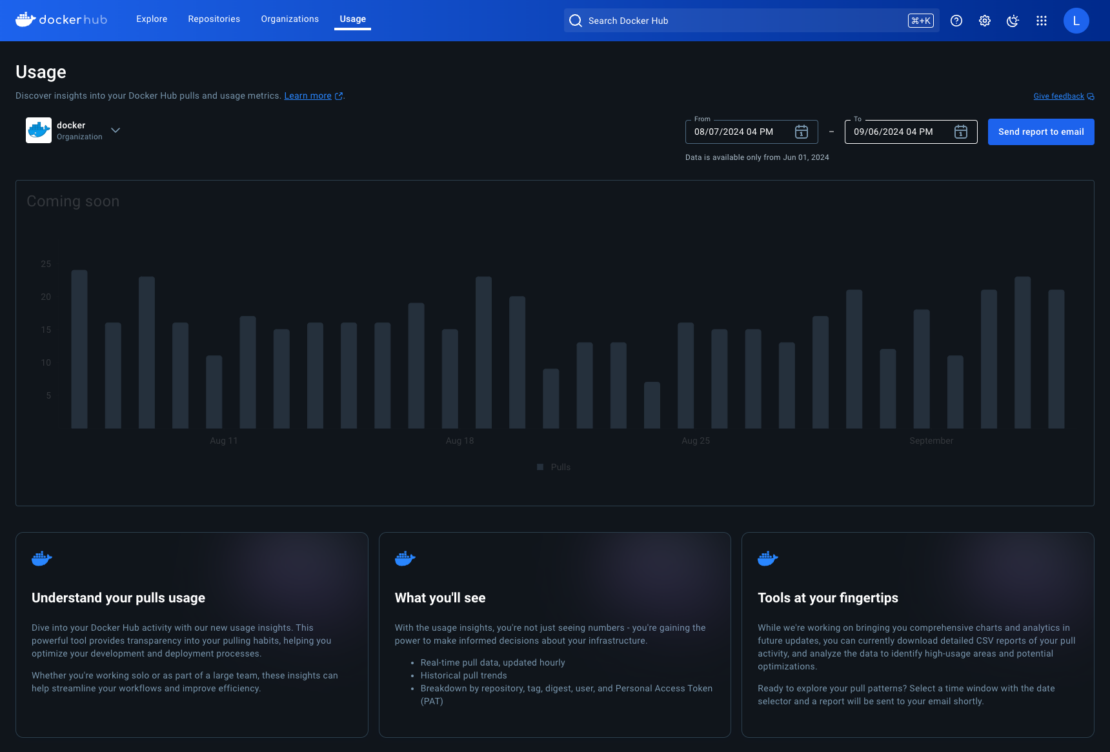

- Improved insights. From Builds View to inspecting GitHub Actions builds to Build Checks to Scout health scores, we’re giving teams more visibility into their usage and more insights to improve their development outcomes. We have additional Docker Desktop insights coming later this year.

- Securing the software supply chain. In October 2023, we launched Docker Scout, allowing developers to continuously address security issues before they hit production through policy evaluation and recommended remediations, and track the SBOM of their software. We later introduced new ways for developers to quickly assess image health and accelerate application security improvements across the software supply chain.

- Container-based testing automation. In December 2023, we acquired AtomicJar, makers of Testcontainers, adding container-based testing automation to our portfolio. Testcontainers Cloud offers enterprise features and a scalable, cloud-based infrastructure that provides a consistent Testcontainers experience across the org and centralizes monitoring.

- Powerful cloud-based builders. In January 2024, we launched Docker Build Cloud, combining powerful, native ARM & AMD cloud builders with shared cache that accelerates build times by up to 39x.

- Security, control, and compliance for businesses. For our Docker Business subscribers, we’ve enhanced security and compliance features, ensuring that large teams can work securely and efficiently. Role-based access control (RBAC), SOC 2 Type 2 compliance, centralized management, and compliance reporting tools are just a few of the features that make Docker Business the best choice for enterprise-grade development environments. And soon, we are rolling out organizational access tokens to make developer access easier at the organizational level, enhancing security and efficiency.

- Empowering developers to build AI applications. From introducing a new GenAI Stack to our extension for GitHub Copilot and our partnership with NVIDIA to our series of AI tips content, Docker is simplifying AI application development for our community.

As we introduce new features and continue to provide — and improve on — the world’s largest container registry, the resources to do so also grow. With the rollout of our unified suites, we’re also updating our pricing to reflect the additional value. Here’s what’s changing at a high level:

- Docker Business pricing stays the same but gains the additional value and features announced today.

- Docker Personal remains — and will always remain — free. This plan will continue to be improved upon as we work to grant access to a container-first approach to software development for all developers.

- Docker Pro will increase from $5/month to $9/month, and Docker Team will increase from $9/user/month to $15/user/month (prices with annual discounts). Docker Business pricing remains the same.

- We’re introducing image pull and storage limits for Docker Hub. This will impact fewer than 3% of accounts, namely the highest commercial consumers. For many of our Docker Team and Docker Business customers with Service Accounts, the new higher image pull limits will eliminate previously incurred fees.

- Docker Build Cloud minutes and Docker Scout analyzed repos are now included, providing enough minutes and repos to enhance the productivity of a development team throughout the day.

- We’re implementing consumption-based pricing for all integrated products, including Docker Hub, to provide flexibility and scalability beyond the plans.
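To make the Pro and Team price changes listed above concrete, here is a minimal Python sketch. It uses only the prices quoted in this post; the function names and the seat counts are hypothetical, and it ignores taxes, consumption charges, and any negotiated pricing.

```python
# Monthly prices in USD as quoted in this announcement.
# Docker Pro is priced per account; Docker Team is priced per user.
OLD_PRICES = {"Docker Pro": 5, "Docker Team": 9}
NEW_PRICES = {"Docker Pro": 9, "Docker Team": 15}


def monthly_cost(plan: str, prices: dict, seats: int = 1) -> int:
    """Return the monthly subscription cost in USD (hypothetical helper)."""
    return prices[plan] * seats


def percent_increase(plan: str) -> float:
    """Return the price increase for a plan, as a percentage rounded to 0.1."""
    old, new = OLD_PRICES[plan], NEW_PRICES[plan]
    return round((new - old) / old * 100, 1)


if __name__ == "__main__":
    # Illustrative assumption: a team of 10 users on Docker Team.
    before = monthly_cost("Docker Team", OLD_PRICES, seats=10)
    after = monthly_cost("Docker Team", NEW_PRICES, seats=10)
    print(f"Docker Team, 10 seats: ${before}/month -> ${after}/month")
    print(f"Docker Pro increase: {percent_increase('Docker Pro')}%")
```

For a hypothetical 10-seat team, this works out to $90 per month before the change and $150 per month after it, before any included or added consumption is considered.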

More value at every level

Our updated plans are packed with more features, higher usage limits, and simplified pricing, offering greater value at every tier. They include:

- Docker Desktop: We’re expanding on Docker Desktop as the industry-leading container-first development solution with advanced security features, seamless cloud-native compatibility, and tools that accelerate development while supporting enterprise-grade administration.

- Docker Hub: Docker subscriptions cover Hub essentials, such as private and public repo usage. To ensure that Docker Hub remains sustainable and continues to grow as the world’s largest container registry, we’re introducing consumption-based pricing for image pulls and storage. This update also includes enhanced usage monitoring tools, making it easier for customers to understand and manage usage.

- Docker Build Cloud: We’ve removed the per-seat licenses for Build Cloud and increased the included build minutes for Pro, Team, and Business plans — enabling faster, more efficient builds across projects. Customers will have the option to add build minutes as their needs grow, but they will be surprised at how much time they save with our speedy builders. For customers using CI tools, Build Cloud’s speed can even help save on CI bills.

- Docker Scout: Docker Team and Docker Business plans will offer continuous vulnerability analysis for an unlimited number of Scout-enabled repositories. The integration of Docker Scout’s health scores into Docker Pro, Team, and Business plans helps customers maintain security and compliance with ease.

- Testcontainers Cloud: Testcontainers Cloud helps customers streamline testing workflows, saving time and resources. We’ve removed the per-seat licenses for Testcontainers Cloud under the new plans and included cloud runtime minutes for Docker Pro, Docker Team, and Docker Business, available to use for Docker Desktop or in CI workflows. Customers will have the option to add runtime minutes as their needs grow.

Looking ahead

Docker continues to innovate and invest in our products, and was most recently recognized as developers’ most used, desired, and admired developer tool in the 2024 Stack Overflow Developer Survey.

These updates are just the beginning of our ongoing commitment to providing developers with the best tools in the industry. As we continue to invest in our tools and technologies, development teams can expect even more enhancements that will empower them to achieve their development goals.

New plans take effect starting December 10, 2024. The Docker Hub plan limits will take effect on March 1, 2025. No charges on Docker Hub image pulls or storage will be incurred between December 10, 2024, and February 28, 2025. For existing annual and month-to-month customers, these new plan entitlements will take effect at their next renewal date that occurs on or after December 10, 2024, giving them ample time to review and understand the new offerings. Learn more about the new Docker subscriptions and see a detailed breakdown of features in each plan. We’re committed to ensuring a smooth transition and are here to support customers every step of the way.
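The timeline in the paragraph above can be sketched as a couple of date checks. This is only an illustration based on the dates stated in this post (the February 2025 update at the top points to later policies); the function names and the sample renewal dates are hypothetical.

```python
from datetime import date
from typing import Iterable, Optional

# Dates taken from this announcement.
NEW_PLANS_START = date(2024, 12, 10)   # new plans take effect
HUB_LIMITS_START = date(2025, 3, 1)    # Docker Hub plan limits take effect


def first_renewal_under_new_plans(renewal_dates: Iterable[date]) -> Optional[date]:
    """Return the first renewal date on or after December 10, 2024, if any.

    For existing customers, the post says new entitlements start at that renewal.
    """
    eligible = [d for d in sorted(renewal_dates) if d >= NEW_PLANS_START]
    return eligible[0] if eligible else None


def hub_consumption_charges_apply(day: date) -> bool:
    """Return True once Docker Hub pull and storage charges can be incurred."""
    return day >= HUB_LIMITS_START


if __name__ == "__main__":
    # Hypothetical month-to-month customer renewing on the 20th of each month.
    renewals = [date(2024, 11, 20), date(2024, 12, 20), date(2025, 1, 20)]
    print(first_renewal_under_new_plans(renewals))
    print(hub_consumption_charges_apply(date(2025, 2, 28)))
```

In this example, the November 20 renewal stays on the old entitlements, the December 20 renewal is the first under the new plans, and February 28, 2025 still falls inside the no-charge window for Docker Hub.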

Stay tuned for more updates or reach out to learn more. And as always, thank you for being a part of the Docker community.

Read the FAQ documentation to learn more.