We had a great turnout for our recent webinar “Demystifying VMs, Containers, and Kubernetes in the Hybrid Cloud Era,” and so many questions came in via the chat that we weren’t able to answer all of them in real time or in the Q&A at the end. We’ll cover the answers to the top questions in two posts (yes, there were a lot of questions!).

First up, we’ll take a look at IT infrastructure and operations topics, including whether you should deploy containers in VMs or make the leap to containers on bare metal.

VMs or Containers?

Among the top questions was whether users should just run a container platform on bare metal or run it on top of their virtual infrastructure, which is not surprising given the webinar topic.

- A Key Principle: one driver for containerization is to abstract applications and their dependencies away from the underlying infrastructure. It’s our experience that developers don’t often care about the underlying infrastructure (or at least they’d prefer not to). Docker and Kubernetes are infrastructure agnostic. We have no real preference.

- The goal – yours and ours: provide a platform that developers love to use, AND provide the operational and security tools required to keep your applications running in production and maintain the platform.

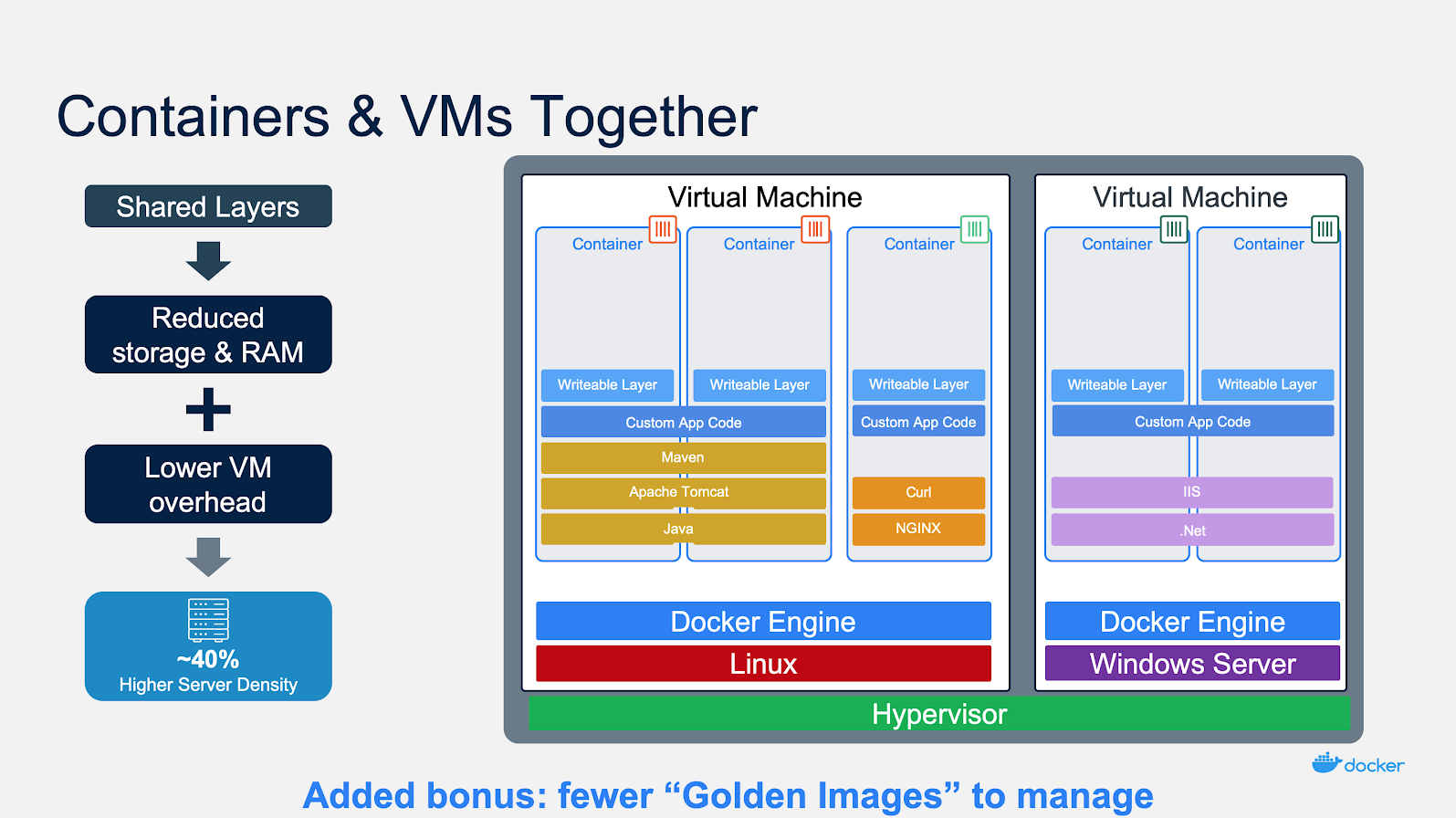

- So VMs or containers? It depends. If all of your operational expertise and tooling are built around virtualization, you might not want to change that right out of the gate when you deploy a container platform. On the other hand, if cost reduction or performance overhead is more important to you, maybe you’ll decide you don’t want to pay for a hypervisor anymore. The good news: when you containerize applications, you can likely reduce the number of VMs and underlying servers by at least 30 or 40 percent.

Whatever you do, avoid making a container platform decision that’s driven purely by your infrastructure of today. If developers feel like they don’t have flexibility, they quickly adopt their own tools, creating a second wave of shadow IT.

Containers and Networking

We didn’t go very deep on networking or storage in the webinar because they are topics that can easily fill multiple webinars on their own, but there were a few common questions.

How do you connect multiple containers together and expose the applications to external users and services?

- For simple applications, you can use the networking tools that are built right into both the Docker Engine and Kubernetes.

- If you’re running a container and want to map an external port to an internal port you can add a simple parameter to a command to open communications:

docker run --publish <external port>:<internal port> <image name>

- Both Swarm and Kubernetes allow two containers to communicate with each other while keeping that connection hidden from external traffic. You can do that very simply on a Docker Engine or Swarm cluster using Docker Compose to define your services and their networks. In Kubernetes, you of course have similar capabilities.
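As a rough illustration of the Compose approach described above, the sketch below writes a minimal Compose file with two services on one private network. The service names (`web`, `cache`), the network name (`backend`), the images, and the port numbers are all assumptions chosen for illustration, not something taken from the webinar. Only `web` publishes a port, so `cache` can be reached from `web` by its service name but is not exposed outside the host.

```shell
# Minimal sketch: all names, images, and ports below are hypothetical.
cat > docker-compose.yml <<'EOF'
services:
  web:
    image: nginx
    ports:
      - "8080:80"      # external port 8080 maps to container port 80
    networks:
      - backend
  cache:
    image: redis
    networks:
      - backend        # no "ports" entry, so not published externally
networks:
  backend:
EOF

# On a host with Docker and the Compose plugin installed, start the services with:
#   docker compose up --detach
```

The `ports` entry plays the same role as `--publish` on the `docker run` command line, while the shared network is what lets the two containers talk to each other privately.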

What are Calico and Tigera, and how do they fit in to the networking design? What about NSX or other networking solutions?

- For more advanced applications or those running in production, you might want additional features and capabilities beyond what the built-in networking drivers support. When you have hundreds or thousands of containers, you’ll need a better way to handle routing, discovery, security and other network concerns at scale. The Kubernetes community supports more advanced networking plugins through the standardized Container Network Interface (CNI). CNI plugins provide enhanced capabilities you won’t find in the default network drivers.

- Project Calico is one of the most common open source CNI plugins you will find in Kubernetes, and it works for both Linux and Windows containers. Calico is maintained by Tigera, which works closely with the Kubernetes community to define and contribute to the CNI standard. Docker Enterprise includes Calico as our “batteries included, but swappable” CNI plugin.

- For enterprises that need even greater security and management, including auditing, reporting, greater scale, and integration with service meshes, you might look to a commercial product like Tigera Secure.

- CNI ensures a standard networking interface that can support different ecosystem solutions. If you’re a VMware customer and already invested in NSX, you might go that route instead.

Sizing and Optimizing Infrastructure for Containers

We received quite a few questions about how to size a design for Docker & Kubernetes. They boiled down to two main questions:

What’s the maximum number of containers you can run on a single Docker host?

- The answer: it depends. Remember, containers are just processes that consume RAM and CPU directly from the host. Since there’s no hypervisor layer or additional OS between the application and the host, a host should be able to run at least as many processes in containers as it did prior to containerization. In fact, most organizations end up running around 40% more work on a host because multiple applications can share the same base OS, and because many VMs are over-provisioned.

How do I size my environment for Docker/Kubernetes?

- As you start thinking about running containers in production, you should look at bringing in expertise to help guide you through this exercise. Similar to the previous answer, on average we see about a 40% reduction in the number of VMs, but that average is across a broad set of applications and you’ll want to learn how to estimate as you go forward and add more applications. We’ve seen customers do it on their own, but it takes time and you end up learning a lot from your own mistakes before you get it right. You can greatly accelerate your path with a little help.
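To make the arithmetic concrete, here is a small shell sketch that applies a percentage reduction to a current VM count. The function name `estimate_vms` and the starting figure of 100 VMs are hypothetical; the 40% figure is the average quoted above and will vary with your application mix, so treat the result as a first guess rather than a sizing plan.

```shell
# estimate_vms CURRENT_VMS REDUCTION_PERCENT
# Prints the estimated VM count after containerization, rounded up.
estimate_vms() {
  local vms=$1 pct=$2
  echo $(( (vms * (100 - pct) + 99) / 100 ))
}

# Hypothetical starting point: 100 VMs, with the 40% average reduction.
estimate_vms 100 40   # prints 60
```

Rounding up errs on the side of slightly more capacity, which is usually the safer direction for an early estimate.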

Getting Started

We saved this one for last because this is by far the area with the most questions. Fortunately, they’re much easier questions to answer and many of the resources are free!

Where can I learn more about optimizing and managing containers?

- If you want to know more about the Docker Trusted Registry, Docker Kubernetes Service and the Universal Control Plane, you can get a free hosted trial of Docker Enterprise. An introductory walkthrough is provided.

- Want classes and training with an instructor? We have that, too. There are classes for operators and developers, as well as classes on Kubernetes and security.

Next week, we’ll cover questions about Kubernetes and Docker together, software pipelines and trusted content.