Introduction

In today’s fast-paced development world, CTOs, dev managers, and product managers demand quicker turnarounds for features and defect fixes. “No problem, boss,” you say. “We’ll just use containers.” And you would be right, but once you start digging in and looking at ways to get started with containers, well, quite frankly, it’s complex.

One of the biggest challenges is getting a toolset installed and set up so that you can build images, run containers, and duplicate a production Kubernetes cluster locally. And then shipping containers to the Cloud, well, that’s a whole ‘nother story.

Docker Desktop and Docker Hub are two of the foundational toolsets to get your images built and shipped to the cloud. In this two-part series, we’ll install and set up Docker Desktop, build some images, and run them using Docker Compose. Then we’ll take a look at how we can ship those images to the cloud, set up automated builds, and deploy our code into production using Docker Hub.

Docker Desktop

Docker Desktop is the easiest way to get started with containers on your development machine. Docker Desktop comes with the Docker Engine, Docker CLI, Docker Compose and Kubernetes. With Docker Desktop, there is no cloning of repos, running makefiles, or searching StackOverflow to fix build and install errors. You just download the installer for your OS and double-click it to start the installation. Let’s quickly walk through the process now.

Installing Docker Desktop

Docker Desktop is available for Mac and Windows. Navigate to the Docker Desktop homepage and choose your OS.

Once the download has completed, double-click the installer and follow the instructions to install Docker Desktop. For more information on installing for your specific operating system, see the Docker Desktop installation documentation.

Docker Desktop UI Overview

Once you’ve downloaded and installed Docker Desktop and the whale icon has become steady, you are all set: Docker Desktop is running on your machine.

Dashboard

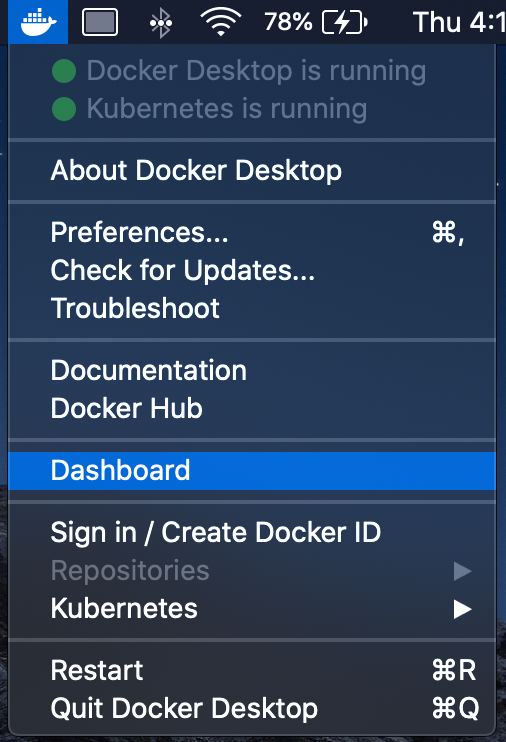

Now, let’s open the docker dashboard and take a look around.

Click on the Docker icon and choose “Desktop” from the dropdown menu.

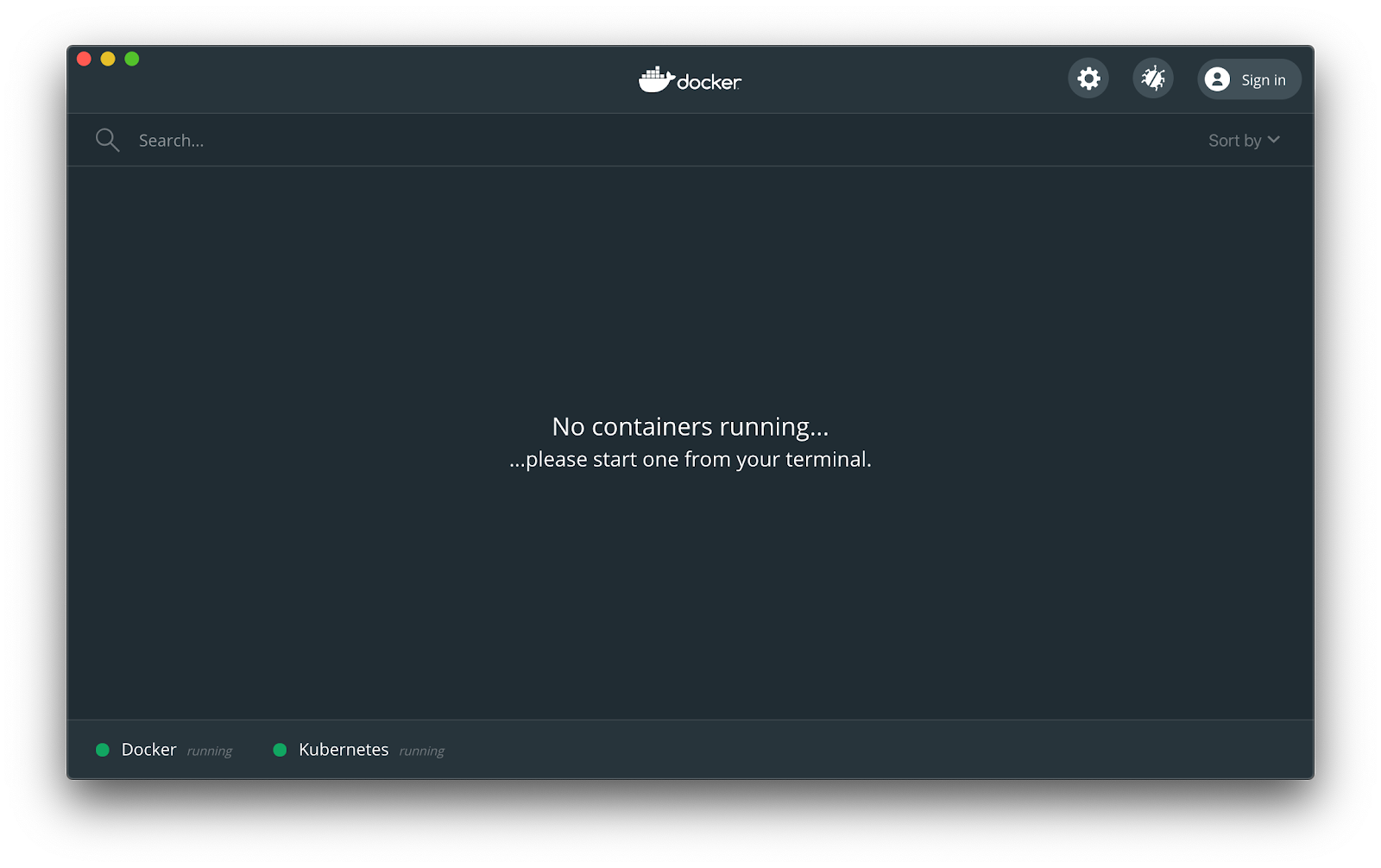

The following window should open:

As you can see, we do not have any containers running at this time. We’ll fix that in a minute but for now, let’s take a quick tour of the dashboard.

Log in with Docker ID

The first thing we want to do is log in with our Docker ID. If you do not already have one, head over to Docker Hub and sign up. Go ahead, I’ll wait. 😁

Okay, in the top right corner of the Dashboard, you’ll see the Sign in button. Click on it and enter your Docker ID and password. If you see your Docker ID there instead, you are already logged in.

Settings

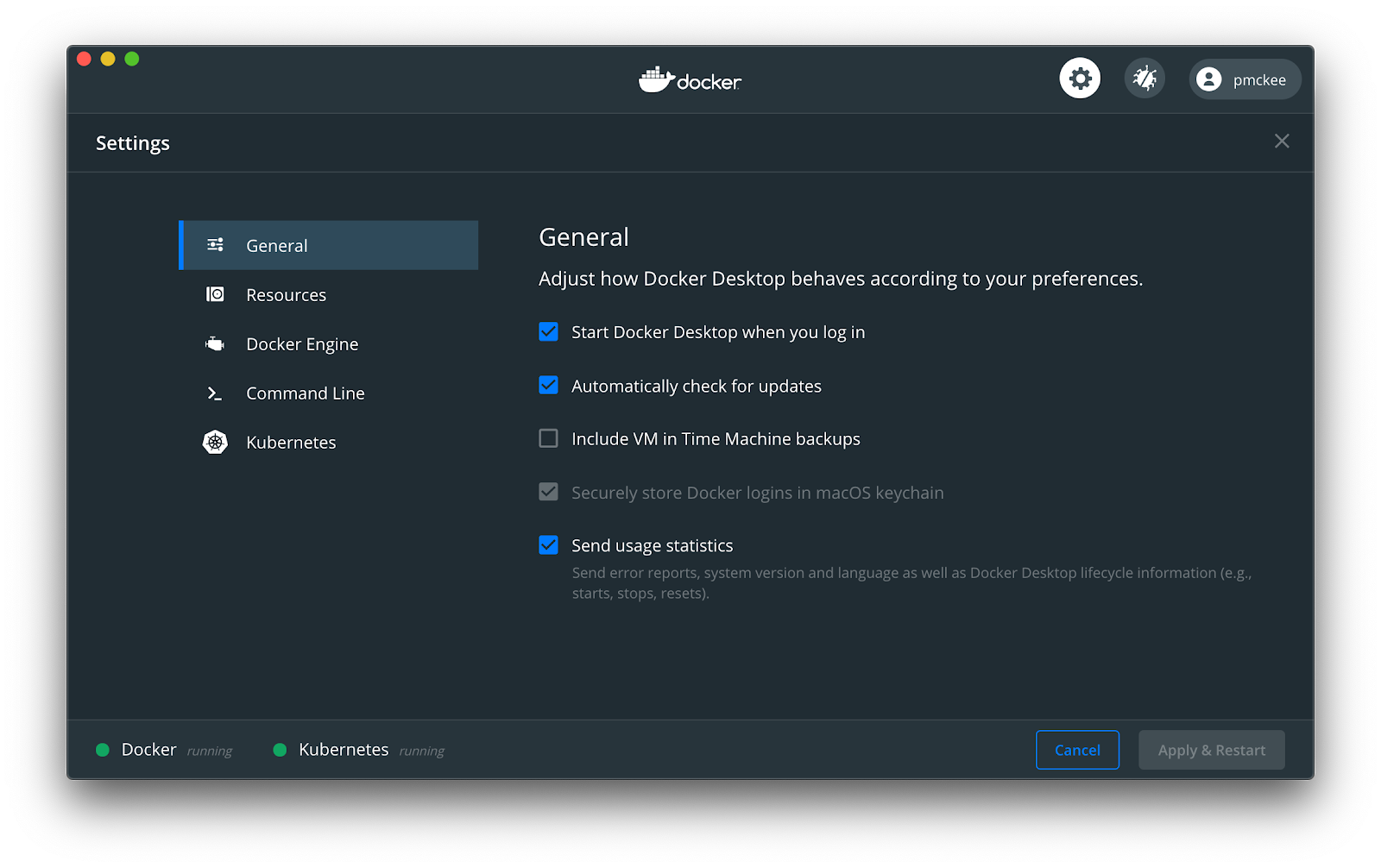

Now let’s take a look at the settings you can configure in Docker Desktop. Click on the settings icon in the upper right hand corner of the window and you should see the Settings screen:

General

Under this tab you’ll find the general settings, such as whether to start Docker Desktop when you log in to your machine, whether to check automatically for updates, whether to include the Docker Desktop VM in backups, and whether Docker Desktop sends usage statistics to Docker.

The default settings are fine. You really do not need to change them unless you are doing advanced image builds and need to back up your working images, or you want more control over when Docker Desktop starts.

Resources

Next, let’s take a look at the Resources tab. This tab and its sub-tabs are where you control the resources allocated to your Docker environment. The default settings are sufficient to get started. If you are building a lot of images or running a lot of containers at once, you might want to increase the number of CPUs and the amount of memory. You can find more information about these settings in our documentation.

Docker Engine

If you want to make more advanced changes to the way the Docker Engine runs, this is the tab for you. On Linux systems, the Docker Engine daemon is configured using the /etc/docker/daemon.json file. With Docker Desktop, you add the same configuration settings here in the text area provided, and they are passed to the Docker Engine that Docker Desktop runs. All available configuration options can be found in the documentation.
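Because the text area takes the same JSON as daemon.json, a small syntax slip such as a trailing comma is easy to make. As a minimal sketch, assuming you draft your settings as text before pasting them in, the Python below parses that text with the standard json module and reports where it breaks. The function name check_daemon_config is hypothetical, and the keys "debug" and "log-level" are just example daemon options; the documentation has the full list.

```python
import json


def check_daemon_config(text: str) -> dict:
    """Parse daemon.json-style text and return it as a dict.

    Raises ValueError with a readable message if the text is not valid JSON
    or is not a single JSON object.
    """
    try:
        config = json.loads(text)
    except json.JSONDecodeError as err:
        # lineno/colno point at the first position the parser could not handle
        raise ValueError(
            f"invalid JSON at line {err.lineno}, column {err.colno}: {err.msg}"
        ) from err
    if not isinstance(config, dict):
        # daemon.json must be one JSON object, not a list or a bare value
        raise ValueError("daemon configuration must be a JSON object")
    return config


if __name__ == "__main__":
    good = '{"debug": true, "log-level": "info"}'
    bad = '{"debug": true, "log-level": "info",}'  # trailing comma is not valid JSON
    print(check_daemon_config(good))
    try:
        check_daemon_config(bad)
    except ValueError as err:
        print(err)
```

This only checks the syntax; whether a given key is a valid daemon option is still up to the Docker Engine.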

Command Line

Turning experimental CLI features on and off is as simple as toggling a switch. These features are for testing and feedback purposes only, so don’t rely on them in production: they could be changed or removed in future builds.

You can find more information about what experimental features are included in your build on this documentation page.

Kubernetes

Docker Desktop comes with a standalone Kubernetes server and client that are integrated with the Docker CLI. This tab is where you enable and disable that Kubernetes server. This instance of Kubernetes is not configurable and runs a single-node cluster.

The Kubernetes server runs within a Docker container and is intended for local testing only. When Kubernetes support is enabled, you can deploy your workloads, in parallel, on Kubernetes, Swarm, and as standalone containers. Enabling or disabling the Kubernetes server does not affect your other workloads.

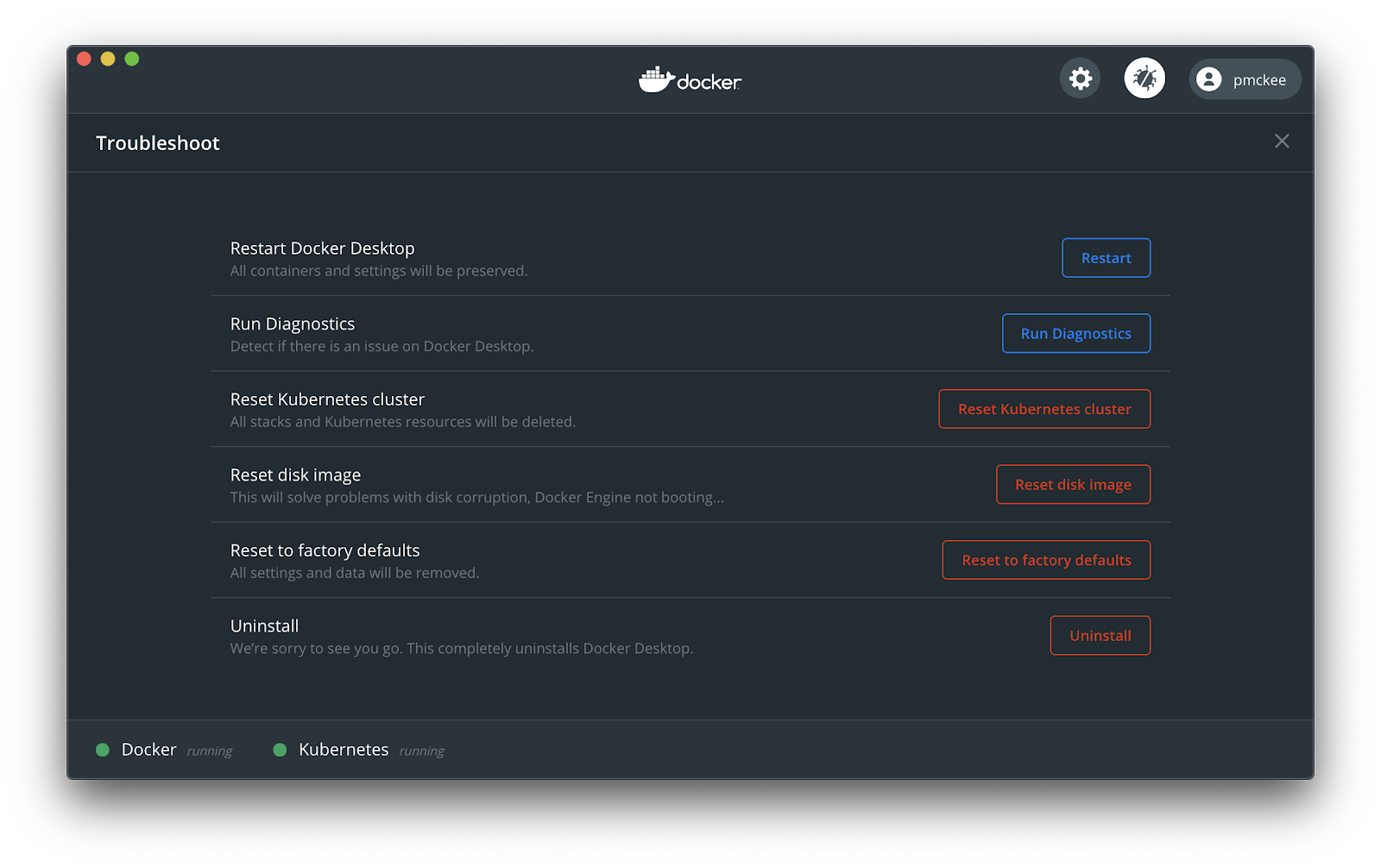

Troubleshoot

Let’s move on to the troubleshoot screen. Click on the but icon in the upper right hand corner of the window and you should see the following Troubleshoot screen:

Here is where you can restart Docker Desktop, Run Diagnostics, Reset features and Uninstall Docker Desktop.

Building Images and Running Containers

Now that we have Docker Desktop installed and have a good overview of the UI, let’s jump in and create a Docker image that we can run and ship to Docker Hub.

Docker consists of two major components: the Engine that runs as a daemon on your system and a CLI that sends commands to the daemon to build, ship and run your images and containers.

In this article, we will be primarily interacting with Docker through the CLI.

Difference between Images and Containers

A container is a process running on your system just like any other process. But the difference between a “container” process and a “normal” process is that the container process has been sandboxed or isolated from other resources on the system.

One of the main pieces of this isolation is the filesystem. Each container is given its own private filesystem which is created from the Docker image. This Docker image is where everything is packaged for the processes to run – code, libraries, configuration files, environment variables and runtime.

Creating a Docker Image

I’ve put together a small Node.js application that I’ll use for demonstration purposes, but any web application would follow the same principles that we will be talking about. Feel free to use your own application and follow along.

First, let’s clone the application from GitHub.

$ git clone git@github.com:pmckeetx/projectz.git

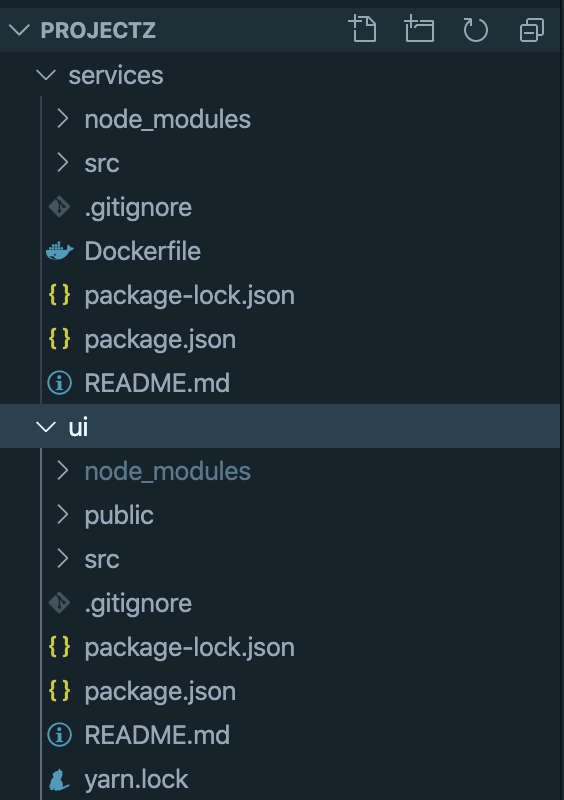

Open the project in your favorite text editor. You’ll see that the application is made up of a UI written in React.js and a backend service written in Node.js and Express.

Let’s install the dependencies and run the application locally to make sure everything is working.

Open your favorite terminal and cd into the root directory of the project.

$ cd services

$ npm install

Now let’s install the UI dependencies.

$ cd ../ui

$ npm install

Let’s start the services project first. Open a new terminal window and cd into the services directory. To run the application, execute the following command:

$ npm run start

In your original terminal window, start the UI. To start the UI run the following command:

$ npm run start

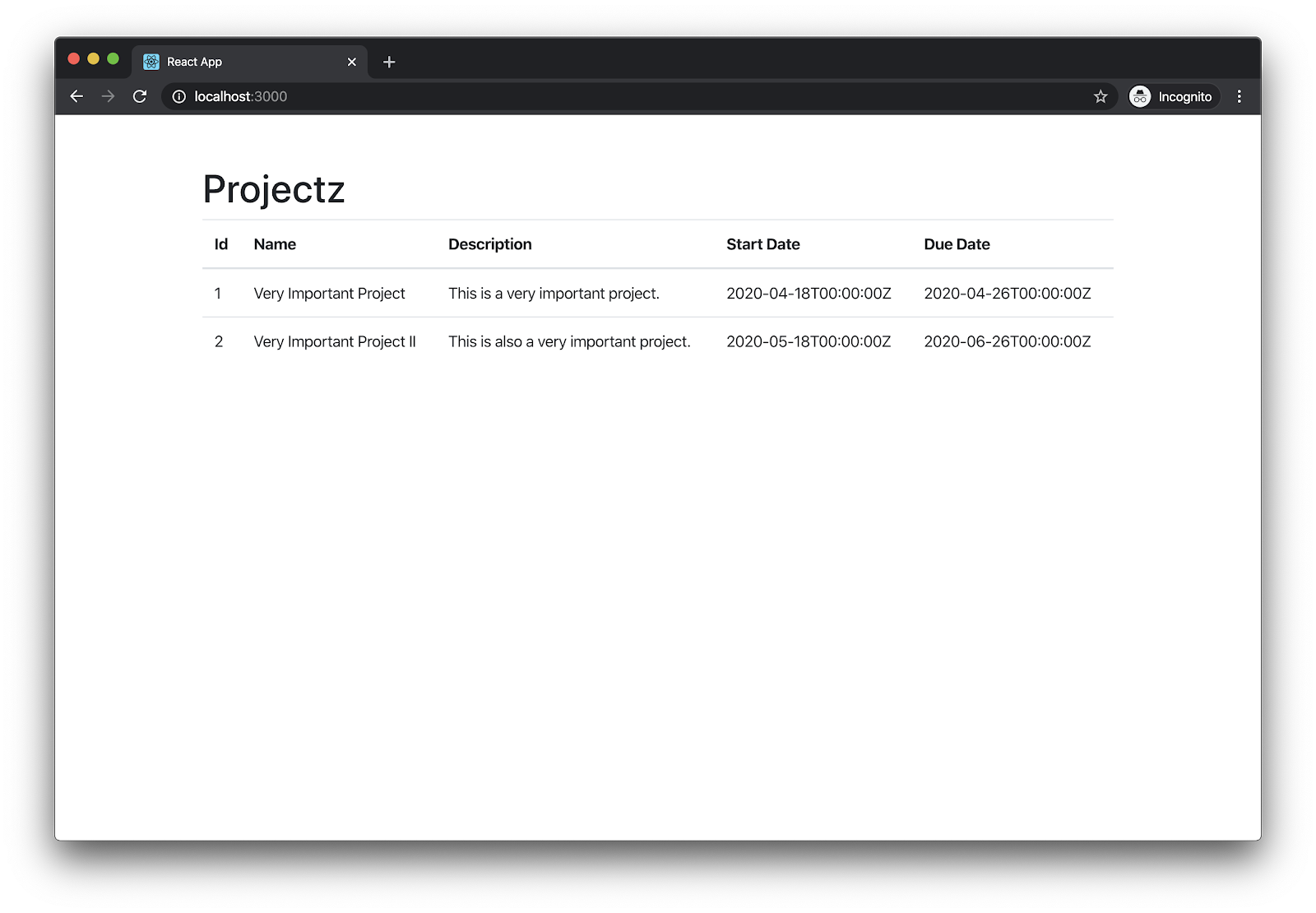

If a browser window is not opened for you automatically, fire up your favorite browser and navigate to http://localhost:3000/

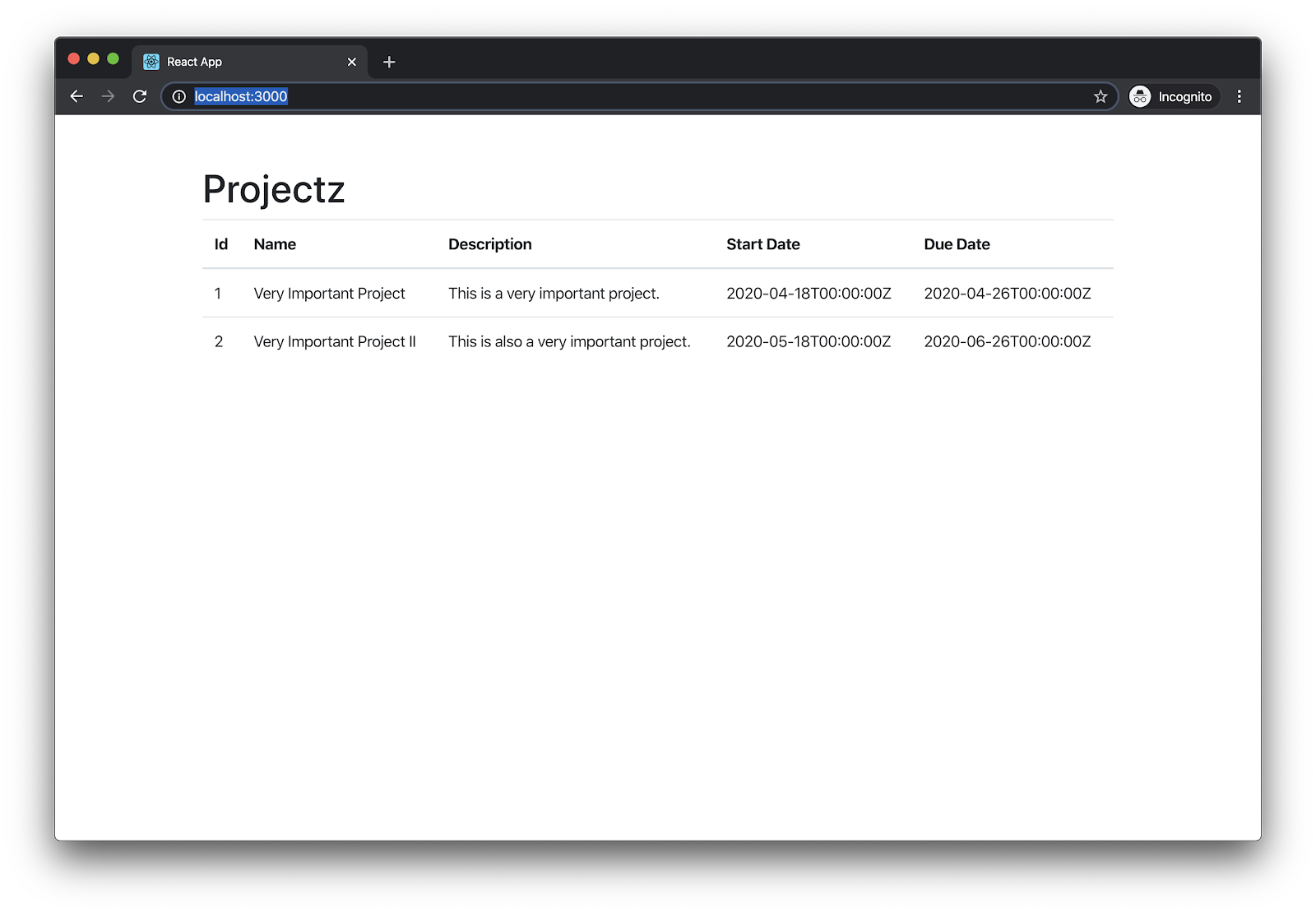

You should see the following screen:

If you do not see a list of projects or get an error message, make sure you have the services project running.

Okay, great, we have everything set up and running.

Dockerfile

Before we build our images, let’s take a quick look at the Dockerfile we’ll use to build the services image.

In your text editor, open the Dockerfile for the services project. You should see the following:

    FROM node:lts
    ARG NODE_ENV=production
    ENV NODE_ENV $NODE_ENV
    WORKDIR /code
    ARG PORT=80
    ENV PORT $PORT
    COPY package.json /code/package.json
    COPY package-lock.json /code/package-lock.json
    RUN npm ci
    COPY . /code
    CMD [ "node", "src/server.js" ]

A Dockerfile is a text file of instructions that tells Docker how to build your image.

    FROM node:lts

The first line in the file tells Docker that we will be using the long-term-support (LTS) release of Node.js as our base image.

    ARG NODE_ENV=production
    ENV NODE_ENV $NODE_ENV

Next, we declare a build argument named NODE_ENV with a default value of “production” and then set the NODE_ENV environment variable to the value of that build argument.

    WORKDIR /code

Now we tell Docker to create a directory named /code and use it as our working directory. The RUN and CMD instructions that follow will execute in this directory.

    ARG PORT=80
    ENV PORT $PORT

Here we create another build argument, PORT, with a default value of 80. This build argument is then used to set the PORT environment variable.

    COPY package.json /code/package.json
    COPY package-lock.json /code/package-lock.json
    RUN npm ci

These COPY commands copy the package.json and package-lock.json files into our image, where npm ci uses them to install the Node.js dependencies.

    COPY . /code

Now we’ll copy our application code into the image.

Quick Note: Dockerfiles are executed from top to bottom. Before running each instruction, Docker checks its build cache. If the instruction and its inputs (for a COPY, the files being copied) have not changed, Docker reuses the cached layer instead of running the command. If something has changed, the cache is invalidated for that instruction and for every instruction after it, and those commands are run again. So if we want the fastest build possible and don’t want to invalidate the entire cache on every image build, we should place the instructions that change most often as close to the bottom of the Dockerfile as possible.

For example, we copy the package.json and package-lock.json files into the image before we copy the source code, because the source code changes far more often than the list of modules in package.json. That way, editing the source code does not force npm ci to run again.

    CMD [ "node", "src/server.js" ]

The last line in our Dockerfile tells Docker which command to execute when a container is started from our image. In this case, we want to execute the command: node src/server.js
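The caching rule is easier to see with a toy model. The Python sketch below is only loosely modeled on Docker’s behavior and is not its actual cache algorithm: it assumes each step’s cache key is a hash of the previous step’s key, the instruction text, and the contents of any files that step copies. The step list is an abbreviated version of the services Dockerfile, and the file contents are made up for illustration.

```python
import hashlib


def layer_keys(steps, files):
    """Return one cache key per step.

    Each key hashes the parent step's key, the instruction text and the
    contents of any files the step copies, so a change anywhere earlier in
    the chain changes every key after it.
    """
    keys = []
    parent = ""
    for instruction, inputs in steps:
        h = hashlib.sha256()
        h.update(parent.encode())
        h.update(instruction.encode())
        for name in inputs:  # files read from the build context by this step
            h.update(name.encode())
            h.update(files[name].encode())
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_steps(steps, old_files, new_files):
    """Return the instructions whose cache key differs between two builds."""
    old = layer_keys(steps, old_files)
    new = layer_keys(steps, new_files)
    return [step[0] for step, a, b in zip(steps, old, new) if a != b]


# Abbreviated services Dockerfile; the second element lists the
# (hypothetical) build-context files each step reads.
STEPS = [
    ("FROM node:lts", []),
    ("WORKDIR /code", []),
    ("COPY package.json /code/package.json", ["package.json"]),
    ("COPY package-lock.json /code/package-lock.json", ["package-lock.json"]),
    ("RUN npm ci", []),
    ("COPY . /code", ["package.json", "package-lock.json", "src/server.js"]),
    ('CMD [ "node", "src/server.js" ]', []),
]

if __name__ == "__main__":
    before = {"package.json": "v1", "package-lock.json": "v1", "src/server.js": "v1"}
    print(rebuilt_steps(STEPS, before, {**before, "src/server.js": "v2"}))
    print(rebuilt_steps(STEPS, before, {**before, "package.json": "v2"}))
```

With this ordering, editing src/server.js changes only the keys of the last two steps, so npm ci stays cached; editing package.json changes every key from its COPY onward, including RUN npm ci.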

Building the image

Now that we understand our Dockerfile, let’s have Docker build the image.

In the root of the services directory, run the following command:

$ docker build --tag projectz-svc .

This tells Docker to build our image using the Dockerfile located in the current directory and then tag that image with projectz-svc.
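If you later script your builds, it helps to assemble the command as an argument list rather than one long string. A minimal sketch, assuming Python 3 and the docker CLI on your PATH: build_command() (a hypothetical helper) only constructs the argv, so it can be checked without Docker, using the standard --tag and --build-arg flags. A --build-arg value overrides the matching ARG default in the Dockerfile, such as NODE_ENV=production.

```python
import subprocess


def build_command(tag, context=".", build_args=None):
    """Assemble the argv for `docker build`, adding one --build-arg per entry."""
    argv = ["docker", "build", "--tag", tag]
    for name, value in (build_args or {}).items():
        argv += ["--build-arg", f"{name}={value}"]
    argv.append(context)  # the build context directory goes last
    return argv


def build_image(tag, context=".", build_args=None):
    """Run the build; check=True raises CalledProcessError if docker exits non-zero."""
    subprocess.run(build_command(tag, context, build_args), check=True)


if __name__ == "__main__":
    # Equivalent to: docker build --tag projectz-svc --build-arg NODE_ENV=development .
    print(build_command("projectz-svc", build_args={"NODE_ENV": "development"}))
```

Passing a list to subprocess.run avoids shell quoting problems when a build argument contains spaces or special characters.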

You should see a similar output when Docker has finished building the image.

Successfully built 922d1db89268

Successfully tagged projectz-svc:latest

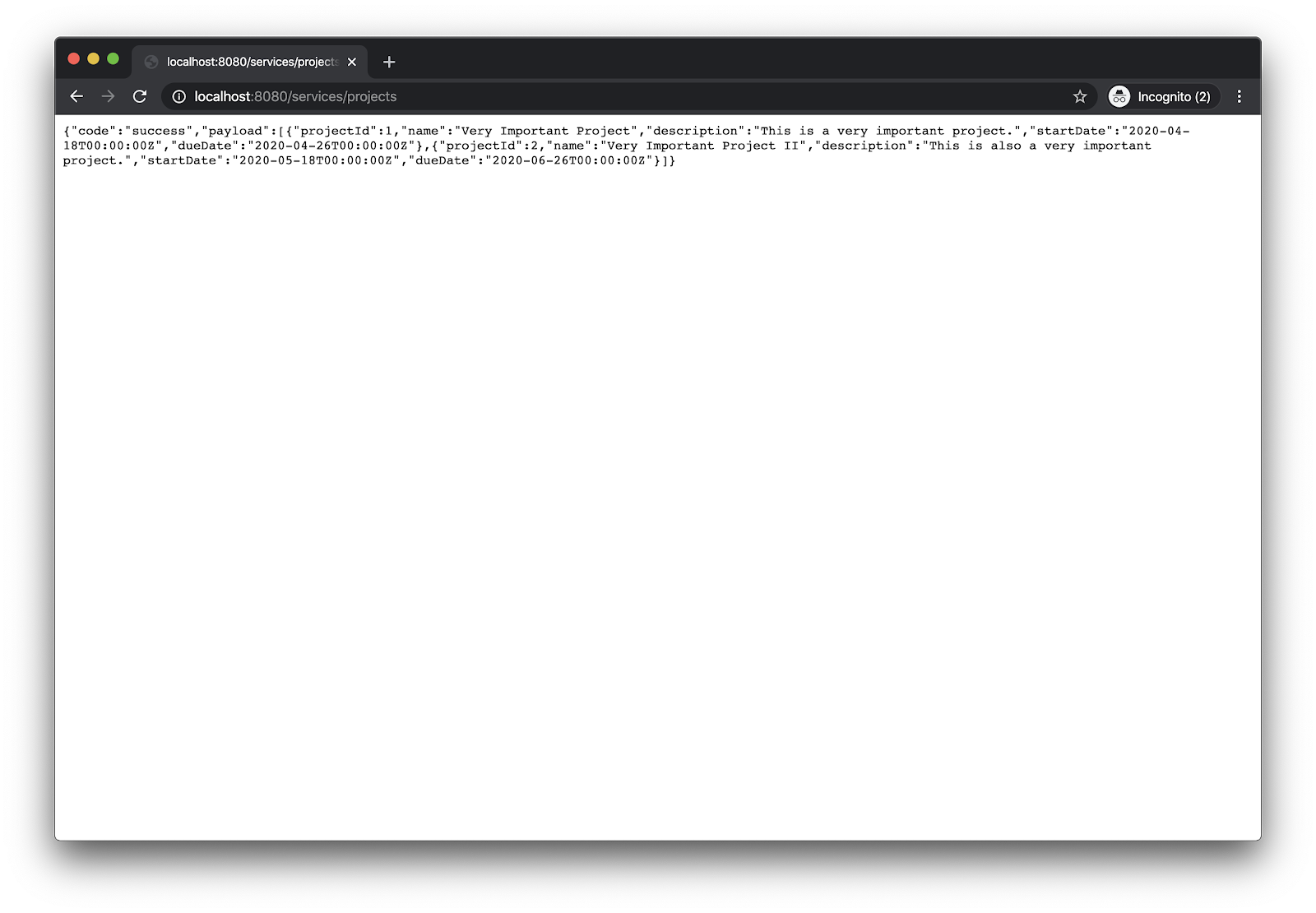

Now let’s run our container and make sure we can connect to it. Run the following command to start a container from our image and map port 8080 on your machine to port 80 inside the container.

$ docker run -it --rm --name services -p 8080:80 projectz-svc

You should see the following printed to the terminal:

Listening on port: 80

Open your browser and navigate to http://localhost:8080/services/projects

If all is well, you will see a bunch of JSON returned in the browser and “GET /services/projects” printed in the terminal.
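If you would rather check the endpoint from a script than from the browser, here is a minimal sketch using only the Python standard library. It assumes the container is published on localhost:8080 as above and only confirms that /services/projects answers with something that parses as JSON, since the shape of the data is not covered here. The function name fetch_json is hypothetical.

```python
import json
import urllib.request

# An opener with an empty ProxyHandler ignores http_proxy/https_proxy
# settings, since the container is published on this machine.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def fetch_json(url: str, timeout: float = 5.0):
    """GET url and decode the body as JSON.

    Raises urllib.error.URLError (an OSError) if the server cannot be reached
    or answers with an HTTP error, and ValueError if the body is not JSON.
    """
    with _OPENER.open(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    url = "http://localhost:8080/services/projects"
    try:
        data = fetch_json(url)
    except (OSError, ValueError) as err:
        print(f"check failed for {url}: {err}")
    else:
        print(f"OK: received a JSON {type(data).__name__}")
```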

Let’s do the same for the front-end UI. I won’t walk you through its Dockerfile at this time, but we will revisit it when we look at pushing to the Cloud.

In your terminal, navigate to the ui directory and run the following commands:

$ docker build --tag projectz-ui .

$ docker run -it --rm --name ui -p 3000:80 projectz-ui

Again, open your favorite browser and navigate to http://localhost:3000/

Awesome!!!

Now, if you remember at the beginning of the article we took a look at the Docker Desktop UI. At that time we did not have any containers running. Open the Docker Dashboard by clicking on the whale icon (![]() ) either in the Notification area (or System Tray) on Windows or from the menu bar on Mac.

) either in the Notification area (or System Tray) on Windows or from the menu bar on Mac.

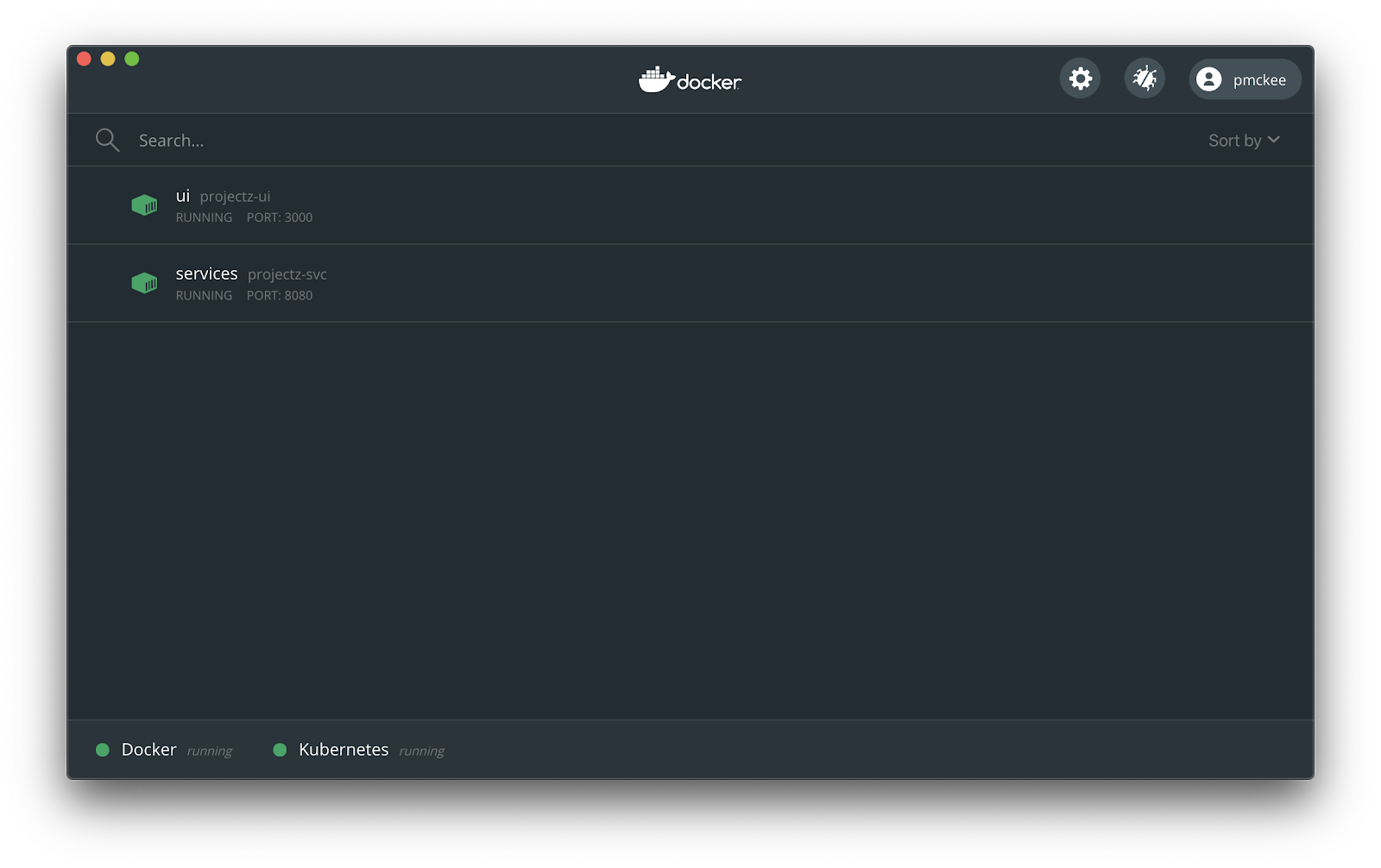

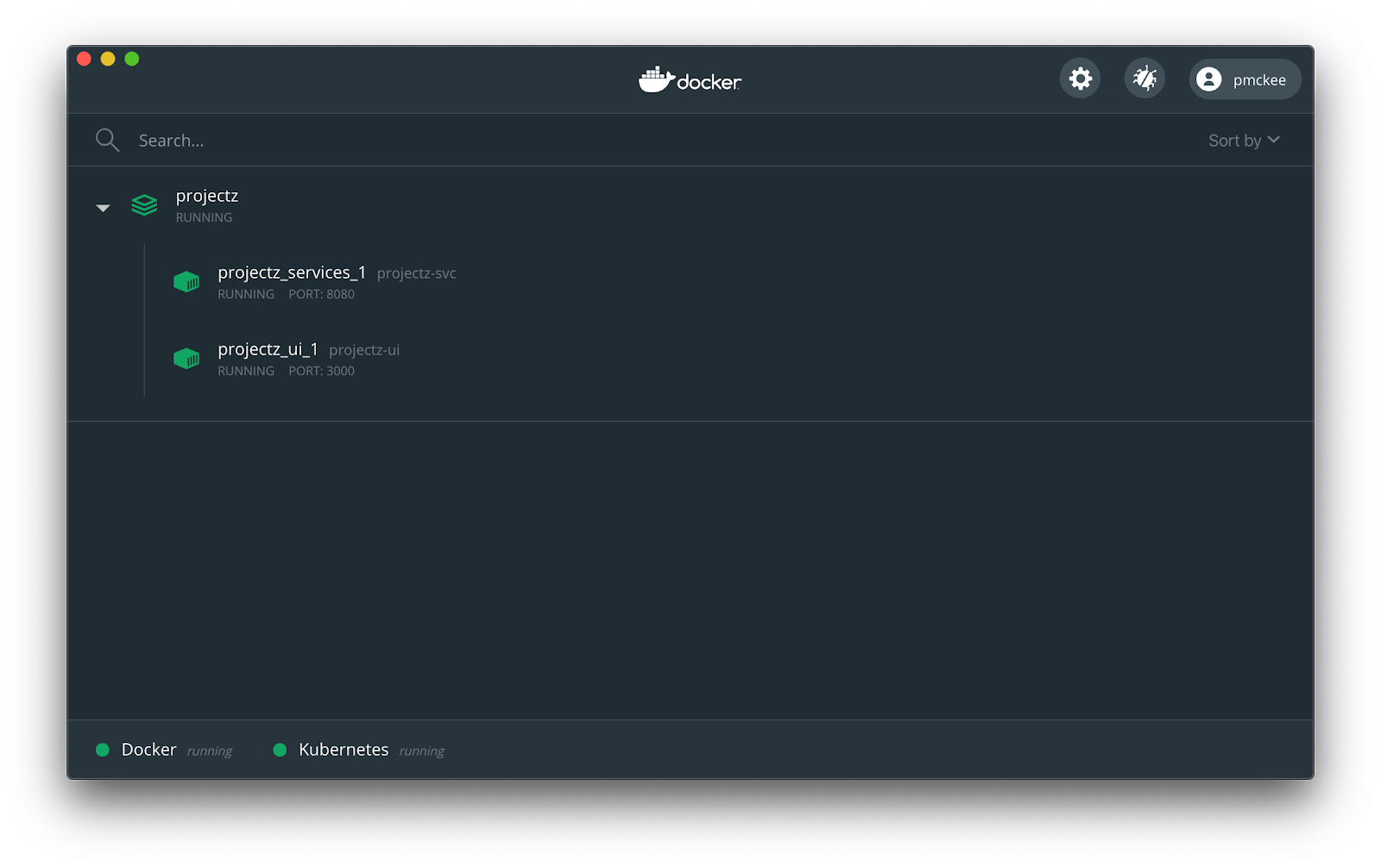

We can now see our two containers running:

If you do not see them running, re-run the following commands, each in its own terminal window:

$ docker run -it --rm --name services -p 8080:80 projectz-svc

$ docker run -it --rm --name ui -p 3000:80 projectz-ui

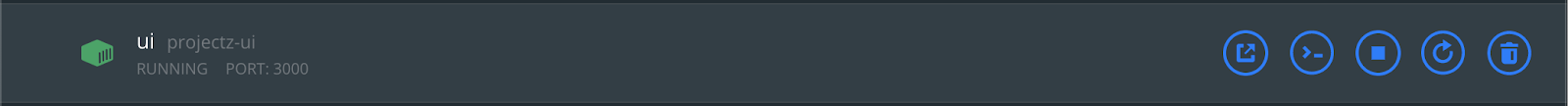

Hover your mouse over one of the images and you’ll see buttons appear.

With these buttons you can do the following:

- Open in a browser – If the container exposes a port, you can click this button and open your application in a browser.

- CLI – This button runs docker exec in a terminal for you, so you can run commands inside the container.

- Stop/Start – You can start and stop your container.

- Restart – You are also able to restart your container.

- Delete – You can also remove your container.

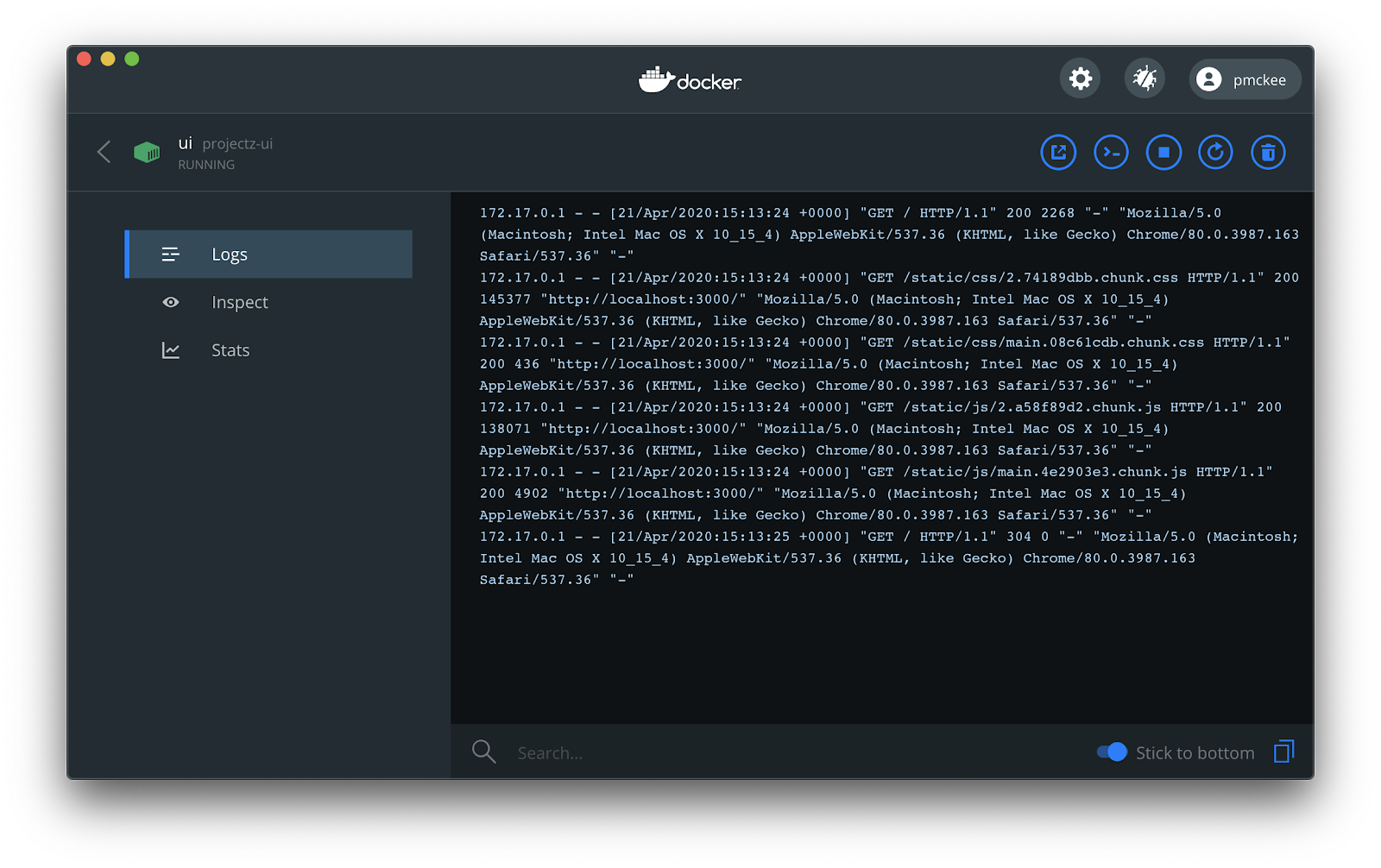

Now click on the ui container to view its details page.

On the details screen, we are able to view the container logs, inspect the container, and view stats such as CPU Usage, Memory Usage, Disk Read/Writes, and Networking I/O.

Docker-compose

Now let’s take a look at how docker-compose makes this a little easier. With docker-compose, we can configure both of our applications in one file and start them both with one command.

If you take a look in the root of our git repo, you’ll see a docker-compose.yml file. Open that file in your text editor and let’s have a look.

    version: "3.7"
    services:
      ui:
        image: projectz-ui
        build:
          context: ./ui
          args:
            NODE_ENV: production
            REACT_APP_SERVICE_HOST: http://localhost:8080
        ports:
          - "3000:80"
      services:
        image: projectz-svc
        build:
          context: ./services
          args:
            NODE_ENV: production
            PORT: "80"
        ports:
          - "8080:80"

This file combines all the parameters we passed to our two earlier commands to build and run our services.
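To see how the file lines up with the commands we ran by hand, here is a small illustrative sketch that turns a Python dict mirroring docker-compose.yml into equivalent docker build and docker run argument lists. It is an approximation only: Compose names containers and networks its own way and does much more. The dict is typed in by hand rather than parsed from the YAML file (parsing would need a third-party library such as PyYAML), and equivalent_commands is a hypothetical helper.

```python
# Hand-written mirror of docker-compose.yml (not parsed from the file).
COMPOSE = {
    "services": {
        "ui": {
            "image": "projectz-ui",
            "build": {
                "context": "./ui",
                "args": {
                    "NODE_ENV": "production",
                    "REACT_APP_SERVICE_HOST": "http://localhost:8080",
                },
            },
            "ports": ["3000:80"],
        },
        "services": {
            "image": "projectz-svc",
            "build": {
                "context": "./services",
                "args": {"NODE_ENV": "production", "PORT": "80"},
            },
            "ports": ["8080:80"],
        },
    }
}


def equivalent_commands(compose):
    """Yield (build_argv, run_argv) for each service, approximating `docker-compose up --build`."""
    for name, svc in compose["services"].items():
        build = ["docker", "build", "--tag", svc["image"]]
        for key, value in svc["build"].get("args", {}).items():
            build += ["--build-arg", f"{key}={value}"]
        build.append(svc["build"]["context"])
        run = ["docker", "run", "--rm", "--name", name]
        for mapping in svc.get("ports", []):
            run += ["-p", mapping]  # HOST:CONTAINER, same syntax as the compose file
        run.append(svc["image"])
        yield build, run


if __name__ == "__main__":
    for build, run in equivalent_commands(COMPOSE):
        print(" ".join(build))
        print(" ".join(run))
```

Each service entry carries the same three pieces of information we typed on the command line earlier: the image tag, the build context and arguments, and the port mapping.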

If you have not done so already, stop the services and ui containers that we started earlier. Because we started them with the --rm flag, stopping them also removes them.

$ docker stop services

$ docker stop ui
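If one of the earlier containers is still publishing port 3000 or 8080, Compose will not be able to publish the same host port. A minimal sketch, assuming the host ports from docker-compose.yml: it tries to bind each port and reports the ones that are already taken. The helper names are hypothetical, and a successful bind is a good hint rather than a guarantee that Docker can use the port.

```python
import socket


def is_port_free(port, host="0.0.0.0"):
    """Return True if host:port can be bound right now, i.e. nothing else holds it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:  # typically EADDRINUSE, or a permission error for low ports
            return False
        return True


def busy_ports(ports, host="0.0.0.0"):
    """Filter `ports` down to the ones that cannot be bound right now."""
    return [p for p in ports if not is_port_free(p, host)]


if __name__ == "__main__":
    # Host ports published in docker-compose.yml
    busy = busy_ports([3000, 8080])
    if busy:
        print(f"ports in use: {busy}; stop the old containers first (docker stop services ui)")
    else:
        print("ports 3000 and 8080 are free")
```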

Now let’s start our application using docker-compose. Make sure you are in the root of the git repo and run the following command:

$ docker-compose up --build

Docker-compose will build our images and tag them. Once that is finished, compose will start two containers – one for the UI application and one for the services application.

Open the Docker Desktop Dashboard and you will see that projectz is now running.

Expand projectz and you will see our two containers running:

If you click on either one of the containers, you will have access to the same details screens as before.

Docker-compose is a huge improvement over running individual docker build and docker run commands as we did before. Just imagine if your application were made up of tens or even hundreds of microservices and you had to start each container one at a time. With docker-compose, you can configure your application and its build arguments in one file and start all of its services with one command.

Next Steps

For more on how to use Docker Desktop, check out these resources:

Stay tuned for Part II of this series where we’ll use Docker Hub to build our images, run automated tests, and push our images to the cloud.