Compose. Build. Deploy.

Agents from Dev to Prod, Simplified

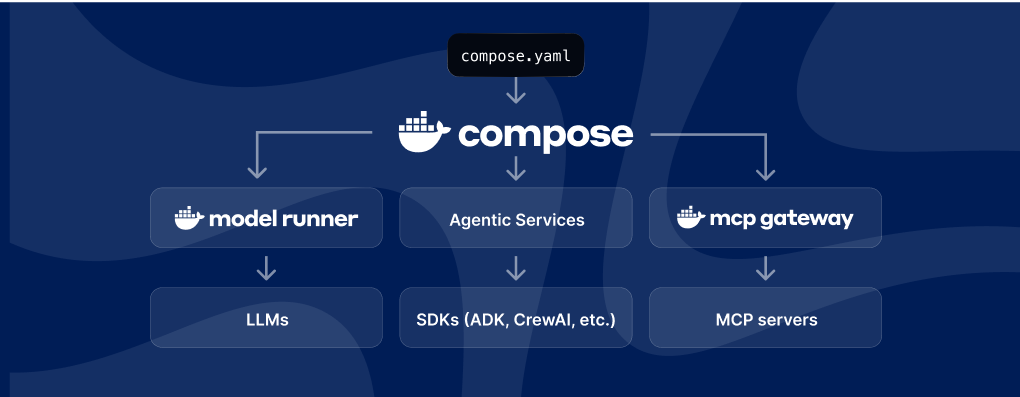

Docker Compose holds it all together.

Build with the SDKs you know and love

Run your favorite LLMs and connect to MCP servers

Deploy anywhere: locally, to Docker Cloud, or to the cloud of your choice

Break free of local limits

Natively Docker

Priced for development

Uniting the ecosystem

Docker is driving industry standards for building agents

Docker is the place to build AI agents, with seamless integration and support for the powerful tools you already know and love.

Start building agents with your go-to SDKs

The Easiest Way to Build AI Agents Starts with Docker

Start Fast. Learn Faster.

Explore popular models, orchestration tools, databases, and MCP servers on Docker Hub.

Package, Share, and Scale AI

To simplify AI experimentation and deployment, Docker Model Runner packages LLMs as OCI-compliant artifacts.

Secure by Default

Integrated gateways and security agents help teams stay compliant, auditable, and production-ready from day one.

Open and Extensible

Use the SDKs you love – LangGraph, CrewAI, Spring AI, and more. Docker embraces ecosystem diversity.

No New Tools. Just New Power.

Keep your same workflow, now ready for intelligent applications.

Local to Cloud Made Simple

Build and test locally, then deploy to Docker Offload or your cloud of choice with no infrastructure hurdles.

New Docker Innovations for Agent Development Are Here

Docker Offload

Docker Offload gives developers access to remote Docker engines, including GPUs, from the same Docker Desktop they already use.

MCP Gateway

MCP Gateway acts as a unified control plane, consolidating multiple MCP servers into a single, consistent endpoint for your AI agents.

Model Runner

We’ve integrated Model Runner with Compose, made it possible to run in the cloud, and expanded support for more LLMs, so your agentic apps run smarter and smoother from day one.

Hub MCP Server

Docker Hub MCP Server is a Model Context Protocol (MCP) server that connects Docker Hub APIs to LLMs.

Gordon

Gordon, our AI assistant, represents the future of working with Docker. Already available to help with tasks like containerizing your apps, Gordon now includes new DevSecOps capabilities in this beta release.

Compose

Docker Compose simplifies building and running agents, from development to production.